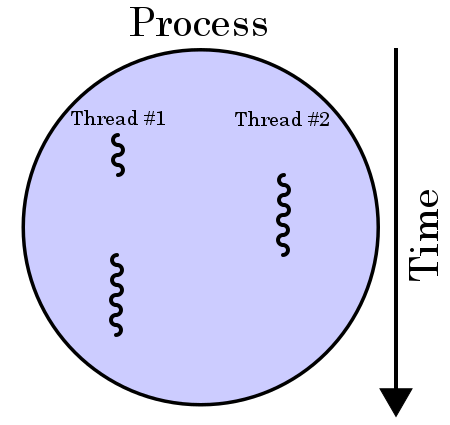

Just as a process represents a virtual computer, the thread abstraction represents a virtual processor.

So threads are an abstraction.

Abstractions reduce complexity. Thus, the first question is what problem threads solve. The second question is how they can be implemented.

As to the first question: Threads make implementing multitasking easier. The main idea behind this is that multitasking is unnecessary if every task can be assigned to a unique worker. Actually, for the time being, it's fine to generalize the definition even further and say that the thread abstraction represents a virtual worker.

Now, imagine you have a robot that you want to give multiple tasks. Unfortunately, it can only execute a single, step-by-step task description. Well, if you want to make it multitask, you can try creating one big task description by interleaving the separate tasks you already have. This is a good start, but the issue is that the robot sits at a desk and puts items on it while working. In order to get things right, you cannot just interleave instructions; you also have to save and restore the items on the desk whenever you switch from one task to another.

This works, but now it's hard to disentangle the separate tasks by simply looking at the big task description that you created. Also, the ceremony of saving and restoring the items on the desk is tedious and further clutters the task description.

Here is where the thread abstraction comes in and saves the day. It lets you assume that you have an infinite number of robots, each sitting in a different room at its own desk. Now, you can just throw task descriptions in a pot and everything else is taken care of by the thread abstraction's implementer. Remember? If there are enough workers, nobody has to multitask.
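As a minimal sketch of this idea, Python's standard `threading` module can play the role of the thread abstraction. The task `assemble`, its inputs and the `results` dictionary are made up for illustration; the point is only that each virtual robot keeps its own desk (a local variable) and nobody has to interleave anything by hand.

```python
import threading

def assemble(name, parts, results):
    desk = []                      # every virtual robot has its own desk (local state)
    for part in parts:
        desk.append(part)          # work step by step, unaware of the other robots
    results[name] = "+".join(desk)

# Hypothetical, fully independent task descriptions.
tasks = {"chair": ["leg", "seat"], "lamp": ["base", "bulb"]}
results = {}

# Throw the task descriptions in the pot: one virtual robot per task.
robots = [
    threading.Thread(target=assemble, args=(name, parts, results))
    for name, parts in tasks.items()
]
for robot in robots:
    robot.start()
for robot in robots:
    robot.join()                   # wait until every robot has finished
```

Note that the task descriptions contain no saving or restoring of the desk; whoever implements the thread abstraction takes care of that.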

Often it is useful to indicate your perspective and say robot to mean real robots and virtual robot to mean the robots the thread abstraction provides you with.

At this point the problem of multitasking is solved for the case when the tasks are fully independent. However, wouldn't it be nice to let the robots go out of their rooms, interact and work together towards a common goal? Well, as you probably guessed, this requires coordination. Traffic lights, queues - you name it.

As an intermediate summary, the thread abstraction solves the problem of multitasking and creates an opportunity for cooperation. Without it, we only had a single robot, so cooperation was unthinkable. However, it has also brought us the problem of coordination (synchronization). Now we know what problem the thread abstraction solves and, as a bonus, we also know what new challenge it creates.
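To make the coordination problem a bit more concrete, here is a small sketch in Python. The class `SharedShelf` and the function `stock` are hypothetical names; the lock acts like a traffic light that lets only one virtual robot touch the shared shelf at a time.

```python
import threading

class SharedShelf:
    """A shelf that several virtual robots put items on (illustrative only)."""

    def __init__(self):
        self.items = 0
        self._lock = threading.Lock()

    def put(self):
        # Read-modify-write on shared state: only one robot at a time may do this.
        with self._lock:
            self.items += 1

def stock(shelf, times):
    for _ in range(times):
        shelf.put()

shelf = SharedShelf()
robots = [threading.Thread(target=stock, args=(shelf, 10_000)) for _ in range(4)]
for robot in robots:
    robot.start()
for robot in robots:
    robot.join()
```

Without the lock, two robots could read the same count and both write back the same incremented value, so an update could get lost.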

But wait, why do we care about multitasking in the first place?

First, multitasking can increase performance if the tasks involve waiting. For example, while the washing machine is running, you can easily start preparing dinner. And while your dinner is in the oven, you can hang out the clothes. Note that here you wait because an independent component does the job for you. Tasks that involve waiting are called I/O-bound tasks.
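The effect can be sketched in a few lines of Python. This is only an illustration: `time.sleep` stands in for waiting on an independent component, and the function `wait_for` and the durations are assumptions made for the example.

```python
import threading
import time

def wait_for(appliance, seconds, finished):
    time.sleep(seconds)            # stand-in for waiting on the appliance
    finished.append(appliance)

finished = []
start = time.perf_counter()

jobs = [
    threading.Thread(target=wait_for, args=("washing machine", 0.3, finished)),
    threading.Thread(target=wait_for, args=("oven", 0.3, finished)),
]
for job in jobs:
    job.start()
for job in jobs:
    job.join()

# The two waits overlap, so the total should be close to the longer wait
# rather than the sum of both.
elapsed = time.perf_counter() - start
```

Running the two waits one after the other would take roughly the sum of the two durations; overlapping them is where the gain for I/O-bound tasks comes from.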

Second, if multitasking is done rapidly, and you look at it from a bird's-eye view, it appears as parallelism. It's a bit like how the human eye perceives a series of still images as motion if they are shown in quick succession. If I write a letter to Alice for one second and then to Bob for one second, can you tell whether I wrote the two letters simultaneously or alternately if you only look at what I'm doing every two seconds? Search for Multitasking Operating System for more on this.

Now, let's focus on the question of how the thread abstraction can be implemented.

Essentially, implementing the thread abstraction is about writing a task, a main task, that takes care of scheduling all the other tasks.

A fundamental question to ask is: If the scheduler schedules all tasks and the scheduler is also a task, then who schedules the scheduler?

Let's break this down. Say you write a scheduler, compile it and load it into the main memory of a computer at the address 1024, which happens to be the address that is loaded into the processor's instruction pointer when the computer is started. Now, your scheduler goes ahead and finds some tasks sitting precompiled in the main memory. For example, a task starts at the address 1,048,576. The scheduler wants to execute this task so it loads the task's address (1,048,576) into the instruction pointer. Huh, that was quite an ill-considered move because now the scheduler has no way to regain control from the task it has just started.

One solution is to insert jump instructions to the scheduler (address 1024) into the task descriptions before execution. Actually, you shouldn't forget to save the items on the desk the robot is working at, so you also have to save the processor's registers before jumping. The issue here is that it is hard to tell where to insert the jump instructions. If there are too many, they create too much overhead and if there are too few of them, one task might monopolize the processor.

A second approach is to ask the task authors to designate a few places where control can be transferred back to the scheduler. Note that the authors don't have to write the logic for saving the registers and inserting the jump instruction because it suffices that they mark the appropriate places and the scheduler takes care of the rest. This looks like a good idea because task authors probably know that, for example, their task will wait for a while after loading and starting a washing machine, so they let the scheduler take control there.
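This second approach maps quite naturally onto Python generators, so here is a rough sketch of it. The tasks `laundry` and `dinner` and the function `run_round_robin` are invented for illustration: each `yield` is a place the task author designated for handing control back, and the generator object keeps the task's local state between steps, which plays the role of saving the registers.

```python
from collections import deque

def laundry():
    yield "laundry: start the washing machine"   # good place to give up control
    yield "laundry: hang out the clothes"

def dinner():
    yield "dinner: chop the vegetables"
    yield "dinner: put the dish in the oven"
    yield "dinner: serve"

def run_round_robin(tasks):
    """Toy cooperative scheduler: run each task up to its next yield, in turn."""
    ready = deque(tasks)
    log = []
    while ready:
        task = ready.popleft()
        try:
            log.append(next(task))   # resume the task until it yields again
        except StopIteration:
            continue                 # the task has finished, drop it
        ready.append(task)           # otherwise queue it up for another turn
    return log

log = run_round_robin([laundry(), dinner()])
```

The scheduler only regains control when a task reaches a `yield`. A task that loops forever between two yields would starve all the others.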

The problem that neither of the above approaches solves is that of an erroneous or malicious task that, for example, gets caught up in an infinite loop and never jumps to the address where the scheduler lives.

Now, what to do if you cannot solve something in software? Solve it in hardware! What is needed is programmable circuitry wired up to the processor that acts like an alarm clock. The scheduler sets the timer and registers its own address (1024), and when the timer runs out, the alarm saves the registers and sets the instruction pointer to the address where the scheduler lives. This approach is called preemptive scheduling.
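Real preemption needs a hardware timer, so the following Python snippet is only a toy simulation of the idea, not how an operating system does it. The instruction set (`print`, `jump`), the `Task` class and `run_preemptively` are all made up for this example. The "timer" is the fixed number of instructions a task may execute before the scheduler takes over again, and the saved instruction pointer lives in `task.pc`.

```python
from dataclasses import dataclass

@dataclass
class Task:
    name: str
    program: list        # list of (op, arg) pairs, a made-up instruction set
    pc: int = 0          # saved instruction pointer
    done: bool = False

def run_preemptively(tasks, time_slice, max_ticks):
    """Toy machine: a task is interrupted after time_slice instructions."""
    trace = []
    ticks = 0
    while ticks < max_ticks and not all(t.done for t in tasks):
        for task in tasks:
            if task.done:
                continue
            for _ in range(time_slice):          # the alarm is set for this slice
                if ticks >= max_ticks:
                    break
                op, arg = task.program[task.pc]
                ticks += 1
                if op == "jump":
                    task.pc = arg                # may form an infinite loop
                elif op == "print":
                    trace.append(f"{task.name}:{arg}")
                    task.pc += 1
                if task.pc >= len(task.program):
                    task.done = True
                    break
            # The alarm went off (or the task ended); task.pc keeps its position.
    return trace

spinner = Task("spinner", [("jump", 0)])                       # never gives up control
polite = Task("polite", [("print", "a"), ("print", "b"), ("print", "c")])
trace = run_preemptively([spinner, polite], time_slice=2, max_ticks=20)
```

Even though `spinner` is stuck in an infinite loop and never hands control back voluntarily, `polite` still gets to run to completion.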

By now you have probably started to sense that implementing the thread abstraction is not like implementing a linked list. The most well-known implementers of the thread abstraction are operating systems. The threads they provide are sometimes called kernel-level threads. Since an operating system cannot afford to lose control, all major general-purpose operating systems use preemptive scheduling.

Arguably, operating systems feel like the right place to implement the thread abstraction because they control all the hardware components and can make well-informed decisions about when to suspend and resume threads. If a thread asks the operating system for the contents of a file stored on a hard drive, the operating system immediately knows that this operation will most likely take a while and can let another task occupy the processor in the meantime. Once the file's contents are available, it can pause the current task and resume the one that made the request.

However, the story doesn't end here because threads can also be implemented in user space. These implementers are normally compilers. Interestingly, as far as I know, kernel-level threads are as powerful as threads can get. So why do we bother with user-level threads? The reason, of course, is performance. User-level threads are more lightweight so you can create more of them and normally the overhead of pausing and resuming them is small.

User-level threads can be implemented using async/await. Do you remember that one option for getting control back to the scheduler is to make task authors designate places where the transition can happen? Well, the async and await keywords serve exactly this purpose.
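Here is a small sketch of this in Python using the standard `asyncio` package. The coroutines `laundry` and `dinner` and the sleep durations are assumptions made for illustration; `asyncio.sleep` stands in for waiting on an appliance. Each `await` marks a place where the event loop, which acts as the scheduler here, may switch to another task.

```python
import asyncio

async def laundry(log):
    log.append("laundry: start the washing machine")
    await asyncio.sleep(0.05)      # designated place to hand control back
    log.append("laundry: hang out the clothes")

async def dinner(log):
    log.append("dinner: put the dish in the oven")
    await asyncio.sleep(0.01)      # another designated place
    log.append("dinner: serve")

async def main():
    log = []
    # Both user-level threads run on a single kernel-level thread.
    await asyncio.gather(laundry(log), dinner(log))
    return log

log = asyncio.run(main())
```

Between two `await` points a coroutine cannot be interrupted by the event loop, which is exactly the cooperative behavior described above.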

Now, if you've made it this far, be prepared because here comes the real fun!

Have you noticed that we barely talked about parallelism? I mean, don't we use threads to run related computations in parallel and thereby increase throughput? Well, not quite. Actually, if you only want parallelism, you don't need this machinery at all. You just create as many tasks as the number of processing units you have and none of the tasks ever has to be paused or resumed. You don't even need a scheduler because you don't multitask.

In a sense, parallelism is an implementation detail. If you think about it, implementers of the thread abstraction can utilize as many processors as they wish under the hood. You can just compile some well-written multithreaded code from 1950, run it on a multicore today and see that it utilizes all cores. Importantly, the programmer who wrote that code probably didn't anticipate that piece of code being run on a multicore.

You could even argue that threads are abused when they are used to achieve parallelism: Even though people know they don't need the core feature, multitasking, they use threads to get access to parallelism.

As a final thought, note that user-level threads alone cannot provide parallelism. Remember the quote from the beginning? Operating systems run programs inside a virtual computer (process) that is normally equipped with a single virtual processor (thread) by default. No matter what magic you do in user space, if your virtual computer has only a single virtual processor, you cannot run code in parallel.

So what do we want? Of course, we want parallelism. But we also want lightweight threads. Therefore, many implementers of the thread abstraction started to use a hybrid approach: They start as many kernel-level threads as there are processing units in the hardware and run many user-level threads on top of a few kernel-level threads. Essentially, parallelism is taken care of by the kernel-level and multitasking by the user-level threads.
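The shape of the hybrid approach can be sketched in Python as follows. This is a deliberately simplified illustration, not how any particular runtime works: the names `small_task`, `run_share` and `worker` are hypothetical, the user-level tasks are split statically among the kernel-level threads (real implementations typically balance the load dynamically), and in a standard CPython build the interpreter lock still limits how much of this actually runs in parallel.

```python
import asyncio
import os
import threading
from concurrent.futures import ThreadPoolExecutor

async def small_task(i):
    await asyncio.sleep(0)                  # a place where the task can be paused
    return i, threading.get_ident()         # remember which kernel thread ran it

async def run_share(ids):
    # Many user-level threads multitask on top of one kernel-level thread.
    return await asyncio.gather(*(small_task(i) for i in ids))

def worker(ids):
    return asyncio.run(run_share(ids))      # one event loop per kernel-level thread

n_kernel = os.cpu_count() or 1              # as many kernel threads as processing units
task_ids = list(range(100))
shares = [task_ids[k::n_kernel] for k in range(n_kernel)]

with ThreadPoolExecutor(max_workers=n_kernel) as pool:
    results = [pair for share in pool.map(worker, shares) for pair in share]

kernel_threads_used = {thread_id for _, thread_id in results}
```

A hundred user-level threads end up running on at most `n_kernel` kernel-level threads, which is the division of labor described above.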

Now, an interesting design decision is what threading interface a language exposes. Go, for example, provides a single interface that allows users to create hybrid threads, so-called goroutines. There is no way to ask for, say, just a single kernel-level thread in Go. Other languages have separate interfaces for different kinds of threads. In Rust, kernel-level threads live in the standard library, while user-level and hybrid threads can be found in external libraries like async-std and tokio. In Python, the asyncio package provides user-level threads while threading and multiprocessing provide kernel-level threads. Interestingly, in standard CPython builds the threads that threading provides cannot run Python code in parallel because of the global interpreter lock (GIL). On the other hand, the threads multiprocessing provides can run in parallel but, as the library's name suggests, each kernel-level thread lives in a different process (virtual computer). This makes multiprocessing unsuitable for certain tasks because transferring data between different virtual computers is often slow.

Further resources:

Operating Systems: Principles and Practice by Thomas Anderson and Michael Dahlin

Concurrency is not parallelism by Rob Pike

Parallelism and concurrency need different tools

Asynchronous Programming in Rust

Inside Rust's Async Transform

Rust's Journey to Async/Await

What Color is Your Function?

Why goroutines instead of threads?

Why doesn't my program run faster with more CPUs?

John Reese - Thinking Outside the GIL with AsyncIO and Multiprocessing - PyCon 2018