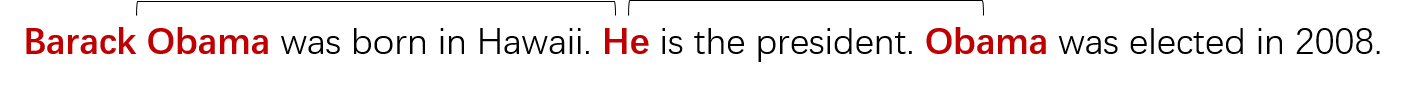

Stanford CoreNLP provides coreference resolution, as mentioned here. This thread and this one also give some insight into its implementation in Java.

However, I am using Python and NLTK, and I am not sure how to use CoreNLP's coreference resolution from my Python code. I have been able to set up the Stanford parser in NLTK; this is my code so far:

    from nltk.parse.stanford import StanfordDependencyParser

    stanford_parser_dir = 'stanford-parser/'
    eng_model_path = stanford_parser_dir + "stanford-parser-models/edu/stanford/nlp/models/lexparser/englishRNN.ser.gz"
    my_path_to_models_jar = stanford_parser_dir + "stanford-parser-3.5.2-models.jar"
    my_path_to_jar = stanford_parser_dir + "stanford-parser.jar"

How can I use CoreNLP's coreference resolution from Python?
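For context, one possible route is to skip the NLTK parser wrapper for this step and talk to a CoreNLP server over HTTP. The sketch below is only an illustration under several assumptions: it needs a full CoreNLP distribution that ships `StanfordCoreNLPServer` (not just the stanford-parser jars above), the server is assumed to be already running at the hypothetical address `http://localhost:9000`, and the helper names `annotate` and `coref_chains` are made up for this example. It relies on the server's JSON output exposing a `corefs` object whose mentions carry `text` and `isRepresentativeMention` fields.

```python
import json
import urllib.parse
import urllib.request

# Assumption: a CoreNLP server was started separately, for example with
#   java -mx4g -cp "*" edu.stanford.nlp.pipeline.StanfordCoreNLPServer -port 9000
CORENLP_URL = "http://localhost:9000"  # hypothetical address, adjust as needed


def annotate(text, url=CORENLP_URL):
    """POST raw text to the server and return the parsed JSON annotation."""
    props = {
        "annotators": "tokenize,ssplit,pos,lemma,ner,parse,coref",
        "outputFormat": "json",
    }
    # The server reads pipeline properties from the `properties` URL parameter.
    query = urllib.parse.urlencode({"properties": json.dumps(props)})
    request = urllib.request.Request(url + "/?" + query, data=text.encode("utf-8"))
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))


def coref_chains(annotation):
    """Return one list of mention texts per chain, representative mention first."""
    chains = []
    for mentions in annotation.get("corefs", {}).values():
        # sorted() is stable, so non-representative mentions keep their order.
        ordered = sorted(
            mentions, key=lambda m: not m.get("isRepresentativeMention", False)
        )
        chains.append([m["text"] for m in ordered])
    return chains
```

With a server running, `coref_chains(annotate("Barack Obama was born in Hawaii. He was elected president in 2008."))` would return the grouped mentions. The parsing step is kept separate from the HTTP call so it can be tried on a saved JSON response without a live server.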