I generate a new dataframe based on the following code:

from pyspark.sql.functions import split, regexp_extract

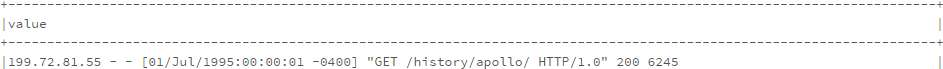

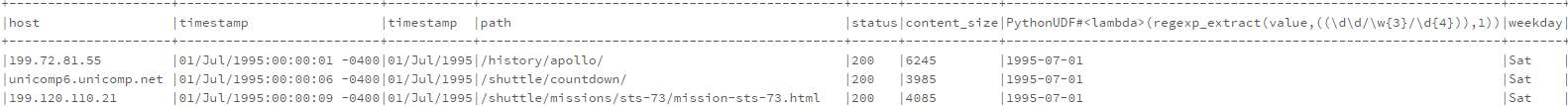

split_log_df = log_df.select(
    regexp_extract('value', r'^([^\s]+\s)', 1).alias('host'),
    regexp_extract('value', r'^.*\[(\d\d/\w{3}/\d{4}:\d{2}:\d{2}:\d{2} -\d{4})]', 1).alias('timestamp'),
    regexp_extract('value', r'^.*"\w+\s+([^\s]+)\s+HTTP.*"', 1).alias('path'),
    regexp_extract('value', r'^.*"\s+([^\s]+)', 1).cast('integer').alias('status'),
    regexp_extract('value', r'^.*\s+(\d+)$', 1).cast('integer').alias('content_size'))

split_log_df.show(10, truncate=False)

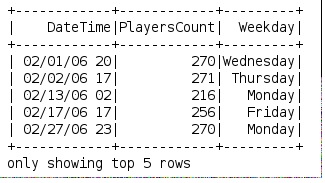

I need another column showing the day of the week. What would be the most elegant way to create it? Ideally I would just add a UDF-like field to the select.

Thank you very much.

Update: my question is different from the one in the comment. I need to make the calculation based on a string in log_df, not on a timestamp as in that question, so this is not a duplicate. Thanks.
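To make the goal concrete, here is a minimal sketch of the string-to-weekday step in plain Python, using `datetime.strptime`. It assumes the extracted string looks like `01/Aug/1995:00:00:01 -0400` (a hypothetical sample in the format the `timestamp` regex captures); the function name `day_of_week` and the `WEEKDAYS` list are illustrative, not part of the original code.

```python
from datetime import datetime

# Weekday names indexed by datetime.weekday() (Monday == 0); a fixed list
# keeps the output independent of the system locale.
WEEKDAYS = ['Monday', 'Tuesday', 'Wednesday', 'Thursday',
            'Friday', 'Saturday', 'Sunday']


def day_of_week(ts):
    """Return the weekday name for a string such as '01/Aug/1995:00:00:01 -0400'.

    regexp_extract yields '' for lines that do not match, so empty or
    malformed input maps to None instead of raising.
    """
    try:
        parsed = datetime.strptime(ts, '%d/%b/%Y:%H:%M:%S %z')
    except (TypeError, ValueError):
        return None
    return WEEKDAYS[parsed.weekday()]


# Hypothetical sample value in the format captured by the 'timestamp' regex.
print(day_of_week('01/Aug/1995:00:00:01 -0400'))  # Tuesday
print(day_of_week(''))                            # None
```

To use this inside the select, the function could presumably be wrapped with `udf(day_of_week, StringType())` (from `pyspark.sql.functions` and `pyspark.sql.types`) and applied to the same `regexp_extract(...)` expression used for `timestamp`, followed by `.alias('dayofweek')`. Spark's built-in `to_timestamp` and `date_format` might avoid the Python UDF overhead, though the pattern string would need checking against the Spark version in use.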

datetime module and parse out the timestamp column. – Berners

datetime.strptime for – Berners