Short version

I would like to know the technical reasons why Docker images need to be created for multiple architectures. Also, it is not clear to me whether the point is to create an image for each CPU architecture or for each OS. Shouldn't the OS abstract the architecture?

Long version

I can understand why the Docker Engine must be ported to multiple architectures. It is a piece of software that will interact with the OS, make system calls, and ultimately it is just code that is represented as a sequence of instructions within a particular instruction set, for a particular architecture. So the Docker Engine must be ported to multiple OS/architectures much like, let's say, Microsoft Word would have to be ported.

The same would apply to, say, the JVM or VirtualBox.

But, unlike with Docker, software written for the JVM on Windows would run on Linux. The JVM abstracts the differences between the underlying OSes/architectures and runs the same code on both platforms.

Why isn't that the case with Docker images? Why can't the Docker Engine just abstract the differences, and provide a common interface, so the image itself wouldn't need to be compatible with a specific OS/architecture?

Is this a decision (like "let's make different images per architecture because it is better for reason X"), or a consequence of how Docker works (like "we need to do it this way because Docker requires Y")?

Note

- I'm not crying "omg, why??". This is not a rant or criticism; I'm just looking for a technical explanation of the need for different images for different architectures.

- I'm not asking how to create a multi-architecture image.

- I'm not looking for an answer like "multi-architecture images are needed so you can run your images on various platforms", which answers "what for?", but not "why is that needed?" (which is my question).

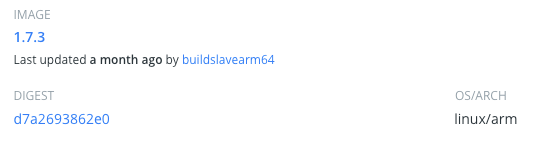

Besides that, when you see an image, it usually has an os/arch in the digest, like this:

What exactly is the image targeting? The OS, the architecture, or both? Shouldn't the OS abstract the underlying architecture?
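To make the os/arch pairing concrete: as far as I understand, a multi-architecture tag points to an index that lists one manifest per platform, and the OCI image index format records this as `manifests[].platform.os` and `manifests[].platform.architecture`. Below is a minimal Python sketch of such a lookup. The index contents, the placeholder digests, and the `select_manifest` helper are all made up for illustration; this is not Docker's actual code.

```python
# Hypothetical, trimmed-down image index; digests are placeholders, not real values.
IMAGE_INDEX = {
    "manifests": [
        {"digest": "sha256:aaa", "platform": {"os": "linux", "architecture": "amd64"}},
        {"digest": "sha256:bbb", "platform": {"os": "linux", "architecture": "arm64"}},
        {"digest": "sha256:ccc", "platform": {"os": "windows", "architecture": "amd64"}},
    ]
}


def select_manifest(index, os_name, arch):
    """Return the digest of the entry matching both OS and architecture, or None."""
    for entry in index["manifests"]:
        platform = entry["platform"]
        if platform["os"] == os_name and platform["architecture"] == arch:
            return entry["digest"]
    return None


if __name__ == "__main__":
    print(select_manifest(IMAGE_INDEX, "linux", "arm64"))
```

In this sketch the lookup key is the pair (OS, architecture), which is exactly what prompts my question about which of the two the image is really tied to.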

edit: I'm starting to assume that the need for different images per architecture is along the lines of: the image will contain applications inside it. Let's say it contains the Go compiler. The Go compiler itself is a binary that must have been compiled for each architecture. The image for x86-64 will contain the Go compiler compiled for x86-64, and so on. Is this correct? If so, is this the only reason?
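A sketch of what I mean by a binary being tied to an architecture: on Linux, executables are ELF files, and the ELF header carries an `e_machine` field naming the target CPU (for example `0x3E` for x86-64 and `0xB7` for AArch64). The Python below reads that field. The `elf_machine` and `fake_header` helpers are hypothetical names, and the header is synthetic so the example runs anywhere.

```python
import struct

# e_machine values from the ELF specification (only two shown here).
ELF_MACHINES = {0x3E: "x86-64", 0xB7: "AArch64"}


def elf_machine(header: bytes) -> str:
    """Return the target architecture named in an ELF header."""
    if header[:4] != b"\x7fELF":
        raise ValueError("not an ELF file")
    # Index 5 (EI_DATA) gives the byte order: 1 = little-endian, 2 = big-endian.
    byte_order = "<" if header[5] == 1 else ">"
    # e_machine is a 16-bit field at offset 18.
    (machine,) = struct.unpack_from(byte_order + "H", header, 18)
    return ELF_MACHINES.get(machine, f"unknown (0x{machine:x})")


def fake_header(machine: int) -> bytes:
    """Build a synthetic 20-byte little-endian ELF header prefix for demonstration."""
    ident = b"\x7fELF" + bytes([2, 1, 1]) + bytes(9)  # 64-bit, little-endian, version 1
    return ident + struct.pack("<HH", 2, machine)  # e_type = 2 (executable), e_machine


if __name__ == "__main__":
    print(elf_machine(fake_header(0x3E)))
    print(elf_machine(fake_header(0xB7)))
```

On a real system, one could pass the first 20 bytes of an actual binary instead of the synthetic header; presumably the Go compiler inside an x86-64 image and the one inside an arm64 image would report different values.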