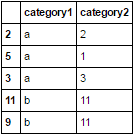

Say I have a dataframe like this:

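| category1 | category2 | other_col | another_col | ... |
|---|---|---|---|---|
| a | 1 | | | |
| a | 2 | | | |
| a | 2 | | | |
| a | 3 | | | |
| a | 3 | | | |
| a | 1 | | | |
| b | 10 | | | |
| b | 10 | | | |
| b | 10 | | | |
| b | 11 | | | |
| b | 11 | | | |
| b | 11 | | | |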
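To experiment with this, the example frame can be rebuilt as below (other_col and another_col are left out as an assumption, since their values aren't given). The per-group `sample` call is a sketch of the part pandas already covers: it draws the same number of rows from each category1 value, but it does nothing to spread category2, so a draw like 10, 10 inside `b` is still possible. `DataFrameGroupBy.sample` needs pandas 1.1 or later and, without `replace=True`, every group must have at least `n` rows.

```python
import pandas as pd

# The example frame from the question (other_col/another_col omitted).
df = pd.DataFrame(
    {
        "category1": ["a"] * 6 + ["b"] * 6,
        "category2": [1, 2, 2, 3, 3, 1, 10, 10, 10, 11, 11, 11],
    }
)

# Equal rows per category1 value: 2 from "a" and 2 from "b".
# This balances category1 only; category2 values may still repeat.
per_group = df.groupby("category1").sample(n=2, random_state=0)
print(per_group)
```

Note that a fixed per-group `n` also can't express "5 rows split 3/2", which the desired output requires.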

I want to obtain a sample from my dataframe in which each value of category1 appears a uniform number of times. I'm assuming that each value of category1 occurs the same number of times in the dataframe. I know that this can be done in pandas using `DataFrame.sample()`. However, I also want to ensure that the sample I select has category2 equally represented as well. So, for example, if I have a sample size of 5, I would want something such as:

| category1 | category2 |
|---|---|
| a | 1 |
| a | 2 |
| b | 10 |
| b | 11 |
| b | 10 |

I would not want something such as:

| category1 | category2 |
|---|---|
| a | 1 |
| a | 1 |
| b | 10 |
| b | 10 |
| b | 10 |

While this is a valid random sample of n=5, it would not meet my requirements, as I want to vary the values of category2 as much as possible.

Notice that in the first example, because a was only sampled twice, the value 3 from category2 was not represented. This is okay. The goal is simply to represent the data as uniformly as possible.
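One way to get this behaviour is round-robin ranking, sketched below under the assumption that the columns are named `category1` and `category2` as in the question; `balanced_sample` is a hypothetical helper, not a pandas API. The rows are shuffled once, each row is ranked within its (category1, category2) pair, the rows of each category1 group are ordered so that every distinct category2 value comes before any repeat, and finally rows are dealt out across category1 groups one rank at a time. Taking the first `n` rows of that order keeps category1 counts within one of each other and, inside each category1 group, spreads the picks over category2 before repeating any value.

```python
import pandas as pd


def balanced_sample(df, n, outer="category1", inner="category2", seed=None):
    """Hypothetical helper: draw n rows spread as evenly as possible over
    `outer`, and within each outer group over `inner`."""
    # 1. Shuffle once; every tie below is broken by this random order.
    shuffled = df.sample(frac=1, random_state=seed)

    # 2. Rank rows inside each (outer, inner) pair: 0 for the pair's first
    #    row, 1 for its second row, and so on.
    shuffled = shuffled.assign(_inner_rank=shuffled.groupby([outer, inner]).cumcount())

    # 3. Order by that rank (stable sort), so inside each outer group every
    #    distinct inner value comes before any repeat, then number the rows
    #    of each outer group in that order.
    shuffled = shuffled.sort_values("_inner_rank", kind="mergesort")
    shuffled = shuffled.assign(_outer_rank=shuffled.groupby(outer).cumcount())

    # 4. Deal rows round-robin across outer groups: rank 0 of every group,
    #    then rank 1, ... The stable sort keeps the step-3 order for ties.
    picked = shuffled.sort_values("_outer_rank", kind="mergesort").head(n)
    return picked.drop(columns=["_inner_rank", "_outer_rank"])


df = pd.DataFrame(
    {
        "category1": ["a"] * 6 + ["b"] * 6,
        "category2": [1, 2, 2, 3, 3, 1, 10, 10, 10, 11, 11, 11],
    }
)
sample = balanced_sample(df, 5, seed=0)
print(sample)
```

A side effect of the tie-breaking in step 4: when `n` doesn't divide evenly, the extra row goes preferentially to a group whose next row introduces a category2 value not yet in the sample. On this data that means `a` gets 3 rows (1, 2 and 3) and `b` gets 2 (10 and 11).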

If it helps to provide a clearer example, think of the categories fruit, vegetables, meat, grains, and junk. In a sample size of 10, I would want to represent each category as much as possible, so ideally 2 of each. Then, within each of those categories, the selected rows would also have their subcategories represented as uniformly as possible. For example, fruit could have the subcategories red_fruits, yellow_fruits, etc. For the 2 fruit rows selected out of the 10, red_fruits and yellow_fruits would both be represented. Of course, with a larger sample size, we would include more of the subcategories of fruit (green_fruits, blue_fruits, etc.).
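For deeper hierarchies like this one, it can help to first decide how many rows each category and subcategory should contribute, and only then draw them. The sketch below does that with "water-filling": the budget is split as evenly as possible, groups that are too small take everything they have, and their unused share rolls over to the others; the same split is then applied recursively inside each group. The helper names (`fair_split`, `allocate`) and the row counts in `counts` are hypothetical, made up for illustration.

```python
def fair_split(capacities, n):
    """Split n across groups as evenly as possible without exceeding any
    group's capacity (water-filling). Returns {group: count}."""
    quotas = {}
    remaining = n
    # Fill the smallest groups first so their unused share rolls over.
    order = sorted(capacities, key=lambda g: (capacities[g], str(g)))
    for i, group in enumerate(order):
        share = remaining // (len(order) - i)
        quotas[group] = min(capacities[group], share)
        remaining -= quotas[group]
    return quotas


def total(node):
    """Number of rows under a node (leaves are plain row counts)."""
    return node if isinstance(node, int) else sum(total(c) for c in node.values())


def allocate(tree, n):
    """Recursively split n over a nested {category: {subcategory: rows}} tree."""
    if isinstance(tree, int):
        return n  # leaf: rows to draw from this subcategory
    quotas = fair_split({k: total(v) for k, v in tree.items()}, n)
    return {k: allocate(v, quotas[k]) for k, v in tree.items()}


# Hypothetical row counts per (category, subcategory).
counts = {
    "fruit": {"red_fruits": 4, "yellow_fruits": 3, "green_fruits": 2},
    "vegetables": {"leafy": 5, "root": 5},
    "meat": {"beef": 3, "chicken": 6},
    "grains": {"rice": 4, "wheat": 4},
    "junk": {"chips": 2, "candy": 7},
}
plan = allocate(counts, 10)
print(plan)
```

Each leaf count in `plan` could then be drawn with `DataFrame.sample(n=...)` on the matching subset of rows. Ties between equally sized groups are broken here by name, which is deterministic; shuffling the group order instead would make the choice of which subcategory gets the extra row random.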

`df.drop_duplicates(subset=['category1','category2']).sample(n=4)`? – Uncharitable

`df.reindex(np.random.permutation(df.index)).drop_duplicates(subset=['category1','category2']).sample(n=4)`, but even that's a problem: what if the sample size is such that, say, 2 samples are needed from some pair? The `drop_duplicates` makes this impossible. – Beiderbecke
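A minimal sketch of a way around the `drop_duplicates` limitation: instead of discarding repeated (category1, category2) pairs, rank them. After a shuffle, `cumcount` gives each row its occurrence number within its pair, and a stable sort on that number puts one row of every distinct pair first, then the second rows, and so on, so taking the first `n` rows only repeats a pair once all pairs have been used. `spread_over_pairs` is a hypothetical helper name. It balances pairs, not category1 itself: a category1 value with more distinct category2 values can dominate a small sample (with `n=2` here, both rows may come from `a`).

```python
import pandas as pd


def spread_over_pairs(df, n, cols=("category1", "category2"), seed=None):
    """Hypothetical helper: like shuffle + drop_duplicates, but repeats a
    pair once every distinct pair has already been used."""
    shuffled = df.sample(frac=1, random_state=seed)
    # 0 for the first row of each pair, 1 for the second, and so on.
    rank = shuffled.groupby(list(cols)).cumcount()
    # Stable sort: all rank-0 rows (one per distinct pair) come first, in
    # shuffled order, then all rank-1 rows, etc.
    ordered = shuffled.assign(_rank=rank).sort_values("_rank", kind="mergesort")
    return ordered.head(n).drop(columns="_rank")


df = pd.DataFrame(
    {
        "category1": ["a"] * 6 + ["b"] * 6,
        "category2": [1, 2, 2, 3, 3, 1, 10, 10, 10, 11, 11, 11],
    }
)
first5 = spread_over_pairs(df, 5, seed=1)  # every pair exactly once
first7 = spread_over_pairs(df, 7, seed=1)  # two pairs appear twice
print(first7)
```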