A preliminary remark: with Knuth-style literate programming (i.e. when reading WEB or CWEB programs) the “real” program, as conceived by Knuth, is neither the “source” .w file nor the generated (tangled) .c file, but the typeset (woven) output. The source .w file is best thought of as a means to produce it (and of course also the .c source that's fed to the compiler). (If you don't have cweave and TeX handy; I've typeset some of these programs here; this program DLX1 is here.)

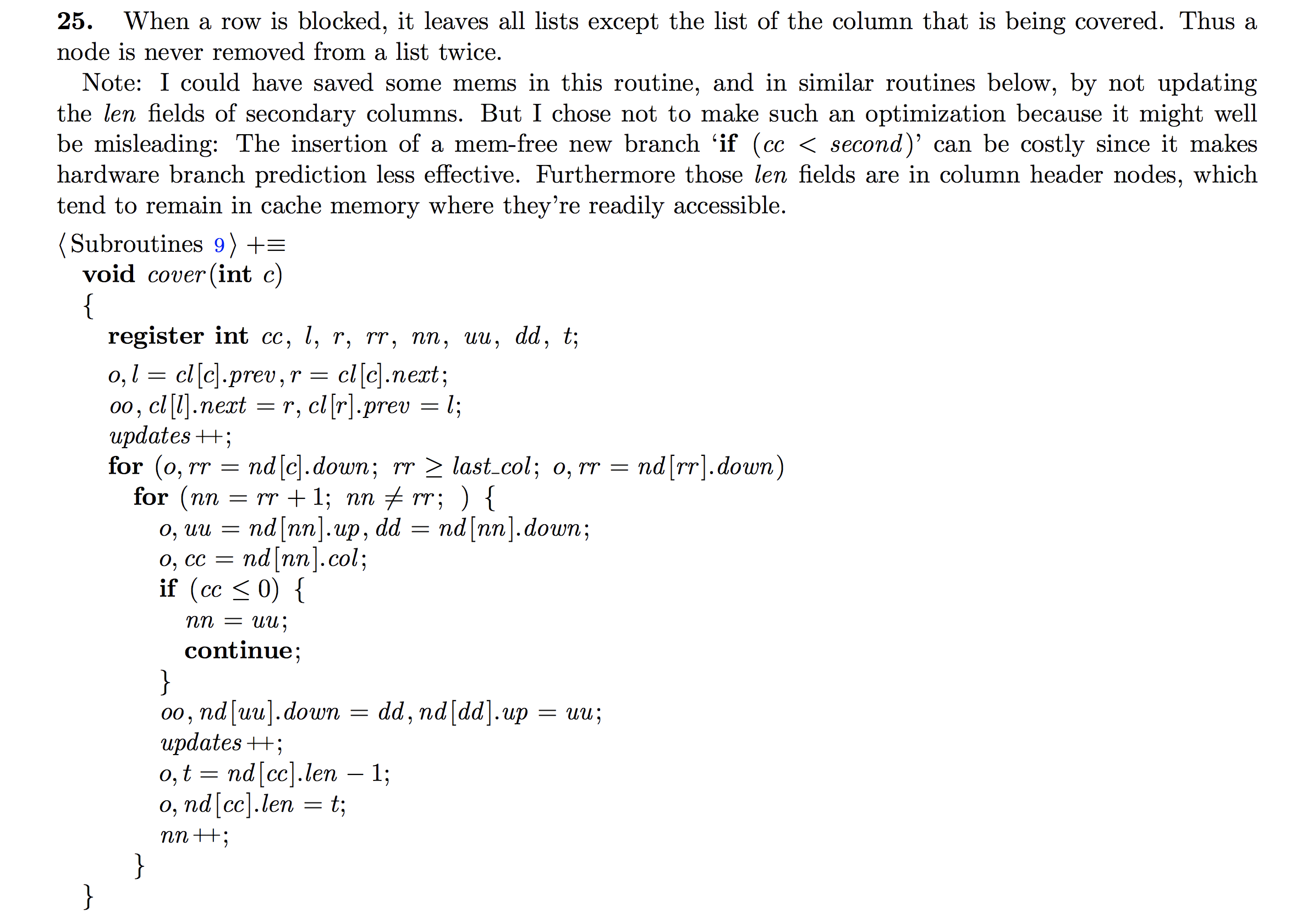

So in this case, I'd describe the location in the code as module 25 of DLX1, or subroutine "cover":

![question]()

Anyway, to return to the actual question: note that this (DLX1) is one of the programs written for The Art of Computer Programming. Because reporting the time taken by a program “seconds” or “minutes” becomes meaningless from year to year, he reports how long a program took in number of “mems” plus “oops”, that's dominated by the “mems”, i.e. the number of memory accesses to 64-bit words (usually). So the book contains statements like “this program finds the answer to this problem in 3.5 gigamems of running time”. Further, the statements are intended to be fundamentally about the program/algorithm itself, not the specific code generated by a specific version of a compiler for certain hardware. (Ideally when the details are very important he writes the program in MMIX or MMIXAL and analyses its operations on the MMIX hardware, but this is rare.) Counting the mems (to be reported as above) is the purpose of inserting o and oo instructions into the program. Note that it's more important to get this right for the “inner loop” instructions that are executed a lot of times, such as everything in the subroutine cover in this case.

This is elaborated in Section 1.3.1′ (part of Fascicle 1):

Timing. […] The running time of a program depends not only on the clock rate but also on the number of functional units that can be active simultaneously and the degree to which they are pipelined; it depends on the techniques used to prefetch instructions before they are executed; it depends on the size of the random-access memory that is used to give the illusion of 264 virtual bytes; and it depends on the sizes and allocation strategies of caches and other buffers, etc., etc.

For practical purposes, the running time of an MMIX program can often be estimated satisfactorily by assigning a fixed cost to each operation, based on the approximate running time that would be obtained on a high-performance machine with lots of main memory; so that’s what we will do. Each operation will be assumed to take an integer number of υ, where υ (pronounced “oops”) is a unit that represents the clock cycle time in a pipelined implementation. Although the value of υ decreases as technology improves, we always keep up with the latest advances because we measure time in units of υ, not in nanoseconds. The running time in our estimates will also be assumed to depend on the number of memory references or mems that a program uses; this is the number of load and store instructions. For example, we will assume that each LDO (load octa) instruction costs µ + υ, where µ is the average cost of a memory reference. The total running time of a program might be reported as, say, 35µ+ 1000υ, meaning “35 mems plus 1000 oops.” The ratio µ/υ has been increasing steadily for many years; nobody knows for sure whether this trend will continue, but experience has shown that µ and υ deserve to be considered independently.

And he does of course understand the difference from reality:

Even though we will often use the assumptions of Table 1 for seat-of-the-pants estimates of running time, we must remember that the actual running time might be quite sensitive to the ordering of instructions. For example, integer division might cost only one cycle if we can find 60 other things to do between the time we issue the command and the time we need the result. Several LDB (load byte) instructions might need to reference memory only once, if they refer to the same octabyte. Yet the result of a load command is usually not ready for use in the immediately following instruction. Experience has shown that some algorithms work well with cache memory, and others do not; therefore µ is not really constant. Even the location of instructions in memory can have a significant effect on performance, because some instructions can be fetched together with others. […] Only the meta-simulator can be trusted to give reliable information about a program’s actual behavior in practice; but such results can be difficult to interpret, because infinitely many configurations are possible. That’s why we often resort to the much simpler estimates of Table 1.

Finally, we can use Godbolt's Compiler Explorer to look at the code generated by a typical compiler for this code. (Ideally we'd look at MMIX instructions but as we can't do that, let's settle for the default there, which seems to be x68-64 gcc 8.2.) I removed all the os and oos.

For the version of the code with:

/*o*/ t = nd[cc].len - 1;

/*o*/ nd[cc].len = t;

the generated code for the first line is:

movsx rax, r13d

sal rax, 4

add rax, OFFSET FLAT:nd+8

mov eax, DWORD PTR [rax]

lea r14d, [rax-1]

and for the second line is:

movsx rax, r13d

sal rax, 4

add rax, OFFSET FLAT:nd+8

mov DWORD PTR [rax], r14d

For the version of the code with:

/*o ?*/ nd[cc].len --;

the generated code is:

movsx rax, r13d

sal rax, 4

add rax, OFFSET FLAT:nd+8

mov eax, DWORD PTR [rax]

lea edx, [rax-1]

movsx rax, r13d

sal rax, 4

add rax, OFFSET FLAT:nd+8

mov DWORD PTR [rax], edx

which as you can see (even without knowing much about x86-64 assembly) is simply the concatenation of the code generated in the former case (except for using register edx instead of r14d), so it's not as if writing the decrement in one line has saved you any mems. In particular, it would not be correct to count it as a single one, especially in something like cover that is called a huge number of times in this algorithm (dancing links for exact cover).

So the version as written by Knuth is correct, for its goal of counting the number of mems. He could also write oo,nd[cc].len--; (counting two mems) as you observed, but perhaps it might look like a bug at first glance in that case. (BTW, in your example in the question of oo,nd[k].len--,nd[k].aux=i-1; the two mems come from the load and the store in --; not two stores.)

o,t,nd,cc,aux. It's incomprehensible. – Dynode