Questions about Spark memory overhead have been asked multiple times on SO, and I went through most of them. However, after reading multiple blogs, I am still confused.

Below are my questions:

- Is memory overhead part of the executor memory, or is it separate? Some blogs say memory overhead is part of the executor memory, while others describe the total as executor memory + memory overhead (does that mean memory overhead is not part of the executor memory?).

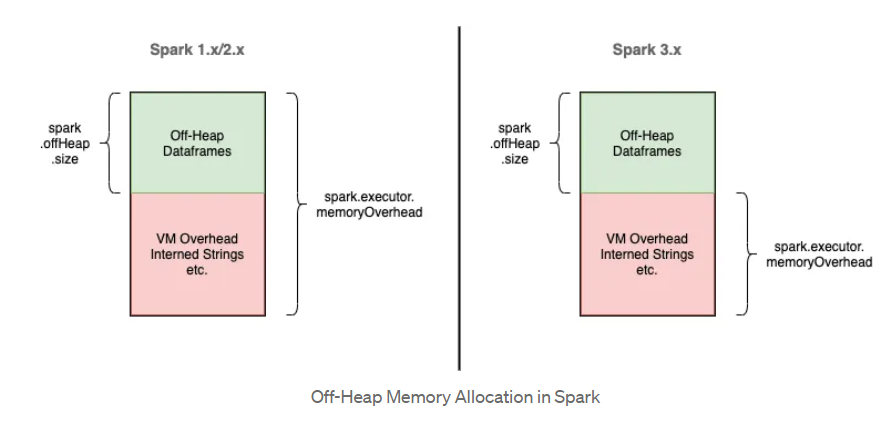

- Are memory overhead and off-heap memory the same thing?

- What happens if I don't specify the overhead in spark-submit? Will it use the default of 18.75%, or not?

- Will there be any side effects if we give more memory overhead than the default value?

https://docs.qubole.com/en/latest/user-guide/engines/spark/defaults-executors.html

https://spoddutur.github.io/spark-notes/distribution_of_executors_cores_and_memory_for_spark_application.html

Below is the case I want to understand. I have 5 nodes, each with 16 vcores and 128GB of memory (of which 120GB is usable). I want to submit a Spark application, and below are the configurations I am considering.

Total cores: 16 * 5 = 80

Total memory: 120GB * 5 = 600GB
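To show where the executor count in the configurations below comes from, here is a minimal sketch of the arithmetic. It assumes 5 cores per executor (the `spark.executor.cores` value used in both cases) and that one 5-core slot is set aside for the AM/driver; the variable names are only illustrative.

```python
# Cluster figures from the question; 5 cores per executor is taken from the configs below.
NODES = 5
VCORES_PER_NODE = 16
USABLE_GB_PER_NODE = 120
CORES_PER_EXECUTOR = 5

total_cores = NODES * VCORES_PER_NODE          # 16 * 5
total_usable_gb = NODES * USABLE_GB_PER_NODE   # 120 * 5

# Whole 5-core containers that fit on one node (one vcore per node stays unused).
slots_per_node = VCORES_PER_NODE // CORES_PER_EXECUTOR
total_slots = NODES * slots_per_node

# Assumption: one slot is reserved for the application master / driver.
executor_instances = total_slots - 1

print(total_cores, total_usable_gb, slots_per_node, executor_instances)
```

Under these assumptions, each node holds 3 containers, which gives 15 slots in total and 14 executors.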

Case 1: Memory overhead is part of the executor memory

spark.executor.memory=32G

spark.executor.cores=5

spark.executor.instances=14 (1 for AM)

spark.executor.memoryOverhead=8G (more than the 18.75% default)

spark.driver.memoryOverhead=8G

spark.driver.cores=5

Case 2: Memory overhead is not part of the executor memory

spark.executor.memory=28G

spark.executor.cores=5

spark.executor.instances=14 (1 for AM)

spark.executor.memoryOverhead=6G (more than the 18.75% default)

spark.driver.memoryOverhead=6G

spark.driver.cores=5
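To compare the two cases, here is a rough sketch of the per-node memory each one would request. It does not settle which interpretation is correct; it only works out the numbers for both. It assumes 3 containers per node (14 executors plus the driver spread evenly over 5 nodes) and that the driver container is the same size as an executor container, since `spark.driver.memory` is not set above. The helper `per_node_gb` is hypothetical.

```python
USABLE_GB_PER_NODE = 120
# Assumption: 15 containers (14 executors + 1 driver) spread evenly over 5 nodes,
# with the driver container sized like an executor container.
CONTAINERS_PER_NODE = 3

def per_node_gb(executor_memory_gb, overhead_gb, overhead_is_separate):
    """Memory requested per node under one reading of memoryOverhead."""
    container_gb = executor_memory_gb + (overhead_gb if overhead_is_separate else 0)
    return CONTAINERS_PER_NODE * container_gb

case1 = per_node_gb(32, 8, overhead_is_separate=False)  # overhead counted inside the 32G
case2 = per_node_gb(28, 6, overhead_is_separate=True)   # overhead added on top of the 28G

for name, gb in (("case 1", case1), ("case 2", case2)):
    print(f"{name}: {gb} GB per node, {gb / USABLE_GB_PER_NODE:.0%} of usable memory")
```

With these assumptions, Case 1 comes to 96GB per node (80%) and Case 2 to 102GB per node (85%). If the Case 1 values were instead added together (32G + 8G per container), the same 3 containers would request 120GB, which is all of the usable memory on a node.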

As per the video below, I'm trying to use 85% of each node, i.e. around 100GB out of 120GB. I'm not sure whether we can use more than that.

https://www.youtube.com/watch?v=ph_2xwVjCGs&list=PLdqfPU6gm4b9bJEb7crUwdkpprPLseCOB&index=8&t=1281s (4:12)