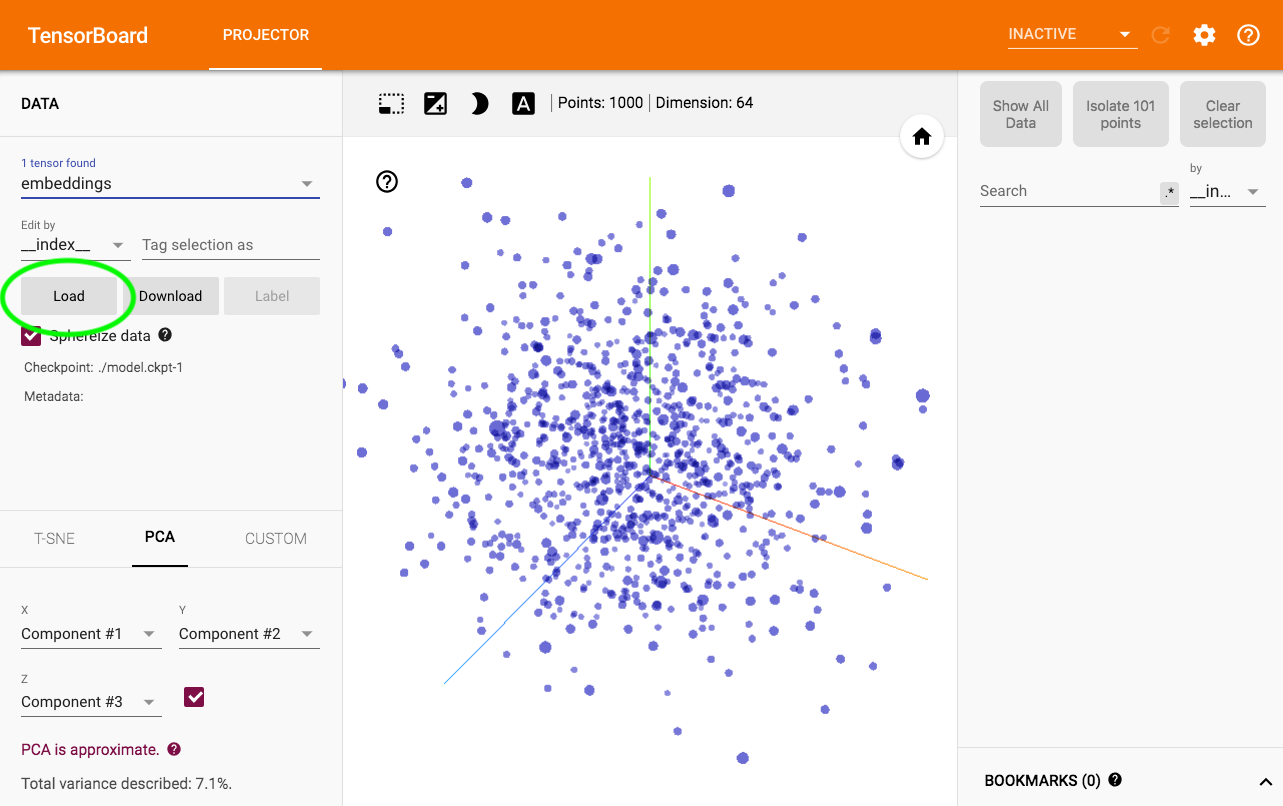

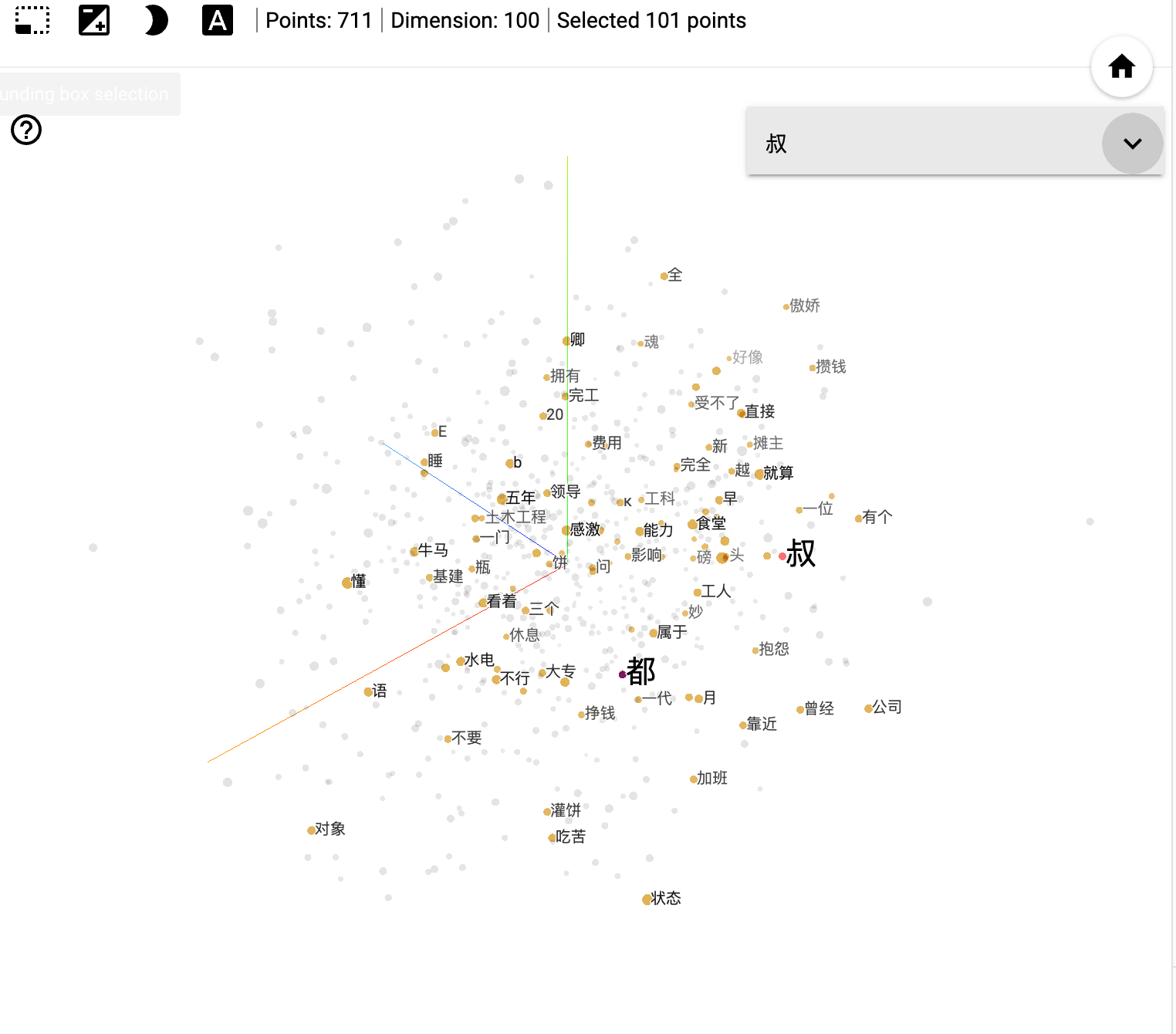

I've only seen a few questions that ask this, and none of them have an answer yet, so I thought I might as well try. I've been using gensim's word2vec model to create some vectors. I exported them to a text file and tried importing that file into TensorFlow's live demo of the Embedding Projector. One problem. It didn't work. It told me that the tensors were improperly formatted. So, being a beginner, I thought I would ask some people with more experience about possible solutions.

Equivalent to my code:

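    import gensim

    corpus = [["words", "in", "sentence", "one"], ["words", "in", "sentence", "two"]]

    # min_count=1 keeps every word of this tiny corpus (the default of 5 would drop them all)
    model = gensim.models.Word2Vec(iter=5, size=64, min_count=1)
    model.build_vocab(corpus)
    model.train(corpus, total_examples=model.corpus_count, epochs=model.iter)

    # save memory: keep only the word vectors and drop the full model
    vectors = model.wv
    del model

    # write the vectors as plain text in the word2vec format
    vectors.save_word2vec_format("vect.txt", binary=False)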

That creates the model, saves the vectors, and then writes the results to a plain-text file with one word per line, followed by its values for all of the dimensions. I understand how to do what I'm doing; I just can't figure out what's wrong with the way I put it into TensorFlow, as the documentation on that is pretty scarce as far as I can tell.
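For reference, the word2vec text format written by `save_word2vec_format` starts with a header line (vocabulary size and dimension) and then puts each word and its values on one space-separated line, whereas the projector's upload dialog asks for a tab-separated file of vectors only, plus an optional separate metadata file of labels. A minimal sketch of a converter, assuming that mismatch is what triggers the formatting error; the function name and output file names are hypothetical:

```python
def word2vec_text_to_projector_tsv(src_path, vectors_path, metadata_path):
    """Split a word2vec text file into a vectors TSV and a labels file.

    Hypothetical helper: returns the number of vectors written.
    """
    count = 0
    with open(src_path, encoding="utf-8") as src, \
         open(vectors_path, "w", encoding="utf-8") as vec_out, \
         open(metadata_path, "w", encoding="utf-8") as meta_out:
        # First line is the header: "<vocab_size> <dimension>"
        dim = int(src.readline().split()[1])
        for line in src:
            parts = line.rstrip().split(" ")
            if len(parts) < dim + 1:
                continue  # skip blank or malformed lines
            word = " ".join(parts[:-dim])  # everything before the last `dim` values
            values = parts[-dim:]
            vec_out.write("\t".join(values) + "\n")  # tab-separated, no label
            meta_out.write(word + "\n")              # one label per line, no header
            count += 1
    return count

# Example call (assumes vect.txt was produced as in the snippet above):
# word2vec_text_to_projector_tsv("vect.txt", "vectors.tsv", "metadata.tsv")
```

The two output files would then be loaded in the projector's two upload steps: the vectors file first, the metadata file second.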

One idea that has been suggested to me is writing the appropriate TensorFlow code, but I don't know how to code that; I only know how to import files into the live demo.

Edit: I have a new problem now. The object I have my vectors in is not iterable, because gensim apparently uses its own data structures, which are incompatible with what I'm trying to do.
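One way around this, sketched under the assumption that the object is a gensim `KeyedVectors` instance: it keeps an ordered word list (`index2word` in gensim 3.x, `index_to_key` in gensim 4.x) and supports lookup by word, so a small generator can yield `(word, vector)` pairs. The helper name `iter_word_vectors` is hypothetical:

```python
def iter_word_vectors(keyed_vectors):
    """Yield (word, vector) pairs from a KeyedVectors-like object."""
    # gensim 4.x exposes the vocabulary as index_to_key; 3.x used index2word
    words = getattr(keyed_vectors, "index_to_key", None)
    if words is None:
        words = keyed_vectors.index2word
    for word in words:
        yield word, keyed_vectors[word]  # lookup by word returns its vector

# Example (assumes `vectors` is the model.wv object from the snippet above):
# for word, vec in iter_word_vectors(vectors):
#     print(word, len(vec))
```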

Ok. Done with that too! Thanks for your help!