I have a dataset containing workers with their demographic information like age gender,address etc and their work locations. I created an RDD from the dataset and converted it into a DataFrame.

There are multiple entries for each ID. Hence, I created a DataFrame which contained only the ID of the worker and the various office locations' that he/she had worked.

|----------|----------------|

| **ID** **Office_Loc** |

|----------|----------------|

| 1 |Delhi, Mumbai, |

| | Gandhinagar |

|---------------------------|

| 2 | Delhi, Mandi |

|---------------------------|

| 3 |Hyderbad, Jaipur|

-----------------------------

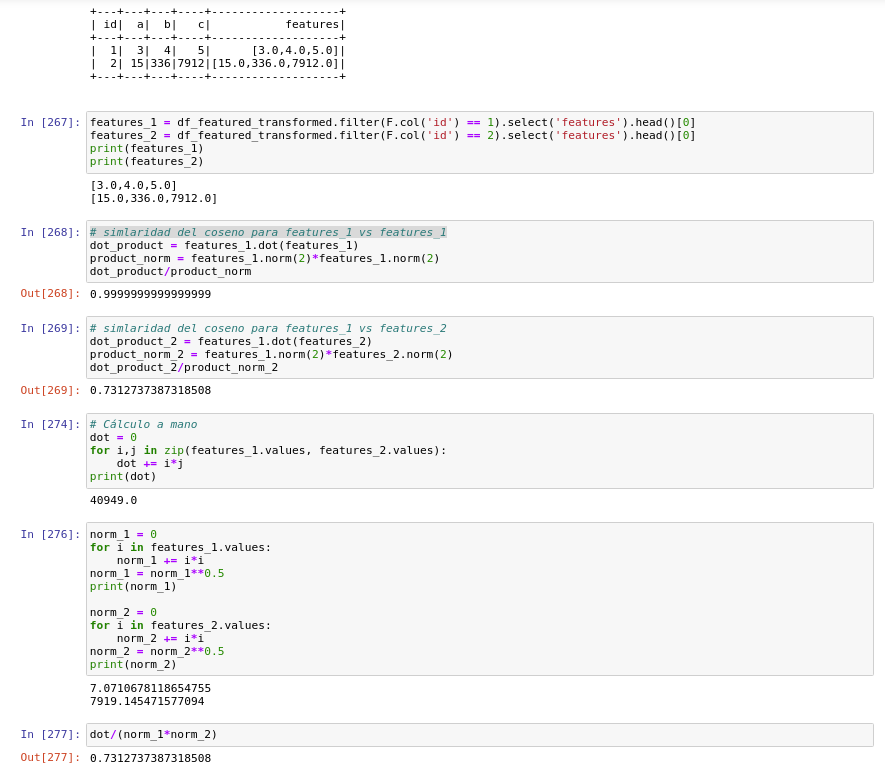

I want to calculate the cosine similarity between each worker with every other worker based on their office locations'.

So, I iterated through the rows of the DataFrame, retrieving a single row from the DataFrame :

myIndex = 1

values = (ID_place_df.rdd.zipWithIndex()

.filter(lambda ((l, v), i): i == myIndex)

.map(lambda ((l,v), i): (l, v))

.collect())

and then using map

cos_weight = ID_place_df.select("ID","office_location").rdd\

.map(lambda x: get_cosine(values,x[0],x[1]))

to calculated the cosine similarity between the extracted row and the whole DataFrame.

I do not think my approach is a good one since I am iterating through the rows of the DataFrame, it defeats the whole purpose of using spark. Is there a better way to do it in pyspark? Kindly advise.