I'm getting following error when I try to use one of the huggingface models for sentimental analysis:

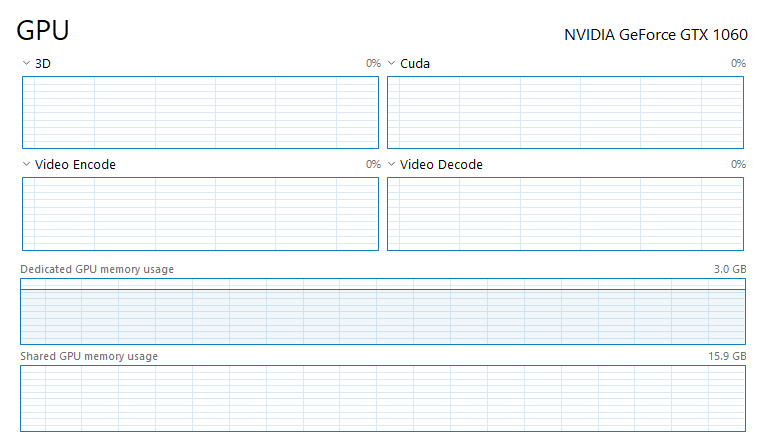

RuntimeError: CUDA out of memory. Tried to allocate 72.00 MiB (GPU 0; 3.00 GiB total capacity; 1.84 GiB already allocated; 5.45 MiB free; 2.04 GiB reserved in total by PyTorch)

Although I'm not using the CUDA memory it is still staying on the same level.

I tried to use torch.cuda.empty_cache() however it didn't affect the problem. When I closed the jupyter notebook it decreases to 0. So I am well sure that it is something with pytorch and python.

Here is my code:

import joblib

import torch

from transformers import AutoTokenizer, AutoModelForSequenceClassification,pipeline

import torch.nn.functional as F

from torch.utils.data import DataLoader

import pandas as pd

import numpy as np

from tqdm import tqdm

tokenizer = AutoTokenizer.from_pretrained("savasy/bert-base-turkish-sentiment-cased")

model = AutoModelForSequenceClassification.from_pretrained("savasy/bert-base-turkish-sentiment-cased")

sa= pipeline("sentiment-analysis", tokenizer=tokenizer, model=model,device=0)

batcher = DataLoader(dataset=comments,

batch_size=100,

shuffle=True,

pin_memory=True)

predictions= []

for batch in tqdm(batcher):

p = sa(batch)

predictions.append(p)

I have a GTX 1060, python 3.8 and torch==1.7.1 and my os is Windows 10. And the count of comments are 187K. I would like to know if there is any work around for this memory issue. Maybe holding tensors somehow on CPU and only use batch on GPU. After using and getting this error the memory usage still continues. When I close my jupyter notebook it goes away. Is there any way that I can clear this memory ? Is there any way I can utilize Shared GPU memory ?