I found the answer myself.

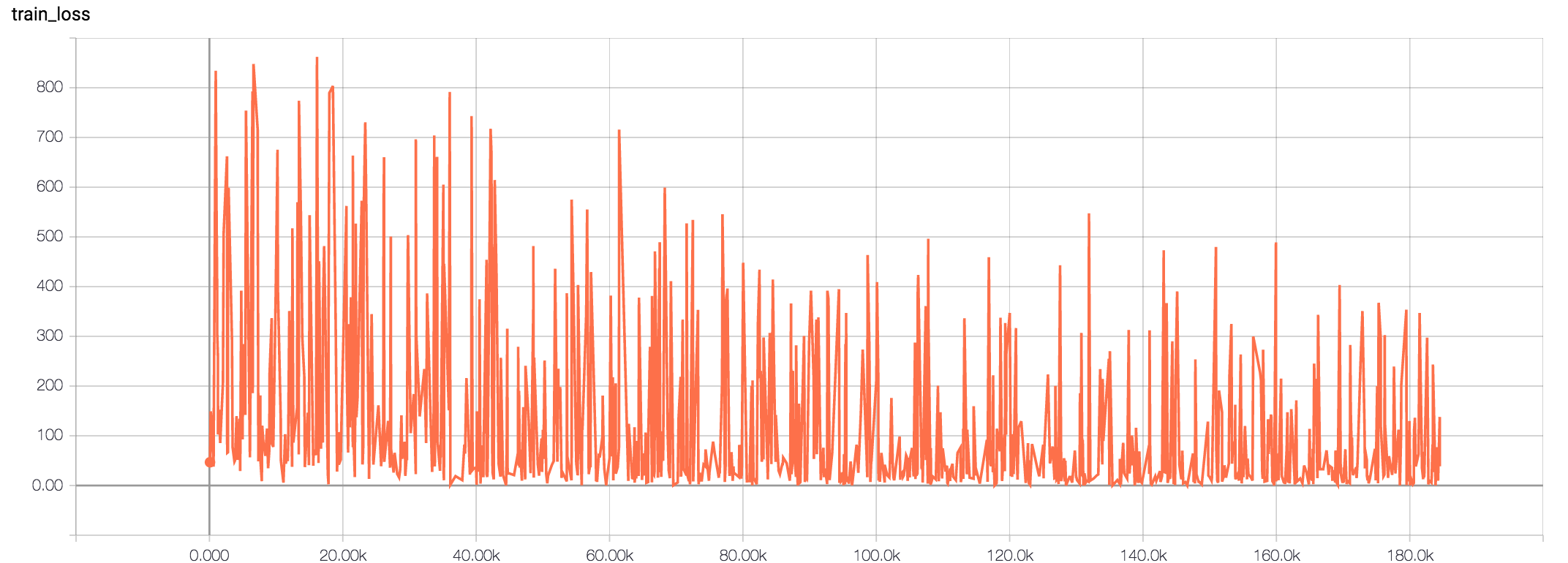

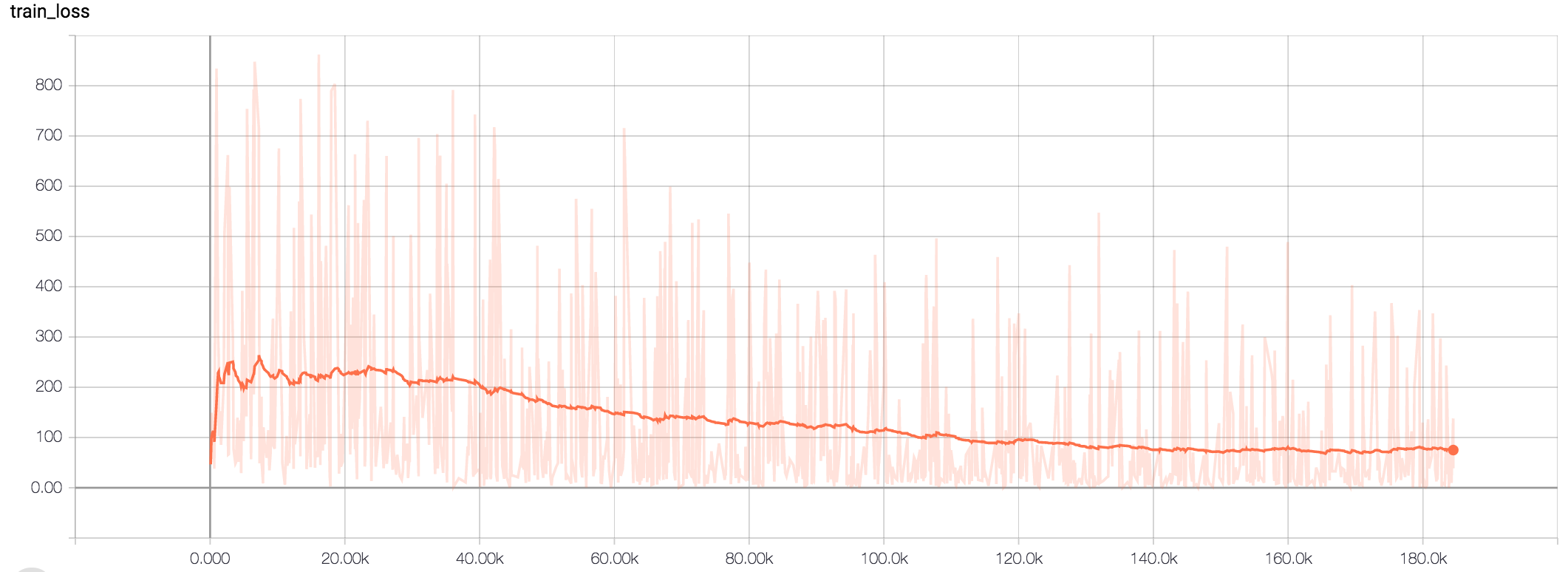

I think other answers are not correct, because they are based on experience with simpler models/architectures. The main point that was bothering me was the fact that noise in losses is usually more symmetrical (you can plot the average and the noise is randomly above and below the average). Here, we see more like low-tendency path and sudden peaks.

As I wrote, the architecture I'm using is an encoder-decoder with attention. It can easily concluded that inputs and outputs can have different lengths. The loss is summed over all time-steps, and doesn't need to be divided by the number of time-steps.

https://www.tensorflow.org/tutorials/seq2seq

Important note: It's worth pointing out that we divide the loss by batch_size, so our hyperparameters are "invariant" to batch_size. Some people divide the loss by (batch_size * num_time_steps), which plays down the errors made on short sentences. More subtly, our hyperparameters (applied to the former way) can't be used for the latter way. For example, if both approaches use SGD with a learning of 1.0, the latter approach effectively uses a much smaller learning rate of 1 / num_time_steps.

I was not averaging the loss, that's why the noise is observable.

P.S. Similarly the batch size of for example 8 can have a few hundred inputs and targets so in fact you can't say that it is small or big without knowing the mean length of example.