This question is similar to "What's the input of each LSTM layer in a stacked LSTM network?", but it focuses more on implementation details.

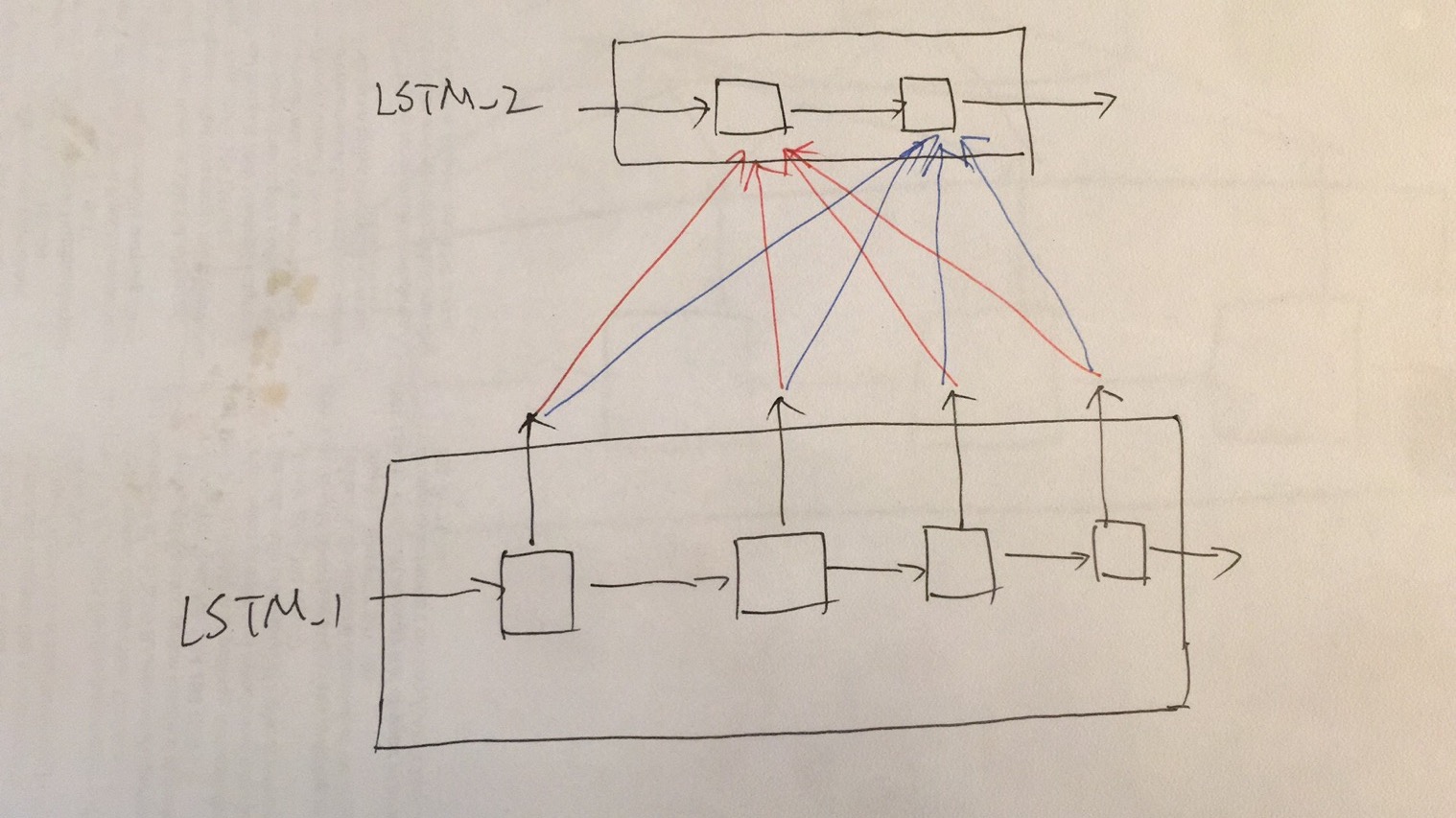

For simplicity, consider a stack with 4 units in the first layer and 2 units in the second, like the following:

    model.add(LSTM(4, input_shape=input_shape, return_sequences=True))
    model.add(LSTM(2, input_shape=input_shape))

I know that the output of LSTM_1 at each time step is a vector of length 4, but how do the 2 units of the next layer handle these 4 inputs? Is each of the 4 outputs fully connected to both units of the next layer?

My guess is that they are fully connected, as in the figure, but I am not sure; the Keras documentation does not state this explicitly.
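To make my guess concrete: if the layers are fully connected, I would expect the second layer's input weight matrix to have one row per output of the first layer and one column per gate per unit. Below is a minimal sketch of that expectation. The helper `lstm_weight_shapes` is a hypothetical name of my own, not a Keras function, and it assumes the usual layout of an input kernel, a recurrent kernel, and a bias, each covering the four gates.

```python
def lstm_weight_shapes(input_dim, units):
    """Hypothetical helper (not part of Keras): expected weight shapes of an
    LSTM layer, assuming every input feature feeds all four gates of every unit."""
    kernel = (input_dim, 4 * units)        # input-to-hidden weights
    recurrent_kernel = (units, 4 * units)  # hidden-to-hidden weights
    bias = (4 * units,)                    # one bias per gate per unit
    n_params = (kernel[0] * kernel[1]
                + recurrent_kernel[0] * recurrent_kernel[1]
                + bias[0])
    return {
        "kernel": kernel,
        "recurrent_kernel": recurrent_kernel,
        "bias": bias,
        "params": n_params,
    }

# Second layer: 2 units receiving the 4-dimensional output of the first layer.
shapes = lstm_weight_shapes(input_dim=4, units=2)
print(shapes)
```

If these shapes and the parameter count match what `model.summary()` or `model.layers[1].get_weights()` report for the second layer, that would support the fully connected interpretation.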

Thanks!