The following quote is from "Comparison with other sort algorithms" section from Wikipedia Merge Sort page

On typical modern architectures, efficient quicksort implementations generally outperform mergesort for sorting RAM-based arrays.[citation needed] On the other hand, merge sort is a stable sort and is more efficient at handling slow-to-access sequential media.

My questions:

Why does Quicksort outperform Mergesort when the data to be sorted can all fit into memory? If all data needed are cached or in memory wouldn't it be fast for both Quicksort and Mergesort to access?

Why is Mergesort more efficient at handling slow-to-access sequential data (such as from disk in the case where the data to be sorted can't all fit into memory)?

(move from my comments below to here)In an array

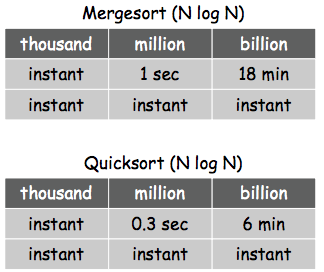

arrof primitives (data are sequential) of n elements. The pair of elements that has to be read and compared in MergeSort isarr[0]andarr[n/2](happens in the final merge). Now think the pair of elements that has to be read and compared in QuickSort isarr[1]andarr[n](happens in the first partition, assume we swap the randomly chosen pivot with the first element). We know data are read in blocks and load into cache, or disk to memory (correct me if I am wrong) then isn't there a better chance for the needed data gets load together in one block when using MergeSort? It just seems to me MergeSort would always have the upperhand because it is likely comparing elements that are closer together. I know this is False (see graph below) because QuickSort is obviously faster...... I know MergeSort is not in place and requires extra memory and that is likely to slow things down. Other than that what pieces am I missing in my analysis?

images are from Princeton CS MergeSort and QuickSort slides

My Motive:

I want to understand these above concepts because they are one of the main reasons of why mergeSort is preferred when sorting LinkedList,or none sequential data and quickSort is preferred when sorting Array, or sequential data. And why mergeSort is used to sort Object in Java and quickSort is used to sort primitive type in java.

update: Java 7 API actually uses TimSort to sort Object, which is a hybrid of MergeSort and InsertionSort. For primitives Dual-Pivot QuickSort. These changes were implemented starting in Java SE 7. This has to do with the stability of the sorting algorithm. Why does Java's Arrays.sort method use two different sorting algorithms for different types?

Edit:

I will appreciate an answer that addresses the following aspects:

- I know the two sorting algorithms differ in the number of moves, read, and comparisons. If those are that reasons contribute to the behaviors I see listed in my questions (I suspected it) then a thorough explanation of how the steps and process of the sorting algorithm results it having advantages or disadvantages seeking data from disk or memory will be much appreciated.

- Examples are welcome. I learn better with examples.

note: if you are reading @rcgldr's answer. check out our conversation in the chat room it has lots of good explanations and details. https://chat.stackoverflow.com/rooms/161554/discussion-between-rcgldr-and-oliver-koo