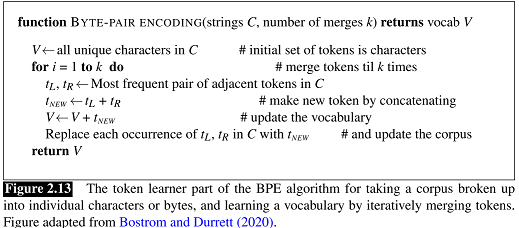

BPE is one of the three algorithms to deal with the unknown word problem(or languages with rich morphology that require dealing with structure below the word level) in an automatic way: byte-pair encoding, unigram language modeling, WordPiece, and the BPE tokenization schema has two parts: a token learner and a token segmenter.

The token learner takes a raw training corpus (sometimes roughly pre- separated into words, for example by whitespace) and induces a vocabulary, a set of tokens. The token segmenter takes a raw test sentence and segments it into the tokens in the vocabulary.

The algorithm has a parameter k which means k merges or k new symbols in the final vocabulary.

Let's train the BPE using this line: Pen Penapple Apple Pen adapted from PPAP, and show how the unknown/rare "words" penapplepen and applepen in the test data can be automatically reduced into known subword units.

Learning

![enter image description here]()

First, after some preprocessing(case mapping, regex based pre-tokenization, and adding end-of-word symbol _) we obtain the following strings C(as our corpus) and their frequencies(frequency: string):

2: p e n _

1: p e n a p p l e _

1: a p p l e _

The vocabulary V is [_, p, e, n, a, l]

Now let's run the first round of the for-loop in the above pseudocode:

p, e <- most frequent pair in {(p, e): 3, (e, n): 3, (n, _): 2, (a, p): 2, (p, p): 2, (p, l): 2, (l, e): 2, (e, _): 2, (n, a): 1}

pe <- p + e

[_, p, e, n, a, l, pe] <- [_, p, e, n, a, l] + pe

C becomes this:

2: pe n _

1: pe n a p p l e _

1: a p p l e _

Let's run the second merge as follows:

p, e <- most frequent pair in {(pe, n): 3, (n, _): 2, (a, p): 2, (p, p): 2, (p, l): 2, (l, e): 2, (e, _): 2, (n, a): 1}

pen <- pe + n

[_, p, e, n, a, l, pe, pen] <- [_, p, e, n, a, l, pe] + pen

C becomes this:

2: pen _

1: pen a p p l e _

1: a p p l e _

And here are the next merges if we take k as N >= 9:

Merge Current V

(pen, _) [_, p, e, n, a, l, pe, pen, pen_]

(a, p) [_, p, e, n, a, l, pe, pen, pen_, ap]

(ap, p) [_, p, e, n, a, l, pe, pen, pen_, ap, app]

(app, l) [_, p, e, n, a, l, pe, pen, pen_, ap, app, appl]

(appl, e) [_, p, e, n, a, l, pe, pen, pen_, ap, app, appl, apple]

(apple, _) [_, p, e, n, a, l, pe, pen, pen_, ap, app, appl, apple, apple_]

(pen, apple_) [_, p, e, n, a, l, pe, pen, pen_, ap, app, appl, apple, apple_, penapple_]

We see that after 9 iterations of merging, there are no adjacent pairs in C.

Parsing

The token parser just runs on the test data the merges we have learned from the training data, greedily, in the order we learned them. (Thus the frequencies in the test data don’t play a role, just the frequencies in the training data).

In this step we test the parser using this sentence: Applepen PenapplePen. As usual, we do the preprocessing we did in the training step and obtain:

a p p l e p e n _

p e n a p p l e p e n _

and follow the merging order:

(p, e), (pe, n), (pen, _), (a, p), (ap, p), (app, l), (appl, e), (apple, _), (pen, apple_)

First, (p, e). We merge p and e in the test data and get:

a p p l e pe n _

pe n a p p l e pe n _

Second, the (pe, n) and get:

a p p l e pen _

pen a p p l e pen _

.....

After all the 9 turns of merging we get(if k <= 9 we just apply the first k merges in this step; if k is 2 refer to this answer):

apple pen_

pen apple pen_

And the final tokenized test sentence is [apple, pen_, pen, apple, pen_], and the unknown(unseen in the training data) word penapplepen can also be separated.

Referneces:

- Neural Machine Translation of Rare Words with Subword Units

- Speech and Language Processing

- PPAP (Pen Pineapple Apple Pen)