I am working on a multiclass classification task (4 classes) on text data, using a BERT model as the classifier. I am following the blog post Transfer Learning for NLP: Fine-Tuning BERT for Text Classification. The last layer of my fine-tuned BERT model is `nn.LogSoftmax(dim=1)`, so it returns log-probabilities.

My data is pretty imbalanced, so I used `sklearn.utils.class_weight.compute_class_weight` to compute the class weights and passed them to the loss:

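    import numpy as np
    import torch
    import torch.nn as nn
    from sklearn.utils.class_weight import compute_class_weight

    # keyword arguments are required by recent scikit-learn versions
    class_weights = compute_class_weight('balanced', classes=np.unique(train_labels), y=train_labels)
    weights = torch.tensor(class_weights, dtype=torch.float)
    cross_entropy = nn.NLLLoss(weight=weights)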
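To make the weighting concrete, here is a minimal sketch of the `'balanced'` heuristic, which scikit-learn documents as `n_samples / (n_classes * np.bincount(y))`. The label counts in `toy_labels` are made up for illustration and are not my actual data.

```python
import numpy as np

# Hypothetical, made-up labels for 4 imbalanced classes (not the real dataset).
toy_labels = np.array([0] * 50 + [1] * 30 + [2] * 15 + [3] * 5)

counts = np.bincount(toy_labels)  # samples per class: 50, 30, 15, 5
n_samples, n_classes = len(toy_labels), len(counts)

# Same formula scikit-learn documents for class_weight='balanced'.
balanced_weights = n_samples / (n_classes * counts)

# The most common class (0) gets weight 0.5, the rarest class (3) gets 5.0.
print(balanced_weights)
```

So the weight of each class is inversely proportional to how often it appears, and a perfectly balanced dataset would give every class a weight of 1.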

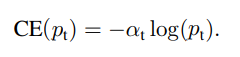

My results were not very good, so I thought of experimenting with focal loss. This is the focal loss code I have:

    class FocalLoss(nn.Module):
        def __init__(self, alpha=1, gamma=2, logits=False, reduce=True):
            super(FocalLoss, self).__init__()
            self.alpha = alpha
            self.gamma = gamma
            self.logits = logits
            self.reduce = reduce

        def forward(self, inputs, targets):
            BCE_loss = nn.CrossEntropyLoss()(inputs, targets)
            pt = torch.exp(-BCE_loss)
            F_loss = self.alpha * (1-pt)**self.gamma * BCE_loss
            if self.reduce:
                return torch.mean(F_loss)
            else:
                return F_loss

I have three questions now. The first is the most important:

- Should I use class weights with focal loss?
- If I have to implement weights inside this focal loss, can I use the `weights` parameter inside `nn.CrossEntropyLoss()`?
- If this implementation is incorrect, what should the proper code be, including the weights (if possible)?
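For reference, one way the two ideas could be combined is sketched below. This is an illustrative sketch under stated assumptions, not a verified reference implementation: the class name `WeightedFocalLoss` and its arguments are hypothetical, the toy tensors are made up, and it assumes the model outputs log-probabilities (as with `nn.LogSoftmax(dim=1)`). The main difference from the code above is `reduction="none"`, so that `pt` is computed per sample instead of from the batch-averaged loss, and the class weights are applied per sample afterwards.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class WeightedFocalLoss(nn.Module):
    """Multiclass focal loss sketch; expects log-probabilities as input."""

    def __init__(self, weight=None, gamma=2.0, reduction="mean"):
        super().__init__()
        # Optional per-class weights of shape (n_classes,); acts as a per-class alpha.
        self.register_buffer("weight", weight)
        self.gamma = gamma
        self.reduction = reduction

    def forward(self, log_probs, targets):
        # Per-sample, unweighted NLL, so exp(-nll) is the true-class probability.
        nll = F.nll_loss(log_probs, targets, reduction="none")
        pt = torch.exp(-nll)
        # Down-weight easy examples (pt close to 1) by the focal factor.
        loss = (1.0 - pt) ** self.gamma * nll
        if self.weight is not None:
            # Scale each sample by the weight of its target class.
            loss = loss * self.weight[targets]
        if self.reduction == "mean":
            return loss.mean()
        if self.reduction == "sum":
            return loss.sum()
        return loss


# Toy usage with made-up data: 8 samples, 4 classes.
torch.manual_seed(0)
log_probs = F.log_softmax(torch.randn(8, 4), dim=1)
targets = torch.randint(0, 4, (8,))
toy_weights = torch.tensor([0.5, 0.8, 1.7, 5.0])  # hypothetical class weights

criterion = WeightedFocalLoss(weight=toy_weights, gamma=2.0)
loss = criterion(log_probs, targets)
```

With `gamma=0` and no weights this reduces to the plain mean NLL loss. Note that the `"mean"` reduction here is a simple average over the batch, whereas `nn.NLLLoss(weight=...)` normalizes by the sum of the target weights, so the two are not on the same scale when weights are used.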

`compute_class_weight('balanced', np.unique(train_labels), train_labels)` – Riorsson

`balanced` means assigning the class weights according to the number of samples present per class, doesn't it? As given in the documentation: "If 'balanced', class weights will be given by `n_samples / (n_classes * np.bincount(y))`." – Rapallo