I have a pandas dataframe named "df" that I'm creating with the result of a SQL query against a Netezza database. I'm working in Jupyter notebook. The dataframe has two rows and two of the columns (CREATEDDATE and STAGEDATE) contain datetime values. When I run print(df), the result looks like this:

ID ISDELETED PARENTID CREATEDBYID \

0 017o000003tQRftAAG false a0no000000Hrv1IAAR 005o0000001w8wgAAA

1 017o000003jl52cAAA false a0no000000GszDUAAZ 005o0000001w2pTAAQ

CREATEDDATE FIELD OLDVALUE NEWVALUE STAGEDATE

0 2015-07-30 14:51:41 created None None 2016-06-06

1 2015-07-16 14:48:37 created None None 2016-06-06

If I run print(df.dtypes), the result is this:

ID object

ISDELETED object

PARENTID object

CREATEDBYID object

CREATEDDATE datetime64[ns]

FIELD object

OLDVALUE object

NEWVALUE object

STAGEDATE datetime64[ns]

dtype: object

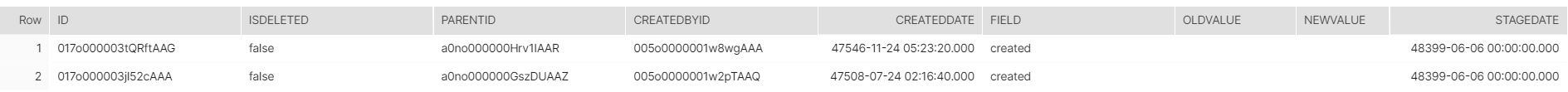

So, as far as I can tell, my datetime columns are correctly formatted to write to Snowflake using write_pandas(). However, after I do that, the dates are wildly different in Snowflake:

The STAGEDATE value of 2016-06-06, for example, is now 48399-06-06. Does anyone know how to fix this? I'm doing my pull from Netezza using pyodbc and using df = cs.execute() to populate the dataframe. I am importing and using snowflake.connector for my connection to Snowflake and am running the following to get write_pandas:

from snowflake.connector.pandas_tools import write_pandas