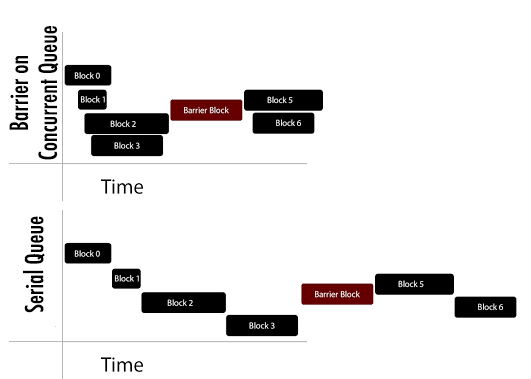

Your diagram perfectly illustrates how a barrier works. Seven blocks have been dispatched to a concurrent queue: four without a barrier (blocks 0 through 3 in your diagram), one with a barrier (the maroon-colored "barrier block" numbered 4), and then two more without a barrier (blocks 5 and 6).

As you can see, the first four run concurrently, but the barrier block will not run until those first four finish. And the last two will not start until the "barrier block" finishes.
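To see the ordering from the diagram in code, here is a minimal Swift sketch, assuming Swift's Dispatch overlay, where `queue.async(flags: .barrier)` is the counterpart of `dispatch_barrier_async`. It dispatches blocks 0 through 3 normally, block 4 with a barrier, and blocks 5 and 6 normally, logging when each block starts and ends. The names `EventLog` and `runBarrierDemo` are hypothetical, and `Thread.sleep` only simulates work. The sketch creates its own concurrent queue on purpose: on the global concurrent queues a barrier block behaves like an ordinary async block.

```swift
import Dispatch
import Foundation

/// Hypothetical helper: a lock-protected log so concurrent blocks can record events safely.
final class EventLog: @unchecked Sendable {
    private let lock = NSLock()
    private var events: [String] = []

    func record(_ event: String) {
        lock.lock()
        events.append(event)
        lock.unlock()
    }

    var snapshot: [String] {
        lock.lock()
        defer { lock.unlock() }
        return events
    }
}

/// Hypothetical demo mirroring the diagram: blocks 0 through 3 without a barrier,
/// block 4 with a barrier, then blocks 5 and 6 without a barrier.
func runBarrierDemo() -> [String] {
    // Barriers only take effect on a custom concurrent queue like this one.
    let queue = DispatchQueue(label: "demo.barrier", attributes: .concurrent)
    let log = EventLog()
    let group = DispatchGroup()

    func dispatchBlock(_ id: Int, flags: DispatchWorkItemFlags = []) {
        queue.async(group: group, flags: flags) {
            log.record("start \(id)")
            Thread.sleep(forTimeInterval: 0.05) // simulate some work
            log.record("end \(id)")
        }
    }

    for id in 0...3 { dispatchBlock(id) }
    dispatchBlock(4, flags: .barrier)
    for id in 5...6 { dispatchBlock(id) }

    group.wait() // wait for all seven blocks before returning the log
    return log.snapshot
}

let events = runBarrierDemo()
print(events.joined(separator: "\n"))
```

Blocks 0 through 3 (and likewise 5 and 6) may start and finish in any order relative to each other; only the ordering around the barrier is guaranteed: "start 4" comes after every "end 0" through "end 3", "end 4" immediately follows it, and 5 and 6 start only after "end 4".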

Compare that to a serial queue, where none of the tasks can run concurrently: each block starts only after the previous one has finished.

If every block dispatched to the concurrent queue were dispatched with a barrier, then you're right that it would be equivalent to using a serial queue. But the power of barriers comes into play only when you combine barrier blocks with non-barrier blocks on a concurrent queue. So, when you want concurrent behavior, don't use barriers. But where a particular block needs serial-like behavior with respect to the other blocks on a concurrent queue, dispatch that block with a barrier.

One example is that you might dispatch 10 blocks without barriers, but then add an 11th with a barrier. Thus the first 10 may run concurrently with respect to each other, but the 11th will only start when the first 10 finish (achieving a "completion handler" behavior).
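A minimal Swift sketch of that "completion handler" pattern, assuming a private concurrent queue (the function `sumOfSquares` and class `Accumulator` are hypothetical illustrations): ten non-barrier blocks each compute a square, and an 11th block dispatched with `.barrier` runs only after all ten have finished, so it sees every result.

```swift
import Dispatch
import Foundation

/// Hypothetical helper: a lock-protected list of integers.
final class Accumulator: @unchecked Sendable {
    private let lock = NSLock()
    private var values: [Int] = []

    func append(_ value: Int) {
        lock.lock()
        values.append(value)
        lock.unlock()
    }

    var total: Int {
        lock.lock()
        defer { lock.unlock() }
        return values.reduce(0, +)
    }
}

/// Hypothetical example: square 1...10 concurrently, then report the sum from a barrier block.
func sumOfSquares(completion: @escaping @Sendable (Int) -> Void) {
    let queue = DispatchQueue(label: "demo.completion", attributes: .concurrent)
    let results = Accumulator()

    // Blocks 1 through 10: no barrier, so they may run concurrently.
    for n in 1...10 {
        queue.async {
            results.append(n * n)
        }
    }

    // Block 11: the barrier waits for the ten blocks above, acting as a completion handler.
    queue.async(flags: .barrier) {
        completion(results.total)
    }
}
```

A barrier only waits for blocks previously submitted to the same queue. If the work is spread across several queues, a `DispatchGroup` with `notify` is the usual alternative.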

Or as rmaddy said (+1), the other common use for barriers is when you're accessing some shared resource for which you allow concurrent reads (without a barrier) but must make each write exclusive (with a barrier). In that case, you often use `dispatch_sync` for the reads and `dispatch_barrier_async` for the writes.
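The reader-writer pattern can be sketched as a small Swift wrapper, where `queue.sync` plays the role of `dispatch_sync` for reads and `queue.async(flags: .barrier)` plays the role of `dispatch_barrier_async` for writes. `SynchronizedDictionary` is a hypothetical name; this is an illustration under those assumptions, not a complete thread-safe collection.

```swift
import Dispatch

/// Hypothetical wrapper: concurrent reads, exclusive (barrier) writes.
final class SynchronizedDictionary<Key: Hashable, Value>: @unchecked Sendable {
    private var storage: [Key: Value] = [:]
    private let queue = DispatchQueue(label: "demo.synchronized-dictionary", attributes: .concurrent)

    subscript(key: Key) -> Value? {
        get {
            // Read: `sync` without a barrier, so many reads may overlap.
            queue.sync { storage[key] }
        }
        set {
            // Write: asynchronous barrier, so it runs alone on the queue.
            queue.async(flags: .barrier) {
                self.storage[key] = newValue
            }
        }
    }

    var count: Int {
        queue.sync { storage.count }
    }
}

let dictionary = SynchronizedDictionary<Int, Int>()
let writers = DispatchGroup()
for i in 0..<100 {
    // Many threads write at once; the barrier serializes the actual mutations.
    DispatchQueue.global().async(group: writers) {
        dictionary[i] = i * 2
    }
}
writers.wait()
print(dictionary.count)
```

Reads use `sync` because the caller needs the value back, and any number of them can run in parallel. Writes use an asynchronous barrier because the caller does not need to wait, yet the barrier guarantees each write runs alone: after earlier reads finish and before later reads start.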