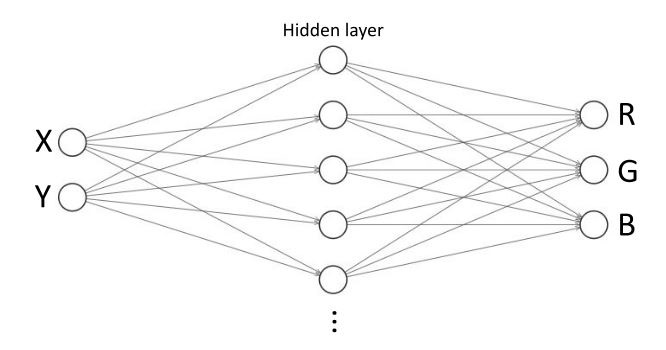

I want to draw StackOverflow's logo with this Neural Network:

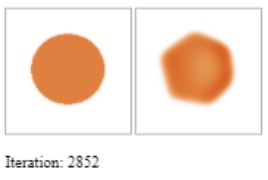

Ideally, the NN should learn the function [r, g, b] = f([x, y]). In other words, it should return an RGB color for a given pair of coordinates. The feedforward neural network (FFNN) works pretty well for simple shapes like a circle or a box. For example, after several thousand epochs a circle looks like this:

Try it yourself: https://codepen.io/adelriosantiago/pen/PoNGeLw

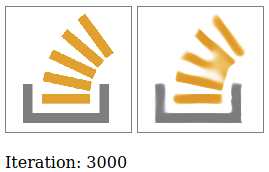
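To make the mapping [r, g, b] = f([x, y]) concrete, here is a minimal sketch of how a training set for it could be built from image pixels. It assumes the pixels come as a flat RGBA array (the layout of a canvas `ImageData.data`), scales both coordinates and color channels to [0, 1], and emits samples in the `{ input, output }` shape used by Synaptic's `Trainer`. The function name `buildTrainingSet` is hypothetical, not taken from the CodePen.

```javascript
// Hypothetical helper: turn RGBA pixel data into samples of [r, g, b] = f([x, y]).
// `pixels` is assumed to be a flat RGBA array, 4 entries per pixel (like ImageData.data).
function buildTrainingSet(pixels, width, height) {
  const set = [];
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      const i = (y * width + x) * 4; // index of this pixel's R channel
      set.push({
        // Coordinates scaled to [0, 1]; a 1-pixel-wide or -tall image maps to 0
        input: [
          width > 1 ? x / (width - 1) : 0,
          height > 1 ? y / (height - 1) : 0,
        ],
        // Channels scaled from 0..255 to [0, 1]; the alpha channel is ignored
        output: [pixels[i] / 255, pixels[i + 1] / 255, pixels[i + 2] / 255],
      });
    }
  }
  return set;
}
```

For a 2×2 image whose top-right pixel is pure green, the second sample is `{ input: [1, 0], output: [0, 1, 0] }`. Keeping outputs in [0, 1] matters because logistic output neurons cannot produce values outside that range.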

However, since StackOverflow's logo is far more complex, the FFNN's results are somewhat poor even after several thousand iterations:

From left to right:

- StackOverflow's logo at 256 colors.

- 15 hidden neurons: the left handle never appears.

- 50 hidden neurons: a poor result overall.

- Learning rate of 0.03: blue shows up in the output, although the original image contains no blue.

- A time-decreasing learning rate: the left handle appears, but other details are lost.
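The post does not say which time-decreasing schedule was used, so the sketch below assumes inverse-time decay, rate(epoch) = initialRate / (1 + decay · epoch), with an optional floor. The name `inverseTimeDecay` and the `decay`/`minRate` values are illustrative assumptions; step decay or exponential decay would be equally valid choices.

```javascript
// Assumed schedule (inverse-time decay): rate(epoch) = initialRate / (1 + decay * epoch),
// clamped from below at minRate so training never stalls completely.
function inverseTimeDecay(initialRate, decay, epoch, minRate = 0) {
  return Math.max(minRate, initialRate / (1 + decay * epoch));
}

// Example: start at 0.03 and print the rate at a few epochs.
for (const epoch of [0, 1000, 5000, 10000]) {
  console.log(epoch, inverseTimeDecay(0.03, 0.001, epoch).toFixed(4));
}
```

With `decay = 0.001` the rate halves after 1,000 epochs; a larger `decay` shrinks it faster, which helps fine details settle but can also freeze the network before those details are learned.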

Try it yourself: https://codepen.io/adelriosantiago/pen/xxVEjeJ

The parameters of interest are the `synaptic.Architect.Perceptron` definition and the `learningRate` value.
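To show what those two parameters control, here is a dependency-free sketch of a network with 2 inputs, one hidden layer and 3 outputs, trained by plain squared-error backpropagation. It assumes logistic (sigmoid) units and is not Synaptic's implementation; `makeMLP`, `forward` and `trainStep` are hypothetical names. `nHidden` plays the role of the hidden-layer size passed to the Perceptron (such as 15 or 50), and `learningRate` scales every weight update.

```javascript
// Minimal 2-H-3 multilayer perceptron with logistic units (illustrative sketch only).
const sigmoid = (z) => 1 / (1 + Math.exp(-z));

function makeMLP(nIn, nHidden, nOut, rand = Math.random) {
  // Each row holds one neuron's weights in [-1, 1), with its bias as the last entry.
  const init = (rows, cols) =>
    Array.from({ length: rows }, () =>
      Array.from({ length: cols + 1 }, () => rand() * 2 - 1));
  return { w1: init(nHidden, nIn), w2: init(nOut, nHidden) };
}

// Activations of one fully connected layer.
function layer(weights, input) {
  return weights.map((row) => {
    let z = row[row.length - 1]; // bias
    for (let i = 0; i < input.length; i++) z += row[i] * input[i];
    return sigmoid(z);
  });
}

function forward(net, input) {
  const hidden = layer(net.w1, input);
  return { hidden, output: layer(net.w2, hidden) };
}

// One gradient step on E = 1/2 * sum((y - t)^2) for a single sample.
// Returns the error measured before the update.
function trainStep(net, input, target, learningRate) {
  const { hidden, output } = forward(net, input);
  // Output deltas: dE/dz = (y - t) * y * (1 - y)
  const dOut = output.map((y, k) => (y - target[k]) * y * (1 - y));
  // Hidden deltas: backpropagate the output deltas through w2 (bias column excluded)
  const dHid = hidden.map((h, j) => {
    let s = 0;
    for (let k = 0; k < dOut.length; k++) s += dOut[k] * net.w2[k][j];
    return s * h * (1 - h);
  });
  // Update w2, then w1 (dHid was already computed from the old w2)
  net.w2.forEach((row, k) => {
    hidden.forEach((h, j) => { row[j] -= learningRate * dOut[k] * h; });
    row[hidden.length] -= learningRate * dOut[k];
  });
  net.w1.forEach((row, j) => {
    input.forEach((x, i) => { row[i] -= learningRate * dHid[j] * x; });
    row[input.length] -= learningRate * dHid[j];
  });
  return output.reduce((e, y, k) => e + (y - target[k]) ** 2, 0) / 2;
}
```

Calling `trainStep` once per `{ input, output }` sample and repeating over many epochs is one epoch-based training loop. A larger `learningRate` makes each step bigger, which speeds up learning but can overshoot and produce colors the image never contained.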

How can I improve the accuracy of this NN?

Could you improve the snippet? If so, please explain what you did. If there is a better NN architecture for this type of task, could you please provide an example?

Additional info:

- Artificial Neural Network library used: Synaptic.js

- To run this example on localhost: see the repository.