I've written a little parsing program to compare the older System.IO.Stream and the newer System.IO.Pipelines in .NET Core. I'm expecting the pipelines code to be of equivalent speed or faster. However, it's about 40% slower.

The program is simple: it searches for a keyword in a 100Mb text file, and returns the line number of the keyword. Here is the Stream version:

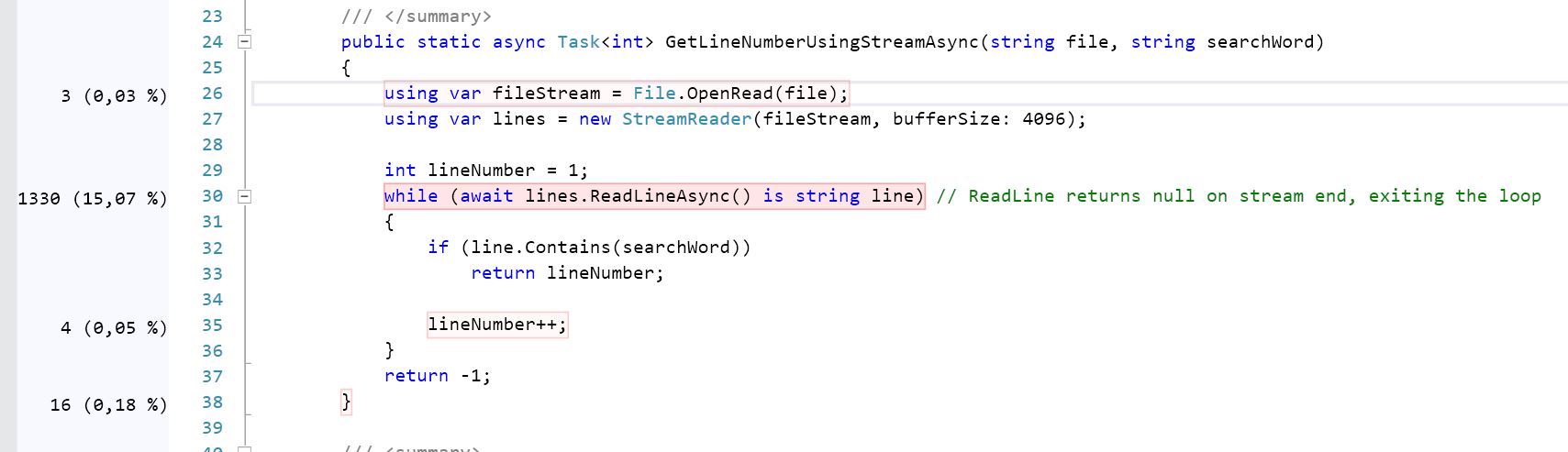

public static async Task<int> GetLineNumberUsingStreamAsync(

string file,

string searchWord)

{

using var fileStream = File.OpenRead(file);

using var lines = new StreamReader(fileStream, bufferSize: 4096);

int lineNumber = 1;

// ReadLineAsync returns null on stream end, exiting the loop

while (await lines.ReadLineAsync() is string line)

{

if (line.Contains(searchWord))

return lineNumber;

lineNumber++;

}

return -1;

}

I would expect the above stream code to be slower than the below pipelines code, because the stream code is encoding the bytes to a string in the StreamReader. The pipelines code avoids this by operating on bytes:

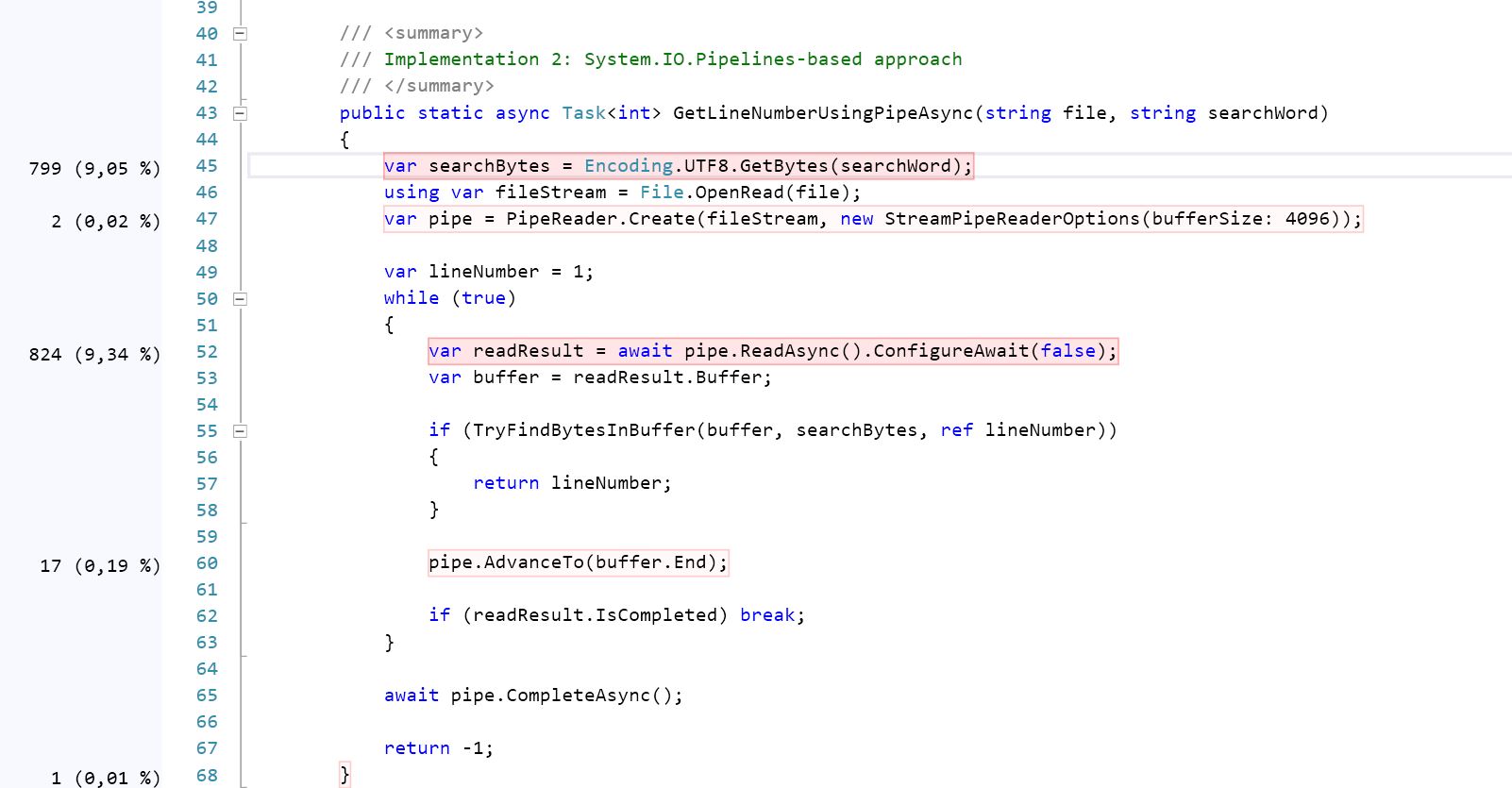

public static async Task<int> GetLineNumberUsingPipeAsync(string file, string searchWord)

{

var searchBytes = Encoding.UTF8.GetBytes(searchWord);

using var fileStream = File.OpenRead(file);

var pipe = PipeReader.Create(fileStream, new StreamPipeReaderOptions(bufferSize: 4096));

var lineNumber = 1;

while (true)

{

var readResult = await pipe.ReadAsync().ConfigureAwait(false);

var buffer = readResult.Buffer;

if(TryFindBytesInBuffer(ref buffer, searchBytes, ref lineNumber))

{

return lineNumber;

}

pipe.AdvanceTo(buffer.End);

if (readResult.IsCompleted) break;

}

await pipe.CompleteAsync();

return -1;

}

Here are the associated helper methods:

/// <summary>

/// Look for `searchBytes` in `buffer`, incrementing the `lineNumber` every

/// time we find a new line.

/// </summary>

/// <returns>true if we found the searchBytes, false otherwise</returns>

static bool TryFindBytesInBuffer(

ref ReadOnlySequence<byte> buffer,

in ReadOnlySpan<byte> searchBytes,

ref int lineNumber)

{

var bufferReader = new SequenceReader<byte>(buffer);

while (TryReadLine(ref bufferReader, out var line))

{

if (ContainsBytes(ref line, searchBytes))

return true;

lineNumber++;

}

return false;

}

static bool TryReadLine(

ref SequenceReader<byte> bufferReader,

out ReadOnlySequence<byte> line)

{

var foundNewLine = bufferReader.TryReadTo(out line, (byte)'\n', advancePastDelimiter: true);

if (!foundNewLine)

{

line = default;

return false;

}

return true;

}

static bool ContainsBytes(

ref ReadOnlySequence<byte> line,

in ReadOnlySpan<byte> searchBytes)

{

return new SequenceReader<byte>(line).TryReadTo(out var _, searchBytes);

}

I'm using SequenceReader<byte> above because my understanding is that it's more intelligent/faster than ReadOnlySequence<byte>; it has a fast path for when it can operate on a single Span<byte>.

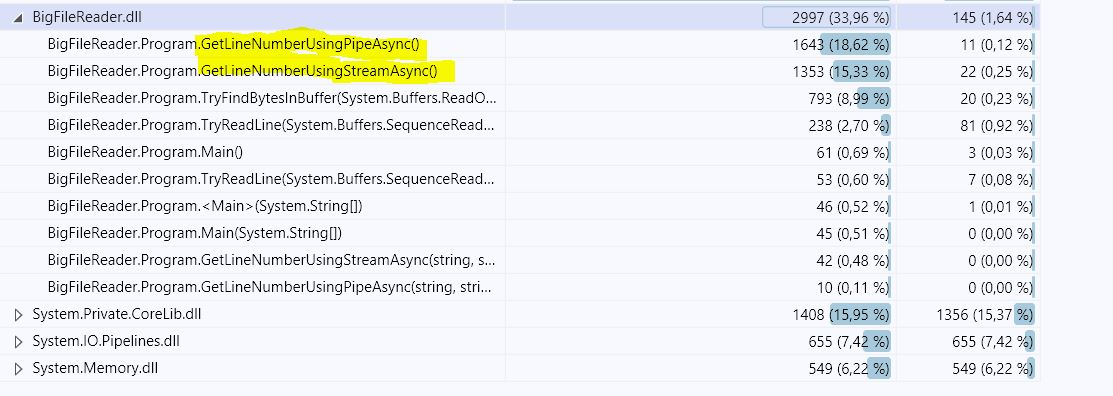

Here are the benchmark results (.NET Core 3.1). Full code and BenchmarkDotNet results are available in this repo.

- GetLineNumberWithStreamAsync - 435.6 ms while allocating 366.19 MB

- GetLineNumberUsingPipeAsync - 619.8 ms while allocating 9.28 MB

Am I doing something wrong in the pipelines code?

Update: Evk has answered the question. After applying his fix, here are the new benchmark numbers:

- GetLineNumberWithStreamAsync - 452.2 ms while allocating 366.19 MB

- GetLineNumberWithPipeAsync - 203.8 ms while allocated 9.28 MB