I'm trying to get Bitbucket Pipelines to work with multiple steps that each define a deployment environment. When I do, I get this error:

Configuration error The deployment environment 'Staging' in your bitbucket-pipelines.yml file occurs multiple times in the pipeline. Please refer to our documentation for valid environments and their ordering.

From what I've read, the deployment environment has to be set on a per-step basis.
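
To make that concrete, here is a minimal sketch of the pattern as I understand it. The step names and scripts are hypothetical placeholders, not taken from my real file. Each step names its own environment with a `deployment` key, and I'm assuming this is only accepted while no environment is repeated within a single pipeline, which is what the error message suggests:

```yaml
# Minimal sketch; step names and scripts are placeholders, not from my real file
pipelines:
  default:
    - step:
        name: deploy-to-staging
        deployment: Staging        # each environment appears once in this pipeline
        script:
          - echo 'deploying to staging'
    - step:
        name: deploy-to-production
        deployment: Production
        script:
          - echo 'deploying to production'
```

My actual file differs from this sketch in that two steps in the same pipeline both use `Staging`.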

How would I set up the pipelines file below so that it doesn't hit that error?

    image: ubuntu:18.04

    definitions:
      steps:
        - step: &build
            name: npm-build
            condition:
              changesets:
                includePaths:
                  # Only run npm if anything in the build directory was touched
                  - "build/**"
            image: node:14.17.5
            script:
              - echo 'build initiated'
              - cd build
              - npm install
              - npm run dev
              - echo 'build complete'
            artifacts:
              - themes/factor/css/**
              - themes/factor/js/**
        - step: &deploychanges
            name: Deploy_Changes
            deployment: Staging
            script:
              - echo 'Installing server dependencies'
              - apt-get update -q
              - apt-get install -qy software-properties-common
              - add-apt-repository -y ppa:git-ftp/ppa
              - apt-get update -q
              - apt-get install -qy git-ftp
              - echo 'All dependencies installed'
              - echo 'Transferring changes'
              - git ftp init --user $FTP_USER --passwd $FTP_PASSWORD $FTP_ADDRESS push --force --changed-only -vv
              - echo 'File transfer complete'
        - step: &deploycompiled
            name: Deploy_Compiled
            deployment: Staging
            condition:
              changesets:
                includePaths:
                  # Only run npm if anything in the build directory was touched
                  - "build/**"
            script:
              - echo 'Installing server dependencies'
              - apt-get update -q
              - apt-get install -qy software-properties-common
              - add-apt-repository -y ppa:git-ftp/ppa
              - apt-get update -q
              - apt-get install -qy git-ftp
              - echo 'All dependencies installed'
              - echo 'Transferring compiled assets'
              - git ftp init --user $FTP_USER --passwd $FTP_PASSWORD $FTP_ADDRESS push --all --syncroot themes/factor/css/ -vv
              - git ftp init --user $FTP_USER --passwd $FTP_PASSWORD $FTP_ADDRESS push --all --syncroot themes/factor/js/ -vv
              - echo 'File transfer complete'

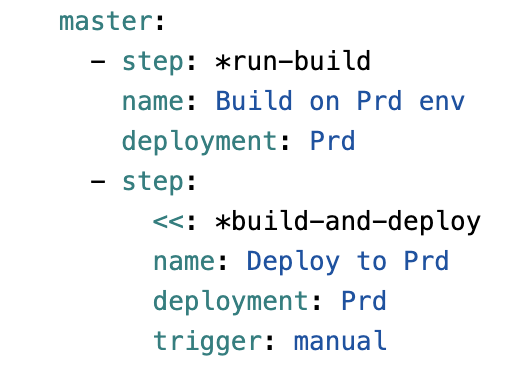

    pipelines:
      branches:
        master:
          - step: *build
          - step:
              <<: *deploychanges
              deployment: Production
          - step:
              <<: *deploycompiled
              deployment: Production
        dev:
          - step: *build
          - step: *deploychanges
          - step: *deploycompiled