Uploading to S3 involves two separate concerns: authentication and the upload itself.

Auth

Some possibilities, from least to most secure:

- Make your folder public (either via policy or ACL).

- Create an IAM user that has your preferred limits, and use its access keys.

- Use STS to issue temporary credentials, either authenticating yourself or using federation.

- Generate a pre-signed upload link for every file.
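As a rough illustration of the "preferred limits" in the IAM option above, the policy could allow nothing but uploads under a single prefix. The helper name `buildUploadPolicy`, the bucket `mybucket` and the prefix `uploads` are placeholders of mine, not anything prescribed by AWS or Dropzone; treat this as a sketch to adapt.

```javascript
// Hypothetical helper: builds an IAM identity policy that only allows
// uploads (s3:PutObject) under one prefix of one bucket.
function buildUploadPolicy(bucket, prefix) {
  // Strip leading/trailing slashes so the ARN has exactly one separator.
  const cleanPrefix = prefix.replace(/^\/+|\/+$/g, '');
  // An empty prefix means "anywhere in the bucket".
  const path = cleanPrefix ? `${cleanPrefix}/*` : '*';
  return {
    Version: '2012-10-17',
    Statement: [
      {
        Effect: 'Allow',
        Action: ['s3:PutObject'],
        Resource: [`arn:aws:s3:::${bucket}/${path}`],
      },
    ],
  };
}

// Print the policy as JSON, ready to paste into the IAM console.
console.log(JSON.stringify(buildUploadPolicy('mybucket', 'uploads'), null, 2));
```

Keeping the policy to `s3:PutObject` means leaked keys can add files but cannot list, read or delete them.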

Generating pre-signed links was demonstrated by Aaron Rau.

Using STS is conceptually simpler (no need to sign each link), but is somewhat less secure (the same temp credentials can be used elsewhere until they expire).
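Because temporary credentials expire, the client probably wants to know when to fetch new ones. A minimal sketch, assuming the credentials object has the shape returned by `getSessionToken` and that its `Expiration` field reaches the browser as a date string `Date` can parse. The helper names and the 60-second margin are my own choices.

```javascript
// Hypothetical helper: milliseconds left before STS credentials expire.
// Assumes creds.Expiration is a date string that Date can parse.
function msUntilExpiry(creds, now = Date.now()) {
  const expiresAt = new Date(creds.Expiration).getTime();
  if (Number.isNaN(expiresAt)) {
    throw new Error('creds.Expiration is missing or not a valid date');
  }
  return expiresAt - now;
}

// Treat credentials as stale slightly early (60 s by default, an arbitrary
// margin) so an upload does not start with a token that is about to expire.
function needsRefresh(creds, marginMs = 60000, now = Date.now()) {
  return msUntilExpiry(creds, now) <= marginMs;
}
```

Calling `needsRefresh(creds)` before each upload and re-fetching when it returns `true` would be one way to use it.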

If you use federated auth, you can skip the server-side entirely!

Some good tutorials for getting temporary IAM credentials for federated users are here (for FineUploader, but the mechanism is the same) and here.

To generate your own temporary IAM credentials you can use the AWS-SDK. An example in PHP:

Server:

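    <?php
    require 'vendor/autoload.php';

    use Aws\Sts\StsClient;

    // The calling IAM user's keys are picked up by the SDK's default credential chain
    $client = new StsClient(['region' => 'us-east-1', 'version' => 'latest']);

    // Ask STS for temporary credentials
    // (AccessKeyId, SecretAccessKey, SessionToken, Expiration)
    $result = $client->getSessionToken();

    header('Content-type: application/json');
    echo json_encode($result['Credentials']);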

Client:

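    let dropzonesetup = async () => {
      // auth.php responds with the STS credentials as JSON
      let creds = await fetch('//example.com/auth.php')
        .then(res => res.json())
        .catch(console.error);
      if (!creds) return;

      // If using aws-sdk.js (STS capitalizes the field names)
      AWS.config.credentials = new AWS.Credentials(
        creds.AccessKeyId, creds.SecretAccessKey, creds.SessionToken);
    }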

Uploading

Either use Dropzone natively and amend it as needed, or have Dropzone act as a front end for the aws-sdk.

To use the aws-sdk

You need to include it on the page:

<script src="//sdk.amazonaws.com/js/aws-sdk-2.262.1.min.js"></script>

And then update Dropzone to interact with it (based on this tutorial).

    let canceled = file => { if (file.s3upload) file.s3upload.abort() }

    let options =
      { canceled
      , removedfile: canceled
      , accept (file, done) {
          let params = {Bucket: 'mybucket', Key: file.name, Body: file };
          file.s3upload = new AWS.S3.ManagedUpload({params});
          done();
        }
      }

    // let aws-sdk send events to dropzone.
    function sendEvents(file) {
      let progress = i => dz.emit('uploadprogress', file, i.loaded * 100 / i.total, i.loaded);
      file.s3upload.on('httpUploadProgress', progress);
      file.s3upload.send(err => err ? dz.emit('error', file, err) : dz.emit('complete', file));
    }

    Dropzone.prototype.uploadFiles = files => files.map(sendEvents);

    var dz = new Dropzone('#dz', options)

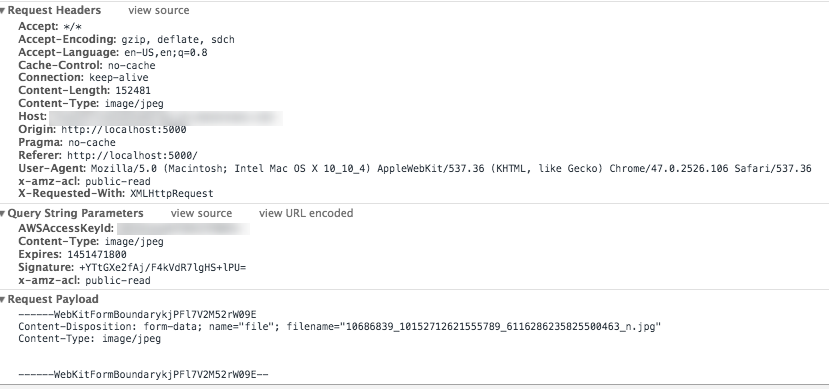
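One detail in the progress handler above: if the SDK's progress event arrives with `total` missing or zero, `i.loaded * 100 / i.total` produces `NaN` or `Infinity`. A small guard could look like the following. `progressArgs` is a hypothetical helper name, and I have not checked under which conditions the SDK actually omits `total`.

```javascript
// Hypothetical helper: turn an aws-sdk httpUploadProgress event into the
// [percent, bytesSent] pair passed to Dropzone's 'uploadprogress' event.
function progressArgs(evt) {
  const loaded = evt.loaded || 0;
  // With no usable total, report 0% rather than NaN or Infinity.
  const percent = evt.total > 0 ? Math.min(100, (loaded * 100) / evt.total) : 0;
  return [percent, loaded];
}
```

Inside `sendEvents` it would be used as `dz.emit('uploadprogress', file, ...progressArgs(i))`.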

To use Dropzone natively

    let options =
      { method: 'put'

      // Have DZ send raw data instead of formData
      , sending (file, xhr) {
          let _send = xhr.send
          xhr.send = () => _send.call(xhr, file)
        }

      // For STS, if creds is the result of getSessionToken / getFederatedToken
      , headers: { 'x-amz-security-token': creds.SessionToken }

      // Or, if you are using signed URLs (see other answers)
      , processing (file) { this.options.url = file.uploadURL }
      , async accept (file, done) {
          try {
            let res = await fetch('https://example.com/auth.php')
            // assumes the endpoint returns the signed URL as plain text
            file.uploadURL = await res.text()
            done()
          } catch (err) {
            done('Failed to get an S3 signed upload URL')
          }
        }
      }

The above is untested. I have added only the security token header and am not sure which other headers are needed (requests made with temporary credentials still have to be signed). Check here, here and here for the docs, and perhaps use FineUploader's implementation as a guide.

Hopefully this will help, and if anyone wants to add a pull request for S3 support (as is in FineUploader), I'm sure it will be appreciated.