I am training a classifier on tweets for sentiment analysis.

The code is the following:

df = pd.read_csv('Trainded Dataset Sentiment.csv', error_bad_lines=False)

df.head(5)

#TWEET

X = df[['SentimentText']].loc[2:50000]

#SENTIMENT LABEL

y = df[['Sentiment']].loc[2:50000]

#Apply Normalizer function over the tweets

X['Normalized Text'] = X.SentimentText.apply(text_normalization_sentiment)

X = X['Normalized Text']

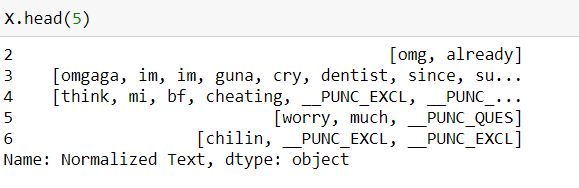

After normalization, the dataframe looks like:

X_train, X_test, y_train, y_test = sklearn.cross_validation.train_test_split(X, y, test_size=0.2, random_state=42)

#Classifier

vec = TfidfVectorizer(min_df=5, max_df=0.95, sublinear_tf=True,

use_idf=True, ngram_range=(1,2))

svm_clf = svm.LinearSVC(C=0.1)

vec_clf = Pipeline([('vectorizer', vec), ('pac', svm_clf)])

vec_clf.fit(X_train, y_train) #Problem

joblib.dump(vec_clf, 'svmClassifier.pk1', compress=3)

It fails with the following error:

AttributeError: 'list' object has no attribute 'lower'
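The message suggests that at least one document reaching the vectorizer is a list rather than a string. A minimal sketch for checking this, assuming the training data is a pandas Series; `X_sample` below is a hypothetical stand-in for `X_train`, not the actual data:

```python
import pandas as pd

# Hypothetical stand-in for X_train: some cells hold token lists, one holds a string
X_sample = pd.Series([["good", "day"], ["bad", "service"], "plain string"])

# Count the Python type of every cell; TfidfVectorizer expects str (or bytes) documents
type_counts = X_sample.map(lambda cell: type(cell).__name__).value_counts()
print(type_counts)
```

If `list` shows up in the counts, the normalization step is probably returning tokens instead of a single string per tweet.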

Full Traceback:

---------------------------------------------------------------------------

AttributeError Traceback (most recent call last)

<ipython-input-33-4264de810c2b> in <module>()

4 svm_clf = svm.LinearSVC(C=0.1)

5 vec_clf = Pipeline([('vectorizer', vec), ('pac', svm_clf)])

----> 6 vec_clf.fit(X_train, y_train)

7 joblib.dump(vec_clf, 'svmClassifier.pk1', compress=3)

C:\Users\Monviso\Anaconda3\lib\site-packages\sklearn\pipeline.py in fit(self, X, y, **fit_params)

255 This estimator

256 """

--> 257 Xt, fit_params = self._fit(X, y, **fit_params)

258 if self._final_estimator is not None:

259 self._final_estimator.fit(Xt, y, **fit_params)

C:\Users\Monviso\Anaconda3\lib\site-packages\sklearn\pipeline.py in _fit(self, X, y, **fit_params)

220 Xt, fitted_transformer = fit_transform_one_cached(

221 cloned_transformer, None, Xt, y,

--> 222 **fit_params_steps[name])

223 # Replace the transformer of the step with the fitted

224 # transformer. This is necessary when loading the transformer

C:\Users\Monviso\Anaconda3\lib\site-packages\sklearn\externals\joblib\memory.py in __call__(self, *args, **kwargs)

360

361 def __call__(self, *args, **kwargs):

--> 362 return self.func(*args, **kwargs)

363

364 def call_and_shelve(self, *args, **kwargs):

C:\Users\Monviso\Anaconda3\lib\site-packages\sklearn\pipeline.py in _fit_transform_one(transformer, weight, X, y, **fit_params)

587 **fit_params):

588 if hasattr(transformer, 'fit_transform'):

--> 589 res = transformer.fit_transform(X, y, **fit_params)

590 else:

591 res = transformer.fit(X, y, **fit_params).transform(X)

C:\Users\Monviso\Anaconda3\lib\site-packages\sklearn\feature_extraction\text.py in fit_transform(self, raw_documents, y)

1379 Tf-idf-weighted document-term matrix.

1380 """

-> 1381 X = super(TfidfVectorizer, self).fit_transform(raw_documents)

1382 self._tfidf.fit(X)

1383 # X is already a transformed view of raw_documents so

C:\Users\Monviso\Anaconda3\lib\site-packages\sklearn\feature_extraction\text.py in fit_transform(self, raw_documents, y)

867

868 vocabulary, X = self._count_vocab(raw_documents,

--> 869 self.fixed_vocabulary_)

870

871 if self.binary:

C:\Users\Monviso\Anaconda3\lib\site-packages\sklearn\feature_extraction\text.py in _count_vocab(self, raw_documents, fixed_vocab)

790 for doc in raw_documents:

791 feature_counter = {}

--> 792 for feature in analyze(doc):

793 try:

794 feature_idx = vocabulary[feature]

C:\Users\Monviso\Anaconda3\lib\site-packages\sklearn\feature_extraction\text.py in <lambda>(doc)

264

265 return lambda doc: self._word_ngrams(

--> 266 tokenize(preprocess(self.decode(doc))), stop_words)

267

268 else:

C:\Users\Monviso\Anaconda3\lib\site-packages\sklearn\feature_extraction\text.py in <lambda>(x)

230

231 if self.lowercase:

--> 232 return lambda x: strip_accents(x.lower())

233 else:

234 return strip_accents

AttributeError: 'list' object has no attribute 'lower'
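The last frame shows `x.lower()` being called on a whole document, so the error can be reproduced whenever a document is a list of tokens. The sketch below assumes that `text_normalization_sentiment` returns a token list per tweet (its source is not shown here, so this is a guess); `docs` and `identity` are hypothetical names used only for illustration. It shows two possible workarounds: joining the tokens back into strings, or telling the vectorizer that the input is already tokenized.

```python
from sklearn.feature_extraction.text import TfidfVectorizer

# Hypothetical normalized tweets: each document is a list of tokens
docs = [["good", "day", "today"], ["bad", "day", "today"], ["good", "service"]]

# Reproduce the failure: the default preprocessor lowercases each document
try:
    TfidfVectorizer().fit_transform(docs)
    raised = False
except AttributeError as exc:
    raised = True
    print(exc)

# Option 1: join tokens back into one string per document
joined = [" ".join(tokens) for tokens in docs]
X_joined = TfidfVectorizer(ngram_range=(1, 2)).fit_transform(joined)

# Option 2: keep the token lists and bypass preprocessing and tokenization
def identity(tokens):
    return tokens

vec = TfidfVectorizer(
    tokenizer=identity,
    preprocessor=identity,
    lowercase=False,
    token_pattern=None,  # unused when a tokenizer is supplied
    ngram_range=(1, 2),
)
X_tokens = vec.fit_transform(docs)
```

Option 1 keeps the rest of the pipeline unchanged. Option 2 avoids re-tokenizing, but a module-level function (rather than a lambda) is needed if the fitted pipeline is later saved with `joblib.dump`.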

Possibly the X['Normalized Text'] = X.SentimentText.apply(text_normalization_sentiment) line, but it is hard to tell without the full traceback. – Carloscarlota