I have spent the whole day searching for a solution and have checked out several threads about my problem.

But none of them helped me much. Basically, I want the camera preview to be fullscreen, but text should only be recognized in the center of the screen, inside a drawn rectangle.

Technologies I am using:

- Google Mobile Vision APIs for optical character recognition (OCR)
- Dependency: `play-services-vision`

My current state: I have created a `BoxDetector` class:

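```java
import android.graphics.Bitmap;
import android.graphics.BitmapFactory;
import android.graphics.ImageFormat;
import android.graphics.Rect;
import android.graphics.YuvImage;
import android.util.SparseArray;

import com.google.android.gms.vision.Detector;
import com.google.android.gms.vision.Frame;
import com.google.android.gms.vision.text.TextBlock;

import java.io.ByteArrayOutputStream;

public class BoxDetector extends Detector<TextBlock> {
    private final Detector<TextBlock> mDelegate;
    private final int mBoxWidth, mBoxHeight;

    public BoxDetector(Detector<TextBlock> delegate, int boxWidth, int boxHeight) {
        mDelegate = delegate;
        mBoxWidth = boxWidth;
        mBoxHeight = boxHeight;
    }

    @Override
    public SparseArray<TextBlock> detect(Frame frame) {
        int width = frame.getMetadata().getWidth();
        int height = frame.getMetadata().getHeight();

        // Centered crop rectangle. mBoxHeight is used for the horizontal extent and
        // mBoxWidth for the vertical one (presumably because the camera frame is
        // landscape while the preview is portrait).
        int right = (width / 2) + (mBoxHeight / 2);
        int left = (width / 2) - (mBoxHeight / 2);
        int bottom = (height / 2) + (mBoxWidth / 2);
        int top = (height / 2) - (mBoxWidth / 2);

        // Crop the NV21 camera buffer by JPEG-encoding only the rectangle, then decode it.
        YuvImage yuvImage = new YuvImage(frame.getGrayscaleImageData().array(), ImageFormat.NV21, width, height, null);
        ByteArrayOutputStream byteArrayOutputStream = new ByteArrayOutputStream();
        yuvImage.compressToJpeg(new Rect(left, top, right, bottom), 100, byteArrayOutputStream);
        byte[] jpegArray = byteArrayOutputStream.toByteArray();
        Bitmap bitmap = BitmapFactory.decodeByteArray(jpegArray, 0, jpegArray.length);

        // Wrap the cropped bitmap in a new Frame, keeping the original rotation.
        Frame croppedFrame = new Frame.Builder()
                .setBitmap(bitmap)
                .setRotation(frame.getMetadata().getRotation())
                .build();

        return mDelegate.detect(croppedFrame);
    }

    @Override
    public boolean isOperational() {
        return mDelegate.isOperational();
    }

    @Override
    public boolean setFocus(int id) {
        return mDelegate.setFocus(id);
    }

    @Override
    public void release() {
        // Release the wrapped detector's resources as well as this wrapper's.
        mDelegate.release();
        super.release();
    }

    @Override
    public void receiveFrame(Frame frame) {
        // Forwards the raw frame to the delegate detector.
        mDelegate.receiveFrame(frame);
    }
}
```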
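The crop rectangle in `detect()` is centered on the frame. As far as I know, `YuvImage.compressToJpeg` throws an `IllegalArgumentException` when the rectangle does not lie inside the image, which can happen if the box is larger than the camera frame in either direction (the camera may also pick a preview size other than the one requested). Below is a minimal pure-Java sketch of the same centering arithmetic with clamping added; `CropGeometry.centeredCrop` is a hypothetical helper, not part of Android or Mobile Vision, and the caller decides which box side maps to the frame's x axis.

```java
/**
 * Hypothetical helper (not part of Android or Mobile Vision): computes a crop
 * rectangle centered in a frame, clamped so it never leaves the frame.
 */
final class CropGeometry {
    private CropGeometry() {}

    /**
     * @param frameWidth  frame width in pixels (x axis)
     * @param frameHeight frame height in pixels (y axis)
     * @param cropWidth   desired crop extent along x
     * @param cropHeight  desired crop extent along y
     * @return {left, top, right, bottom}, in the order new Rect(...) expects
     */
    static int[] centeredCrop(int frameWidth, int frameHeight, int cropWidth, int cropHeight) {
        // Shrink the crop to the frame size so the rectangle stays inside the image.
        int w = Math.min(cropWidth, frameWidth);
        int h = Math.min(cropHeight, frameHeight);
        // Center it with equal margins (integer division rounds down).
        int left = (frameWidth - w) / 2;
        int top = (frameHeight - h) / 2;
        return new int[] {left, top, left + w, top + h};
    }
}
```

To mirror the posted code, one would call `CropGeometry.centeredCrop(width, height, mBoxHeight, mBoxWidth)` and build `new Rect(r[0], r[1], r[2], r[3])` from the result.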

Then I use an instance of this class as follows:

```java
final TextRecognizer textRecognizer = new TextRecognizer.Builder(App.getContext()).build();

// Wrap the TextRecognizer in the BoxDetector to limit the scan area
BoxDetector boxDetector = new BoxDetector(textRecognizer, width, height);

// Set the processor on the BoxDetector wrapper rather than on the TextRecognizer itself
boxDetector.setProcessor(new Detector.Processor<TextBlock>() {
    @Override
    public void release() {
    }

    /*
     * Collect the text of every detected TextBlock into a StringBuilder,
     * which is then set on the TextView.
     */
    @Override
    public void receiveDetections(Detector.Detections<TextBlock> detections) {
        final SparseArray<TextBlock> items = detections.getDetectedItems();
        if (items.size() != 0) {
            mTextView.post(new Runnable() {
                @Override
                public void run() {
                    StringBuilder stringBuilder = new StringBuilder();
                    for (int i = 0; i < items.size(); i++) {
                        TextBlock item = items.valueAt(i);
                        stringBuilder.append(item.getValue());
                        stringBuilder.append("\n");
                    }
                    mTextView.setText(stringBuilder.toString());
                }
            });
        }
    }
});

mCameraSource = new CameraSource.Builder(App.getContext(), boxDetector)
        .setFacing(CameraSource.CAMERA_FACING_BACK)
        .setRequestedPreviewSize(height, width)
        .setAutoFocusEnabled(true)
        .setRequestedFps(15.0f)
        .build();
```

When I run it, this exception is thrown:

```
Exception thrown from receiver.
java.lang.IllegalStateException: Detector processor must first be set with setProcessor in order to receive detection results.
    at com.google.android.gms.vision.Detector.receiveFrame(com.google.android.gms:play-services-vision-common@@19.0.0:17)
    at com.spectures.shopendings.Helpers.BoxDetector.receiveFrame(BoxDetector.java:62)
    at com.google.android.gms.vision.CameraSource$zzb.run(com.google.android.gms:play-services-vision-common@@19.0.0:47)
    at java.lang.Thread.run(Thread.java:919)
```
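The stack trace shows `Detector.receiveFrame` being called from `BoxDetector.receiveFrame`, i.e. on the delegate `TextRecognizer`, while `setProcessor` was only called on `boxDetector`. The plain-Java sketch below is a simplified, hypothetical model of that situation, not the Play Services source: `SimpleDetector`, `WordDetector`, `CroppingDetector` and `ForwardingCroppingDetector` are made-up names, and strings stand in for frames. It assumes, as the exception message suggests, that a detector's `receiveFrame` runs its own `detect` and hands the result to the processor registered on that same instance.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical model of a detector: receiveFrame() runs detect() and hands the
// result to the processor registered on *this* instance.
abstract class SimpleDetector {
    private Consumer<List<String>> processor;

    void setProcessor(Consumer<List<String>> processor) {
        this.processor = processor;
    }

    abstract List<String> detect(String frame);

    void receiveFrame(String frame) {
        List<String> results = detect(frame);
        if (processor == null) {
            throw new IllegalStateException("Detector processor must first be set with setProcessor in order to receive detection results.");
        }
        processor.accept(results);
    }
}

// Stand-in for TextRecognizer: "recognizes" each whitespace-separated word.
class WordDetector extends SimpleDetector {
    @Override
    List<String> detect(String frame) {
        List<String> words = new ArrayList<>();
        for (String w : frame.trim().split("\\s+")) {
            if (!w.isEmpty()) {
                words.add(w);
            }
        }
        return words;
    }
}

// Stand-in for BoxDetector: "crops" the frame to its middle third, then delegates detect().
// It does not override receiveFrame(), so the inherited one uses this wrapper's
// detect() and this wrapper's processor.
class CroppingDetector extends SimpleDetector {
    final SimpleDetector delegate;

    CroppingDetector(SimpleDetector delegate) {
        this.delegate = delegate;
    }

    @Override
    List<String> detect(String frame) {
        int third = frame.length() / 3;
        return delegate.detect(frame.substring(third, frame.length() - third));
    }
}

// Mirrors the posted BoxDetector, which forwards receiveFrame() to the delegate.
class ForwardingCroppingDetector extends CroppingDetector {
    ForwardingCroppingDetector(SimpleDetector delegate) {
        super(delegate);
    }

    @Override
    void receiveFrame(String frame) {
        // The delegate has no processor of its own, so this throws.
        delegate.receiveFrame(frame);
    }
}
```

Under that assumption, removing the `receiveFrame` override from `BoxDetector` would let the inherited implementation call the cropping `detect()` and the wrapper's processor. Registering the processor on the `TextRecognizer` instead would avoid the exception in the model, but would also skip the crop, because the delegate's `receiveFrame` runs its own `detect` on the full frame.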

If anyone has a clue what my mistake is, or knows an alternative approach, I would really appreciate it. Thank you!

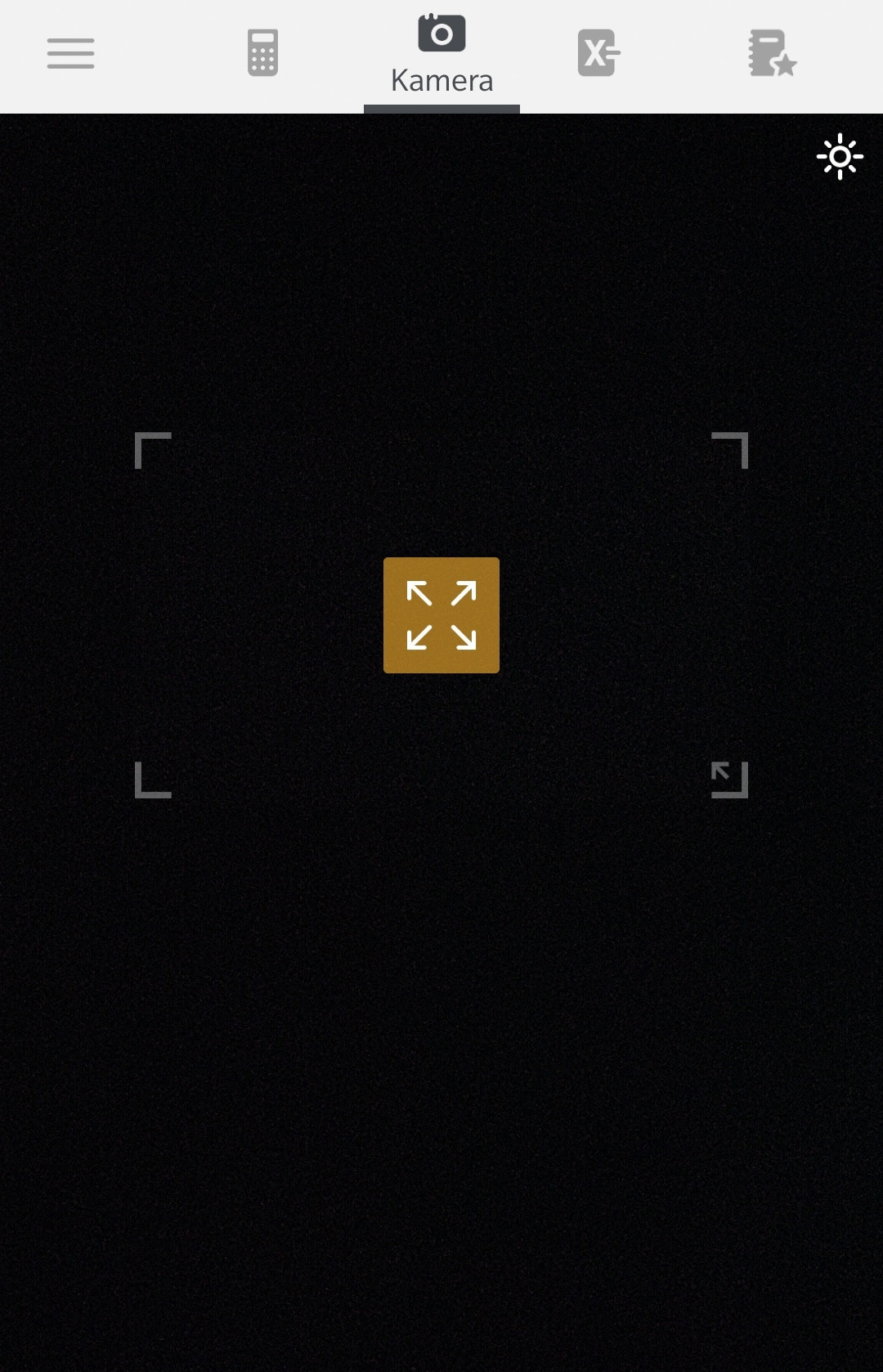

This is what I want to achieve, a Rect. Text area scanner:
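One alternative to cropping: register the processor directly on the `TextRecognizer` and keep only the `TextBlock`s whose `getBoundingBox()` lies inside the rectangle. The sketch below models just that filtering step in plain Java; `DetectedBlock` and `RegionFilter.textInside` are hypothetical stand-ins (in the app, the text and box would come from `TextBlock.getValue()` and `TextBlock.getBoundingBox()`). It assumes the rectangle has already been converted from screen coordinates to camera-frame coordinates, which depends on preview scaling and rotation and is not shown here.

```java
import java.util.List;

/** Hypothetical stand-in for a detected TextBlock: its text plus its bounding box. */
final class DetectedBlock {
    final String text;
    final int left, top, right, bottom;

    DetectedBlock(String text, int left, int top, int right, int bottom) {
        this.text = text;
        this.left = left;
        this.top = top;
        this.right = right;
        this.bottom = bottom;
    }
}

/** Hypothetical helper: keeps only blocks lying entirely inside a region. */
final class RegionFilter {
    private RegionFilter() {}

    /** Joins the text of every block fully inside the region, one block per line. */
    static String textInside(List<DetectedBlock> blocks, int left, int top, int right, int bottom) {
        StringBuilder stringBuilder = new StringBuilder();
        for (DetectedBlock b : blocks) {
            boolean inside = b.left >= left && b.top >= top
                    && b.right <= right && b.bottom <= bottom;
            if (inside) {
                stringBuilder.append(b.text).append("\n");
            }
        }
        return stringBuilder.toString();
    }
}
```

The trade-off is that recognition still runs on the whole frame, so each frame costs more than with cropping, and a block that only partly overlaps the rectangle is dropped rather than clipped.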