I'd use a SparkListener, which can intercept onTaskEnd or onStageCompleted events and gives you access to task metrics.

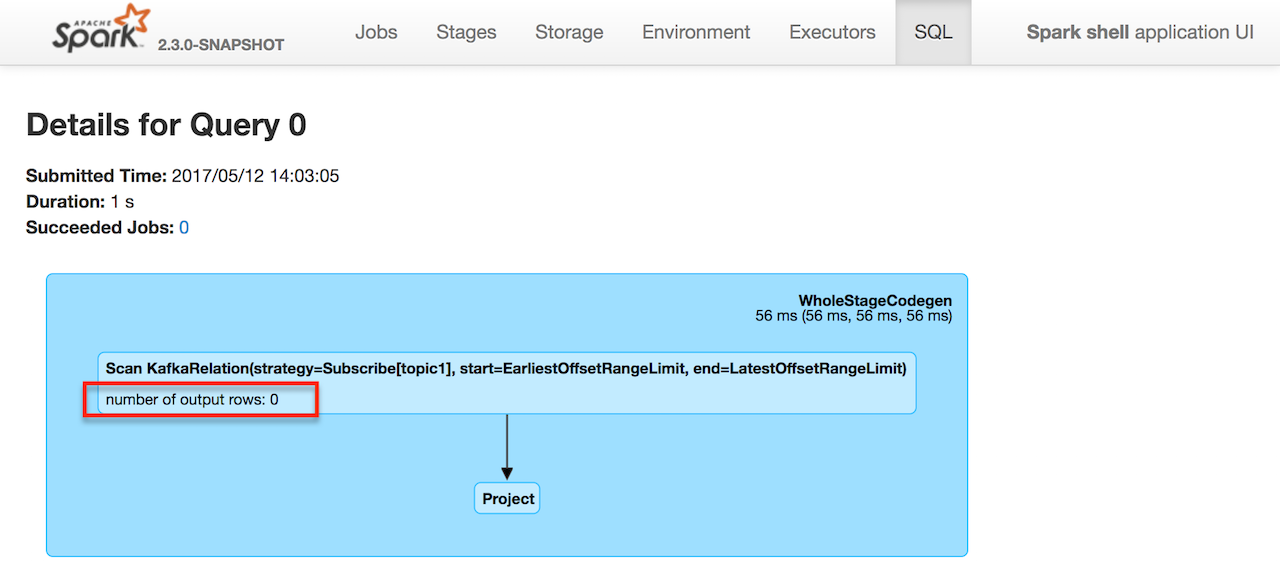

Task metrics give you the accumulators Spark uses to display metrics in the SQL tab of the web UI (in Details for Query).

![web UI / Details for Query]()
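A minimal sketch of the listener approach, assuming the write populates the task-level output metrics (whether it does depends on the Spark version and the sink). The class name `RecordsWrittenListener`, the output path, and the local master are made up for illustration:

```scala
import java.util.concurrent.atomic.AtomicLong

import org.apache.spark.scheduler.{SparkListener, SparkListenerTaskEnd}
import org.apache.spark.sql.SparkSession

// Hypothetical helper: sums the records written by every finished task.
class RecordsWrittenListener extends SparkListener {
  val recordsWritten = new AtomicLong(0L)

  override def onTaskEnd(taskEnd: SparkListenerTaskEnd): Unit = {
    // taskMetrics may be null (e.g. for some failed tasks), hence the Option
    Option(taskEnd.taskMetrics).foreach { m =>
      recordsWritten.addAndGet(m.outputMetrics.recordsWritten)
    }
  }
}

object RecordsWrittenExample {
  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .master("local[*]")            // assumption: local run for the demo
      .appName("records-written")
      .getOrCreate()

    val listener = new RecordsWrittenListener
    spark.sparkContext.addSparkListener(listener)

    // Assumed demo path; any writable location works.
    spark.range(10).write.mode("overwrite").csv("/tmp/records-written-demo")

    // Listener events are delivered asynchronously, so read the counter
    // only after stopping the session, which drains the listener bus.
    spark.stop()
    println(s"records written: ${listener.recordsWritten.get}")
  }
}
```

The counter is cumulative across all jobs seen by the listener, so reset it (or register a fresh listener) per query if you need per-query numbers.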

For example, the following query:

    spark.
      read.
      option("header", true).
      csv("../datasets/people.csv").
      limit(10).
      write.
      csv("people")

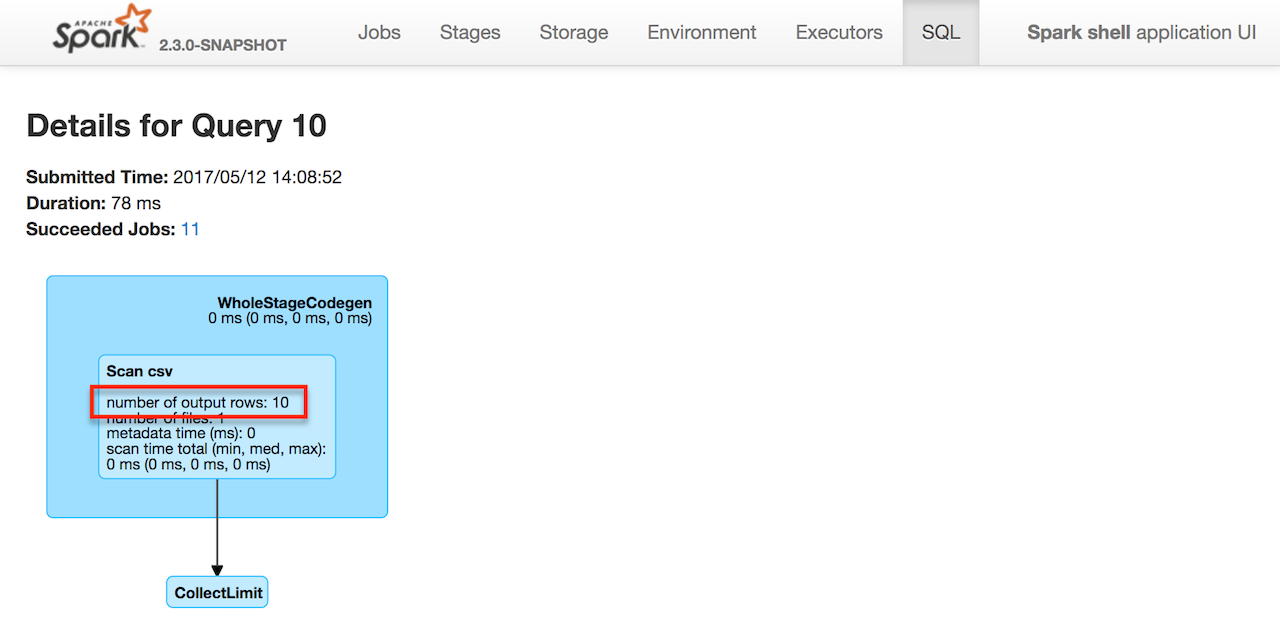

gives exactly 10 output rows so Spark knows it (and you could too).


You could also explore Spark SQL's QueryExecutionListener:

> The interface of query execution listener that can be used to analyze execution metrics.

You can register a QueryExecutionListener using ExecutionListenerManager that's available as spark.listenerManager.

    scala> :type spark.listenerManager
    org.apache.spark.sql.util.ExecutionListenerManager

    scala> spark.listenerManager.
    clear   clone   register   unregister

I think it's closer to the "bare metal", but I haven't used it before.
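A rough sketch of what registering such a listener could look like. The class name `MetricsPrinter` and the demo path are hypothetical, and the sketch only prints the metrics of the top physical operator. Which operator actually carries numOutputRows varies between Spark versions, so treat this as a starting point:

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.execution.QueryExecution
import org.apache.spark.sql.util.QueryExecutionListener

// Hypothetical listener: prints the SQL metrics of the top physical operator.
class MetricsPrinter extends QueryExecutionListener {
  override def onSuccess(funcName: String, qe: QueryExecution, durationNs: Long): Unit = {
    println(s"$funcName finished in ${durationNs / 1000000} ms")
    qe.executedPlan.metrics.foreach { case (name, metric) =>
      println(s"  $name = ${metric.value}")
    }
  }

  override def onFailure(funcName: String, qe: QueryExecution, exception: Exception): Unit =
    println(s"$funcName failed: ${exception.getMessage}")
}

object MetricsPrinterExample {
  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .master("local[*]")            // assumption: local run for the demo
      .appName("metrics-printer")
      .getOrCreate()

    val listener = new MetricsPrinter
    spark.listenerManager.register(listener)

    // Assumed demo path; any writable location works.
    spark.range(10).write.mode("overwrite").csv("/tmp/metrics-printer-demo")

    // Depending on the Spark version the callback may fire asynchronously,
    // so stop the session (which drains pending events) before exiting.
    spark.stop()
  }
}
```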

@D3V (in the comments section) mentioned accessing the numOutputRows SQL metric using the QueryExecution of a structured query. Something worth considering.

    scala> :type q
    org.apache.spark.sql.DataFrame

    scala> :type q.queryExecution.executedPlan.metrics
    Map[String,org.apache.spark.sql.execution.metric.SQLMetric]

    q.queryExecution.executedPlan.metrics("numOutputRows").value
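The direct lookup above throws if the top operator has no numOutputRows metric, so a slightly more defensive variant could walk the plan instead. This is only a sketch: `PlanMetrics` is a made-up helper, it only follows regular child links (so operators hidden behind wrappers such as adaptive execution may not be visited), and the metrics are only meaningful after an action has run:

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.execution.SparkPlan

object PlanMetrics {
  // Hypothetical helper: (operator name, numOutputRows) for every operator
  // reachable through children that exposes that metric.
  def numOutputRows(plan: SparkPlan): Seq[(String, Long)] =
    plan.collect {
      case p if p.metrics.contains("numOutputRows") =>
        p.nodeName -> p.metrics("numOutputRows").value
    }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .master("local[*]")            // assumption: local run for the demo
      .appName("plan-metrics")
      .getOrCreate()

    val q = spark.range(10).toDF()
    q.collect() // metrics stay at their initial values until an action has run

    numOutputRows(q.queryExecution.executedPlan).foreach { case (node, rows) =>
      println(s"$node: $rows")
    }
    spark.stop()
  }
}
```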

SparkListener with SQL? I've done some crude testing before, and it didn't capture SQL write metrics. ExecutionListenerManager is the way to go, and has a nice example in the tests. – Throes