My Question: To what extent does RAII substitute other design patterns like Garbage Collection? I am assuming that manual memory management is not used to represent shared ownership in the system.

I'm not sure about calling this a design pattern, but in my equally strong opinion, and speaking only of memory resources, RAII tackles almost all the problems that GC can solve while introducing fewer of its own.

Is shared ownership of objects a sign of bad design?

I share the thought that shared ownership is far, far from ideal in most cases, because the high-level design doesn't necessarily call for it. About the only time I've found it unavoidable is during the implementation of persistent data structures, where it's at least internalized as an implementation detail.

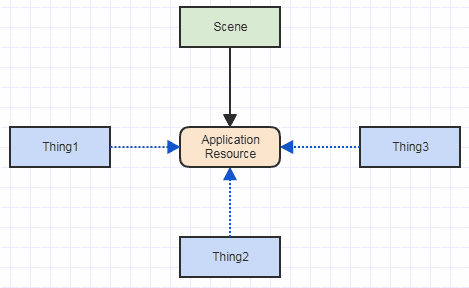

The biggest problem I find with GC, or just shared ownership in general, is that it doesn't free the developer from any responsibilities when it comes to application resources, but it can give the illusion of doing so. Suppose we have a case like this, where Scene is the sole logical owner of the resource but other things hold a reference/pointer to it (e.g., a camera storing a user-defined list of scene objects to exclude from rendering):

![enter image description here]()

And let's say the application resource is something like an image, and its lifetime is tied to user input (e.g., the image should be freed when the user requests to close a document containing it). Then the work to properly free the resource is the same with or without GC.

Without GC, we might remove it from a scene list and allow its destructor to be invoked, while triggering an event to allow Thing1, Thing2, and Thing3 to set their pointers to it to null or remove them from a list so that they don't have dangling pointers.

With GC, it's basically the same thing. We remove the resource from the scene list while triggering an event to allow Thing1, Thing2, and Thing3 to set their references to null or remove them from a list so that the garbage collector can collect it.

The Silent Programmer Mistake Which Flies Under the Radar

The difference in this scenario is what happens when a programmer mistake occurs, like Thing2 failing to handle the removal event. If Thing2 stores a pointer, it now has a dangling pointer and we might have a crash. That's catastrophic, but it's something we might easily catch in our unit and integration tests, or at least something QA or testers will catch rather quickly. I don't work in a mission-critical or security-critical context, so if the crashy code managed to ship somehow, it's still not so bad if we can get a bug report, reproduce it, and fix it rather quickly.

If Thing2 stores a strong reference and shares ownership, we have a very silent logical leak, and the image won't be freed until Thing2 is destroyed (which might not happen until shutdown). In my domain, this silent nature of the mistake is very problematic, since it can go quietly unnoticed even after shipping, until users start to notice that working in the application for an hour causes it to take gigabytes of memory and slow down until they restart it. And at that point, we might have accumulated a large number of these issues, since it's so easy for them to fly under the radar like a stealth fighter bug, and there's nothing I dislike more than stealth fighter bugs.

![enter image description here]()
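To make the silent leak concrete, here's a minimal C++ sketch using `std::shared_ptr` as the stand-in for a strong (GC-like) reference. The `Image`, `Scene`, and `Thing2` types and the `image_leaks_after_removal` function are made up for illustration, and the "removal event" is reduced to clearing the scene's list. Thing2 never reacts to the removal, yet nothing crashes and nothing complains; the image simply stays alive.

```cpp
#include <cassert>
#include <memory>
#include <vector>

// Hypothetical stand-in for a heavy application resource.
struct Image {
    explicit Image(bool& freed_flag) : freed(freed_flag) { freed = false; }
    ~Image() { freed = true; }  // records that the resource was actually released
    bool& freed;
};

// Hypothetical scene: the sole *logical* owner of its images.
struct Scene {
    std::vector<std::shared_ptr<Image>> images;
};

// Hypothetical observer that should only refer to the image, not own it.
struct Thing2 {
    std::shared_ptr<Image> excluded;  // strong reference: silently shares ownership
    // The mistake being illustrated: nothing here handles the removal event.
};

// Returns true if the image outlives its removal from the scene.
bool image_leaks_after_removal() {
    bool freed = false;
    Scene scene;
    Thing2 thing2;
    scene.images.push_back(std::make_shared<Image>(freed));
    thing2.excluded = scene.images.back();

    scene.images.clear();         // the user closes the document
    const bool leaked = !freed;   // no crash, no warning: the image is still alive

    thing2.excluded.reset();      // only now does the destructor run
    assert(freed);
    return leaked;
}
```

Calling `image_leaks_after_removal()` returns `true`: the resource survives until Thing2 lets go of it, which in a real application might be at shutdown.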

And it's due to that silent nature that I tend to dislike shared ownership with a passion, and TBH I never understood why GC is so popular (might be my particular domain -- I'm admittedly very ignorant of ones that are mission-critical, e.g.), to the point where I'm hungry for new languages without GC. I have found investigating such leaks related to shared ownership to be very time-consuming, sometimes spending hours only to find the leak was caused by source code outside of our control (a third-party plugin).

Weak References

Weak references are conceptually ideal to me for Thing1, Thing2, and Thing3. They would allow those objects to detect after the fact that the resource has been destroyed, without extending its lifetime. Perhaps we could guarantee a crash in those cases, or some of them might even be able to deal with it gracefully. The problem to me is that weak references are convertible to strong references and vice versa, so among the internal and third-party developers out there, someone could still carelessly end up storing a strong reference in Thing2 even though a weak reference would have been far more appropriate.
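As a rough sketch of what that looks like in C++, here is a hypothetical `Thing2` holding a `std::weak_ptr`. The `Image` type, the `excluded_width` helper, and the demo function are illustrative names, not anything from a real SDK. Note that `lock()` hands back a `std::shared_ptr`, which is exactly the conversion that lets a careless developer store a strong reference as a member instead of keeping it local.

```cpp
#include <cassert>
#include <memory>

struct Image { int width = 0; };  // hypothetical resource

struct Thing2 {
    std::weak_ptr<Image> excluded;  // observes the image without extending its lifetime

    // Returns the image width, or -1 if the image has already been destroyed.
    int excluded_width() const {
        // lock() yields a temporary strong reference; keeping it in a local
        // (rather than a member) is what keeps the lifetime extension short.
        if (auto img = excluded.lock()) return img->width;
        return -1;
    }
};

// Returns true if Thing2 sees the image while it lives and notices once it is gone.
bool weak_reference_detects_destruction() {
    auto owner = std::make_shared<Image>();  // the sole logical owner
    owner->width = 640;

    Thing2 thing2;
    thing2.excluded = owner;
    const bool seen_alive = thing2.excluded_width() == 640;

    owner.reset();                           // the owner destroys the image
    assert(thing2.excluded.expired());       // the weak reference did not keep it alive
    const bool seen_gone = thing2.excluded_width() == -1;

    return seen_alive && seen_gone;
}
```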

I did try in the past to encourage the use of weak references as much as possible among the internal team, and to document in the SDK that they should be used. Unfortunately, it was difficult to promote the practice among such a wide and mixed group of people, and we still ended up with our share of logical leaks.

The ease with which anyone, at any given time, can extend the lifetime of an object far longer than appropriate by simply storing a strong reference to it in their object starts to become a very frightening prospect when looking down at a huge codebase that's leaking massive resources. I often wish that a very explicit syntax was required to store any kind of strong reference as a member of an object, one which would at least lead a developer to think twice about doing it needlessly.

Explicit Destruction

So I tend to favor explicit destruction for persistent application resources, like so:

    on_removal_event:
        // This is ideal to me, not trying to release a bunch of strong
        // references and hoping things get implicitly destroyed.
        destroy(app_resource);

... since we can count on it to free the resource. We can't be completely assured that something out there in the system won't end up having a dangling pointer or weak reference, but at least those issues tend to be easy to detect and reproduce in testing. They don't go unnoticed for ages and accumulate.

The one tricky case has always been multithreading for me. In those cases, what I have found useful instead of full-blown garbage collection or, say, shared_ptr, is to simply defer destruction somehow:

    on_removal_event:
        // *May* be deferred until threads are finished processing the resource.
        destroy(app_resource);

In some systems where the persistent threads are unified in such a way that they have a processing event, we can mark the resource to be destroyed in a deferred fashion, in a time slice when threads are not processing (it almost starts to feel like stop-the-world GC, but we're keeping explicit destruction). In other cases, we might use, say, reference counting but in a way that avoids shared_ptr: a resource's reference count starts at zero, and the resource will be destroyed using that explicit syntax above unless a thread locally extends its lifetime by incrementing the counter temporarily (e.g., by using a scoped resource in a local thread function).
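Here's one way the zero-based counting idea could be sketched in C++. This is my own illustration under stated assumptions, not a definitive implementation: `AppResource`, `ScopedUse`, and the demo functions are hypothetical names, a mutex is used instead of atomics for simplicity, and it assumes a thread acquires its `ScopedUse` before the removal event can run (for example, under whatever lock guards the scene list, which is not shown).

```cpp
#include <cassert>
#include <mutex>

// Hypothetical resource with an intrusive use count that starts at zero.
class AppResource {
public:
    explicit AppResource(bool& destroyed_flag) : destroyed_(destroyed_flag) { destroyed_ = false; }
    friend void destroy(AppResource* res);
    friend class ScopedUse;
private:
    ~AppResource() { destroyed_ = true; }  // only destroy() and ScopedUse may delete
    std::mutex mutex_;
    int users_ = 0;
    bool destroy_requested_ = false;
    bool& destroyed_;
};

// Temporarily extends the lifetime for the duration of a local scope only.
class ScopedUse {
public:
    explicit ScopedUse(AppResource* res) : res_(res) {
        std::lock_guard<std::mutex> lock(res_->mutex_);
        ++res_->users_;
    }
    ~ScopedUse() {
        bool delete_now = false;
        {
            std::lock_guard<std::mutex> lock(res_->mutex_);
            delete_now = (--res_->users_ == 0) && res_->destroy_requested_;
        }
        if (delete_now) delete res_;  // the deferred destruction happens here
    }
    ScopedUse(const ScopedUse&) = delete;
    ScopedUse& operator=(const ScopedUse&) = delete;
private:
    AppResource* res_;
};

// Explicit destruction: immediate when idle, deferred while a thread is using it.
void destroy(AppResource* res) {
    bool delete_now = false;
    {
        std::lock_guard<std::mutex> lock(res->mutex_);
        res->destroy_requested_ = true;
        delete_now = (res->users_ == 0);
    }
    if (delete_now) delete res;
}

bool destruction_is_immediate_when_idle() {
    bool destroyed = false;
    destroy(new AppResource(destroyed));
    return destroyed;
}

bool destruction_is_deferred_while_in_use() {
    bool destroyed = false;
    AppResource* res = new AppResource(destroyed);
    bool alive_while_used = false;
    {
        ScopedUse use(res);              // e.g. inside a worker's local function
        destroy(res);                    // removal event fires mid-processing
        alive_while_used = !destroyed;   // still alive: destruction was deferred
    }                                    // last user leaves: destroyed here
    assert(destroyed);
    return alive_while_used;
}
```

Because `ScopedUse` is non-copyable and meant to live on the stack, there is no handle that anyone could tuck away as a member to prolong the resource indefinitely.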

As roundabout as that seems, it avoids exposing GC references or shared_ptr to the outside world which can easily tempt some developers (internally on your team or a third party developer) to store strong references (shared_ptr, e.g.) as a member of an object like Thing2 and thereby extend a resource's lifetime inadvertently, and possibly for far, far longer than appropriate (possibly all the way until application shutdown).

RAII

Meanwhile, RAII automatically eliminates physical leaks just as well as GC, and furthermore it works for resources other than memory. We can use it for a scoped mutex, for a file which automatically closes on destruction, and even to automatically reverse external side effects through scope guards.
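For a small illustration of the non-memory side, here's a C++ sketch combining the standard `std::lock_guard` with a hand-rolled scope guard. The `ScopeGuard` class, `try_append`, and `rollback_demo` are hypothetical names written for this example; real codebases often have their own guard utility.

```cpp
#include <cassert>
#include <functional>
#include <mutex>
#include <utility>
#include <vector>

// Minimal hypothetical scope guard: runs the rollback on scope exit unless dismissed.
class ScopeGuard {
public:
    explicit ScopeGuard(std::function<void()> rollback) : rollback_(std::move(rollback)) {}
    ~ScopeGuard() { if (rollback_) rollback_(); }
    void dismiss() { rollback_ = nullptr; }
    ScopeGuard(const ScopeGuard&) = delete;
    ScopeGuard& operator=(const ScopeGuard&) = delete;
private:
    std::function<void()> rollback_;
};

// Appends `id` to a shared list under a lock; the append is undone unless `commit` is true.
bool try_append(std::vector<int>& ids, std::mutex& ids_mutex, int id, bool commit) {
    std::lock_guard<std::mutex> lock(ids_mutex);   // unlocked on every exit path
    ids.push_back(id);                             // the side effect
    ScopeGuard undo([&ids] { ids.pop_back(); });   // reversed unless dismissed
    if (!commit) return false;                     // early exit: guard rolls back, then the lock releases
    undo.dismiss();
    return true;
}

bool rollback_demo() {
    std::vector<int> ids;
    std::mutex ids_mutex;
    const bool kept = try_append(ids, ids_mutex, 1, true);
    const bool rolled_back = !try_append(ids, ids_mutex, 2, false);
    assert(ids.size() == 1);                       // only the committed id remains
    return kept && rolled_back && ids[0] == 1;
}
```

The guard is declared after the lock, so it is destroyed first and the rollback still runs while the mutex is held.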

So if given the choice and I had to pick one, it's easily RAII for me. I work in a domain where those silent memory leaks caused by shared ownership are absolutely killer, and a dangling pointer crash is actually preferable if it's caught early during testing (and it likely will be). Even in the really obscure event that it's caught late, if it manifests itself in a crash close to the site where the mistake occurred, that's still preferable to using memory profiling tools and trying to figure out who forgot to release a reference while wading through millions of lines of code. In my very blunt opinion, GC introduces more problems than it solves for my particular domain (VFX, which is somewhat similar to games in terms of scene organization and application state). One of the reasons, besides those very silent shared ownership leaks, is that it can give developers the false impression that they don't have to think about resource management and ownership of persistent application resources, while they inadvertently cause logical leaks left and right.

"When does RAII fail"

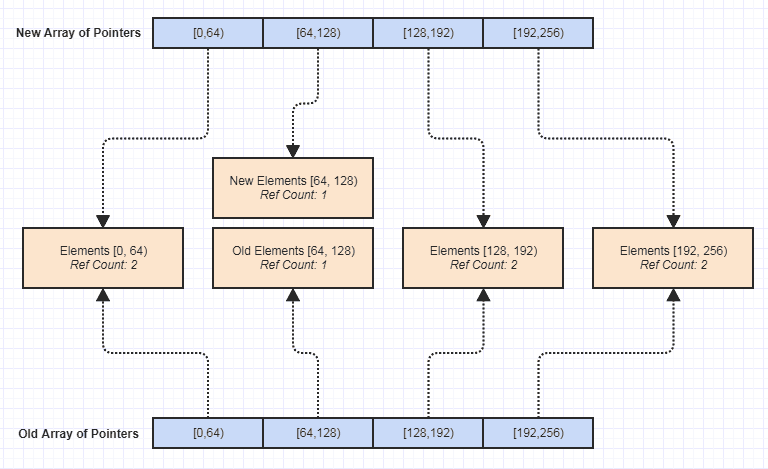

The only case I've ever encountered in my whole career where I couldn't think of any possible way to avoid shared ownership of some sort is when I implemented a library of persistent data structures, like so:

![enter image description here]()

I used it to implement an immutable mesh data structure which can have portions modified without being made unique, like so (test with 4 million quadrangles):

![enter image description here]()

Every single frame, a new mesh is being created as the user drags over it and sculpts it. The difference is that the new mesh is strong referencing parts not made unique by the brush so that we don't have to copy all the vertices, all the polygons, all the edges, etc. The immutable version trivializes thread safety, exception safety, non-destructive editing, undo systems, instancing, etc.

In this case the whole concept of the immutable data structure revolves around shared ownership to avoid duplicating data that wasn't made unique. It's a genuine case where we cannot avoid shared ownership no matter what (at least I can't think of any possible way).
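To show why shared ownership is baked into this kind of structure, here's a much-simplified C++ sketch: a persistent array of floats stored in fixed-size chunks, where a modified version copies only the chunk it touches and shares the rest. This is not my actual mesh code; `PersistentArray`, `kChunkSize`, and the demo function are hypothetical, and a real mesh would share vertices, polygons, and edges in a similar way.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <utility>
#include <vector>

constexpr std::size_t kChunkSize = 4;  // tiny on purpose, for illustration

// Hypothetical persistent (immutable) array with structural sharing.
class PersistentArray {
    using Chunk = std::vector<float>;
public:
    explicit PersistentArray(std::size_t size, float value = 0.0f) {
        const std::size_t num_chunks = (size + kChunkSize - 1) / kChunkSize;
        for (std::size_t c = 0; c < num_chunks; ++c)
            chunks_.push_back(std::make_shared<const Chunk>(kChunkSize, value));
    }

    float get(std::size_t i) const { return (*chunks_[i / kChunkSize])[i % kChunkSize]; }

    // Returns a new version; the original is left untouched.
    PersistentArray set(std::size_t i, float value) const {
        PersistentArray next(*this);  // copies chunk pointers only: every chunk is shared
        auto fresh = std::make_shared<Chunk>(*chunks_[i / kChunkSize]);  // deep-copy one chunk
        (*fresh)[i % kChunkSize] = value;
        next.chunks_[i / kChunkSize] = std::move(fresh);
        return next;
    }

    bool shares_chunk_with(const PersistentArray& other, std::size_t chunk) const {
        return chunks_[chunk] == other.chunks_[chunk];
    }

private:
    std::vector<std::shared_ptr<const Chunk>> chunks_;  // shared ownership is unavoidable here
};

bool structural_sharing_demo() {
    const PersistentArray v1(8, 1.0f);
    const PersistentArray v2 = v1.set(5, 9.0f);  // "brush" touches element 5 only
    assert(v1.get(5) == 1.0f);                   // old version is unchanged
    assert(v2.get(5) == 9.0f);
    return v1.shares_chunk_with(v2, 0)           // untouched chunk is shared
        && !v1.shares_chunk_with(v2, 1);         // touched chunk was made unique
}
```

Neither version is "the" owner of an untouched chunk, so the chunk has to live until the last version referencing it goes away. The sharing stays internal to the data structure, though, which is what keeps it an implementation detail.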

That's about the only case where we might need GC or reference counting that I've encountered. Others might have encountered some of their own, but from my experience, very, very few cases genuinely need shared ownership at the design level.