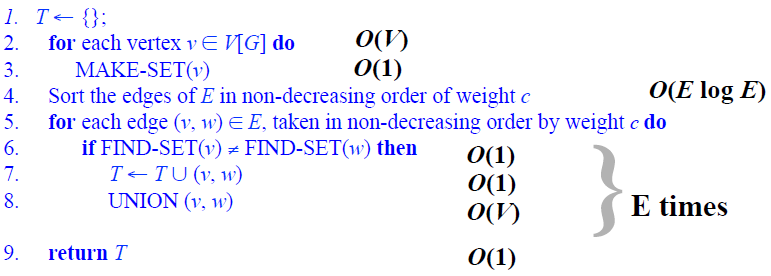

In a class for analysis of algorithms, we are presented with this pseudocode for Kruskal's algorithm:

He then states the following, for disjoint-set forests:

A sequence of m MAKE-SET, UNION, and FIND-SET operations, n of which are MAKE-SET operations, can be performed on a disjoint-set forest with union by rank and path compression in worst-case time O(m α(n)).

Used to compute the complexity of Step 2, and steps 5-8

For connected G: |E| ≥ |V| -1; m = O(V + E), n = O(V);

So Steps 2, 5-8: O((V + E) α(V)) = O(E α(V))

α(V) = O(lg V) = O(lg E); so we obtain O(E lg E) ----- // how is α(V) equal here?

Kruskal: Steps 3, 5-8, and step 4: O(E lg E)

Observe: |E| < |V|2 -> lg E = O(lg V)

So, Kruskal complexity: O(E lg V)

I have attempted to understand the logic behind this "alpha(n)"/"α(n)" function, and from what I've read it seems that, simplistically, the Ackermann function is one that grows exponentially incredibly fast, and the inverse is one that grows logarithmically incredibly slowly.

If my interpretation is correct, what does "α(n)" represent? Does it mean that MAKE-SET operations are at most O(lg n)? How/Why is using inverse-Ackermann necessary? I was under the impression this operation is performed V times (for each vertex). Following this, α(V) is also simplified to O(lg V) = O(lg E), does this mean that, at a maximum, α(V) may be represented by O(lg V)?

Also, why is the |E| < |V|^2 -> lg E = O(lg V) statement made, how is it known that that |E| < |V|^2?

I think my question really boils down to, why is it that a "forest" representation of disjoint sets seems to be more efficient than those implemented with linked lists when my lecturer states they are both O(E log V)? Therefore is there a point in the increased difficulty of implementing disjoint sets with forests?