I am trying to characterize timer jitter on Linux. My task was to run 100ms timers and see how the numbers work out.

I'm working on a multicore machine. I ran a standard user program using setitimer(), then the same program as root, then with processor affinity, and finally with both processor affinity and process priority. I then repeated those runs on the PREEMPT_RT kernel, and also ran the examples that use clock_nanosleep(), as in the demo code on the PREEMPT_RT page. Timer performance was very similar across all the runs, with no real difference despite the changes.
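For reference, the clock_nanosleep() variant boils down to sleeping until successive absolute deadlines. The following is only a minimal sketch of that pattern, not the PREEMPT_RT demo code itself; `run_periodic` and `timespec_add_ns` are hypothetical helper names, and the choice of CLOCK_MONOTONIC is an assumption.

```c
#define _POSIX_C_SOURCE 200112L
#include <errno.h>
#include <time.h>

#define NS_PER_SEC 1000000000L

/* Advance an absolute timespec by ns nanoseconds (assumes ns < 1 s). */
static void timespec_add_ns(struct timespec *t, long ns)
{
    t->tv_nsec += ns;
    if (t->tv_nsec >= NS_PER_SEC) {
        t->tv_nsec -= NS_PER_SEC;
        t->tv_sec += 1;
    }
}

/*
 * Hypothetical helper: sleep until n absolute deadlines spaced period_ns
 * apart and store how late each wakeup was, in nanoseconds.
 * Returns 0 on success, -1 on error.
 */
static int run_periodic(long period_ns, int n, long long *late_ns)
{
    struct timespec next, now;

    if (clock_gettime(CLOCK_MONOTONIC, &next) != 0)
        return -1;

    for (int k = 0; k < n; k++) {
        int rc;

        /* Deadlines are derived from the previous deadline, not from the
         * wakeup time, so one late wakeup does not shift the later ones. */
        timespec_add_ns(&next, period_ns);

        do {
            rc = clock_nanosleep(CLOCK_MONOTONIC, TIMER_ABSTIME, &next, NULL);
        } while (rc == EINTR);   /* retry if a signal interrupted the sleep */
        if (rc != 0)
            return -1;

        if (clock_gettime(CLOCK_MONOTONIC, &now) != 0)
            return -1;
        late_ns[k] = (long long)(now.tv_sec - next.tv_sec) * NS_PER_SEC
                   + (now.tv_nsec - next.tv_nsec);
    }
    return 0;
}
```

Calling `run_periodic(100000000L, 300, late)` with a 300-element array would correspond to one 30s run at 100ms. Measured this way, the lateness is never negative, because an absolute sleep does not return before its deadline.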

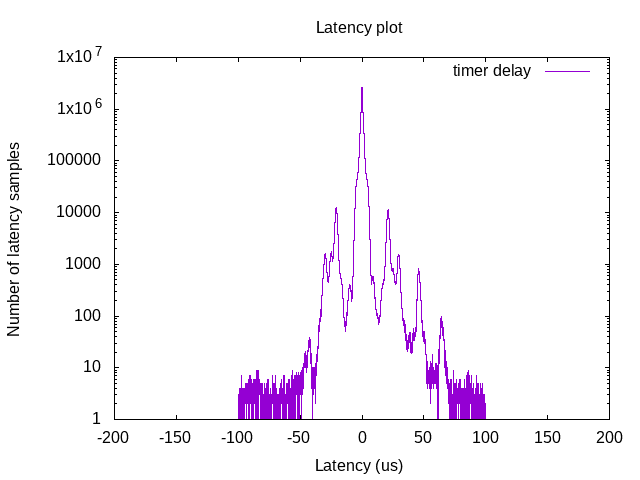

Our end goal is a steady timer. The best worst-case I could get regularly was about 200us. The histogram for all cases shows really odd behavior. For one, I wouldn't expect timers to fire early, but they do (edit: okay, they don't, but they appear to). And as you can see in the histogram, I get troughs on either side of 0 offset. These show up as three bands in the second graph. In the first graph, the X axis is in microseconds. In the second graph, the Y axis is in microseconds.
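The apparent early firing follows from measuring the interval between consecutive handler calls: when one call is late, the next interval comes out short even if that next call is on time. A small sketch of the arithmetic, where `summarize_intervals` and `struct jitter_stats` are hypothetical names introduced only for illustration:

```c
#include <stddef.h>

/* Summary of interval offsets relative to the nominal period (microseconds). */
struct jitter_stats {
    long long min_off_us;  /* most negative offset seen */
    long long max_off_us;  /* most positive offset seen */
    size_t early;          /* intervals shorter than the period */
    size_t late;           /* intervals longer than the period */
};

/*
 * Given n handler timestamps in microseconds, compute the offset of each
 * consecutive interval from period_us and summarize the result.
 */
static struct jitter_stats summarize_intervals(const long long *stamps_us,
                                               size_t n, long long period_us)
{
    struct jitter_stats s = {0, 0, 0, 0};

    for (size_t k = 1; k < n; k++) {
        long long off = (stamps_us[k] - stamps_us[k - 1]) - period_us;

        if (k == 1 || off < s.min_off_us)
            s.min_off_us = off;
        if (k == 1 || off > s.max_off_us)
            s.max_off_us = off;
        if (off < 0)
            s.early++;
        else if (off > 0)
            s.late++;
    }
    return s;
}
```

With timestamps 0, 100000, 200150, 300000 and 400000 and a period of 100000, only the third call is late (by 150us), yet the offsets are 0, +150, -150 and 0: the fourth interval looks 150us early although that call arrived exactly on schedule.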

I ran a 30s test (that is, 300 timer events) 100 times to generate some numbers. You can see them in the diagrams. There is a big drop-off at 200us. All 30000 timer event clock offsets are plotted in the second graph, where you can see some outliers.
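For anyone wanting to reproduce the histogram, the binning step could look roughly like the sketch below. It is not the script used for the graphs above; `bin_offsets`, the bin width, and the clamping of outliers into the edge bins are all assumptions made for illustration.

```c
#include <stddef.h>

/*
 * Hypothetical helper: count offsets (in microseconds) into nbins bins of
 * width bin_us, with the range centered on zero. Values outside the range
 * are clamped into the first or last bin so that outliers stay visible.
 */
static void bin_offsets(const long long *off_us, size_t n,
                        long long bin_us, size_t nbins, size_t *bins)
{
    long long half_range = bin_us * (long long)nbins / 2;

    for (size_t b = 0; b < nbins; b++)
        bins[b] = 0;

    for (size_t k = 0; k < n; k++) {
        long long shifted = off_us[k] + half_range;
        size_t idx;

        if (shifted < 0)
            idx = 0;                          /* below range: first bin */
        else if ((size_t)(shifted / bin_us) >= nbins)
            idx = nbins - 1;                  /* above range: last bin */
        else
            idx = (size_t)(shifted / bin_us);
        bins[idx]++;
    }
}
```

With 100us bins and four bins, the covered range is -200us to +200us; offsets of -150, 0, 150, 500 and -500 land in bins 0, 2, 3, 3 and 0 respectively.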

So the question is, has anyone else done this kind of analysis before? Did you see the same sort of behavior? My assumption is that the RT kernel helps on systems with heavy loads, but in our case it didn't help eliminate timer jitter. Is that your experience?

Here's the code. Like I said before, I modified the example code on the PREEMPT_RT site that uses the clock_nanosleep() function, so I won't include my minimal changes for that.

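    #include <signal.h>
    #include <stdio.h>
    #include <string.h>
    #include <sys/time.h>
    #include <stdlib.h>
    #include <unistd.h>          /* sleep() */

    #define US_PER_SEC 1000000
    #define WAIT_TIME 100000     /* timer period in microseconds (100 ms) */
    #define MAX_COUNTER 300      /* intervals to record (30 s at 100 ms) */

    int counter = 0;
    long long last_time = 0;
    static long long times[MAX_COUNTER];
    int i = 0;
    struct sigaction sa;

    void timer_handler(int signum)
    {
        (void)signum;

        /* Once the buffer is full, print the recorded intervals and exit.
         * Note: printf() and exit() are not async-signal-safe; this is
         * tolerable only because main() does nothing but sleep. */
        if (counter >= MAX_COUNTER)
        {
            sigaction(SIGALRM, &sa, NULL);
            for (i = 0; i < MAX_COUNTER; i++)
            {
                printf("%lld\n", times[i]);
            }
            exit(EXIT_SUCCESS);
        }

        /* Current wall-clock time in microseconds. */
        struct timeval t;
        gettimeofday(&t, NULL);
        long long elapsed = ((long long)t.tv_sec * US_PER_SEC + t.tv_usec);

        /* Skip the first call: there is no previous timestamp yet. */
        if (last_time != 0)
        {
            times[counter] = elapsed - last_time;
            ++counter;
        }
        last_time = elapsed;
    }

    int main()
    {
        struct itimerval timer;

        /* Install the SIGALRM handler. */
        memset(&sa, 0, sizeof(sa));
        sa.sa_handler = &timer_handler;
        sigaction(SIGALRM, &sa, NULL);

        /* First expiry after 1 us, then every WAIT_TIME microseconds. */
        timer.it_value.tv_sec = 0;
        timer.it_value.tv_usec = 1;
        timer.it_interval.tv_sec = 0;
        timer.it_interval.tv_usec = WAIT_TIME;
        setitimer(ITIMER_REAL, &timer, NULL);

        /* Idle; all the work happens in the signal handler. */
        while (1)
        {
            sleep(1);
        }
    }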

EDIT: this is on a Xeon E31220L, running at 2.2 GHz, running x86_64 Fedora Core 19.
