My understanding is that I should be able to grab a TensorFlow model from Google's AI Hub, deploy it to TensorFlow Serving and use it to make predictions by POSTing images via REST requests using curl.

I could not find any bounding box (bbox) predictors on AI Hub at this time, but I did find one in the TensorFlow model zoo:

http://download.tensorflow.org/models/object_detection/ssd_mobilenet_v2_coco_2018_03_29.tar.gz

I have the model deployed to TensorFlow Serving, but the documentation is unclear about exactly what the JSON body of the REST request should contain.

My understanding is that:

- The SignatureDefinition of the model determines what the JSON should look like

- I should base64 encode the images

I was able to get the signature definition of the model like so:

>python tensorflow/tensorflow/python/tools/saved_model_cli.py show --dir /Users/alexryan/alpine/git/tfserving-tutorial3/model-volume/models/bbox/1/ --all

MetaGraphDef with tag-set: 'serve' contains the following SignatureDefs:

signature_def['serving_default']:
  The given SavedModel SignatureDef contains the following input(s):
    inputs['in'] tensor_info:
        dtype: DT_UINT8
        shape: (-1, -1, -1, 3)
        name: image_tensor:0
  The given SavedModel SignatureDef contains the following output(s):
    outputs['out'] tensor_info:
        dtype: DT_FLOAT
        shape: unknown_rank
        name: detection_boxes:0
  Method name is: tensorflow/serving/predict

I think the shape (-1, -1, -1, 3) is telling me that the model accepts a batch of any size, containing 3-channel images of any height and width. Is that right?
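As a minimal sketch of that reading (assuming -1 in the signature means "any size" for that dimension; `nested_shape` and `matches_signature` are hypothetical helper names, not part of TensorFlow), a nested list can be checked against the signature shape like this:

```python
def nested_shape(value):
    """Return the shape of a rectangular nested list, e.g. [[1, 2, 3]] -> (1, 3)."""
    shape = []
    while isinstance(value, list):
        shape.append(len(value))
        if not value:
            break
        value = value[0]  # only the first element is inspected at each level
    return tuple(shape)


def matches_signature(value, signature_shape):
    """True if the nested list's shape fits the signature; -1 matches any size."""
    shape = nested_shape(value)
    if len(shape) != len(signature_shape):
        return False
    return all(want == -1 or want == got
               for got, want in zip(shape, signature_shape))


SIGNATURE_SHAPE = (-1, -1, -1, 3)  # taken from the saved_model_cli output

# One 2x2 three-channel image in a batch of one: shape (1, 2, 2, 3).
batch = [[[[112, 71, 48], [104, 63, 40]],
          [[107, 70, 20], [108, 72, 21]]]]
print(nested_shape(batch))
print(matches_signature(batch, SIGNATURE_SHAPE))
```

The check only looks at the first element of each level, so it assumes the list is rectangular; it is meant to illustrate the wildcard dimensions, not to validate real input.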

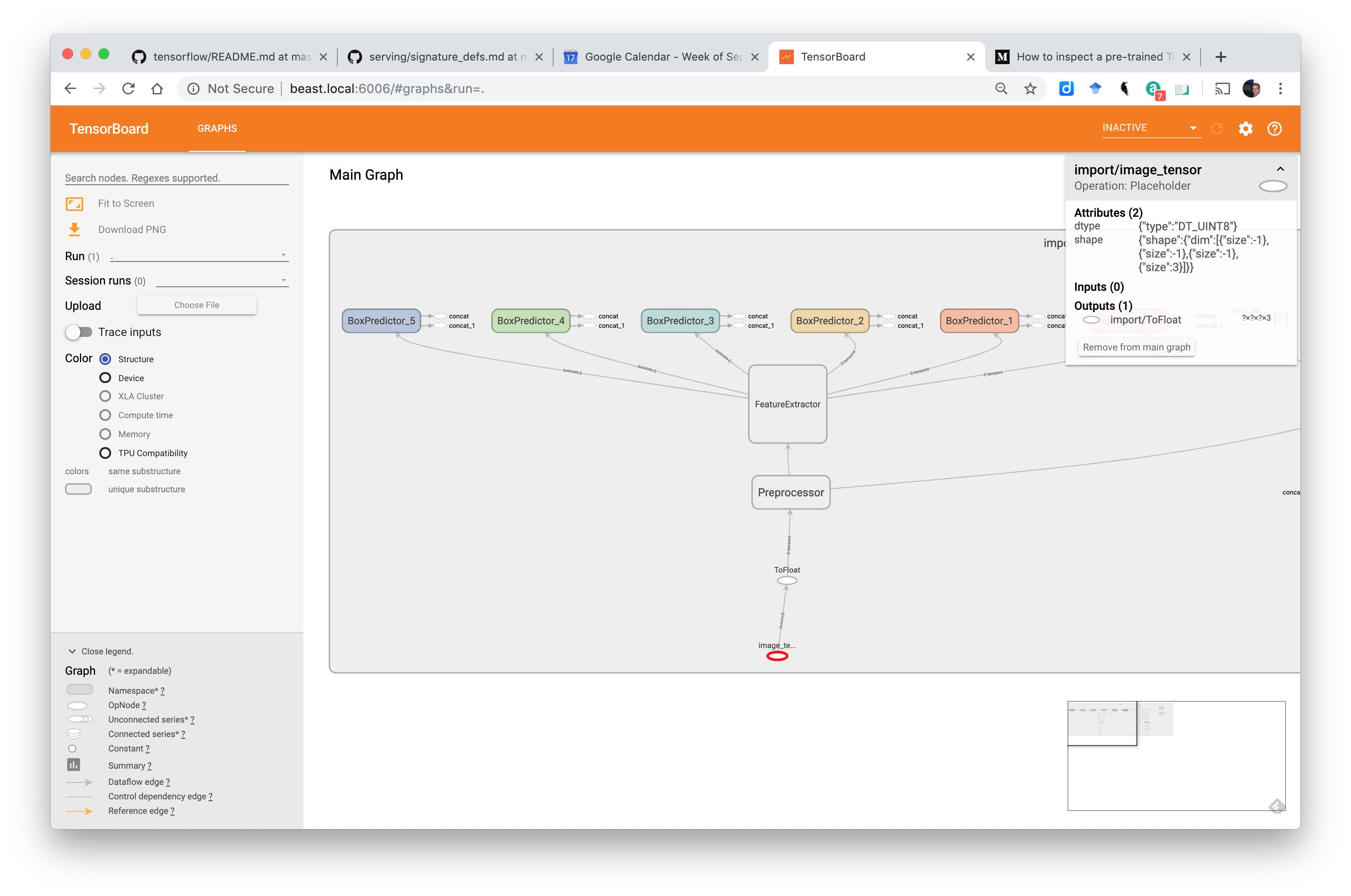

The input layer looks like this in Tensorboard:

But how do I convert this SignatureDefinition to a valid JSON request?

I'm assuming that I'm supposed to use the predict API ...

and Google's doc says ...

URL

POST http://host:port/v1/models/${MODEL_NAME}[/versions/${MODEL_VERSION}]:predict

/versions/${MODEL_VERSION} is optional. If omitted the latest version is used.
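To make the URL template concrete, here is a small sketch that fills it in. The function name `predict_url` is hypothetical, and the host, port, and model name in the usage line are simply the values that appear in the curl log further down.

```python
def predict_url(host, port, model_name, model_version=None):
    """Build the predict endpoint URL; the version segment is optional."""
    url = f"http://{host}:{port}/v1/models/{model_name}"
    if model_version is not None:
        url += f"/versions/{model_version}"
    return url + ":predict"


# With an explicit version, then without (the server picks the latest).
print(predict_url("127.0.0.1", 8501, "saved_model", 1))
print(predict_url("127.0.0.1", 8501, "saved_model"))
```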

Request format

The request body for predict API must be JSON object formatted as follows:

{
  // (Optional) Serving signature to use.
  // If unspecifed default serving signature is used.
  "signature_name": <string>,

  // Input Tensors in row ("instances") or columnar ("inputs") format.
  // A request can have either of them but NOT both.
  "instances": <value>|<(nested)list>|<list-of-objects>
  "inputs": <value>|<(nested)list>|<object>
}

Encoding binary values

JSON uses UTF-8 encoding. If you have input feature or tensor values that need to be binary (like image bytes), you must Base64 encode the data and encapsulate it in a JSON object having b64 as the key as follows:

{ "b64": "base64 encoded string" }

You can specify this object as a value for an input feature or tensor. The same format is used to encode output response as well.

A classification request with image (binary data) and caption features is shown below:

{
  "signature_name": "classify_objects",
  "examples": [
    {
      "image": { "b64": "aW1hZ2UgYnl0ZXM=" },
      "caption": "seaside"
    },
    {
      "image": { "b64": "YXdlc29tZSBpbWFnZSBieXRlcw==" },
      "caption": "mountains"
    }
  ]
}
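The b64 wrapping described in the doc can be sketched with the standard library alone. The helper names `to_b64_object` and `from_b64_object` are hypothetical; the encoded string is the one from the doc's own example.

```python
import base64


def to_b64_object(data: bytes) -> dict:
    """Wrap raw bytes in the {"b64": ...} object the doc describes."""
    return {"b64": base64.b64encode(data).decode("utf-8")}


def from_b64_object(obj: dict) -> bytes:
    """Recover the raw bytes from a {"b64": ...} object."""
    return base64.b64decode(obj["b64"])


# Decode the first sample value from the doc, then encode it again.
decoded = from_b64_object({"b64": "aW1hZ2UgYnl0ZXM="})
print(decoded)
print(to_b64_object(decoded))
```

Decoding shows that the doc's sample values are placeholder text ("image bytes" and "awesome image bytes"), not real image data, so the example says nothing about which image format the server expects.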

Uncertainties include:

- Should I use "instances" in my JSON?

- Should I base64 encode a JPG, a PNG, or something else?

- Should the image be of a particular width and height?

In the article "Serving Image-Based Deep Learning Models with TensorFlow-Serving’s RESTful API", this format is suggested:

{
  "instances": [
    {"b64": "iVBORw"},
    {"b64": "pT4rmN"},
    {"b64": "w0KGg2"}
  ]
}
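A body in that shape can be generated for any number of images with `json.dumps` rather than string formatting, which avoids quoting mistakes. This is only a sketch: `build_predict_request` is a hypothetical name, and it builds the body without saying anything about whether a given model's signature will accept it.

```python
import base64
import json


def build_predict_request(images):
    """Return a JSON body with one {"b64": ...} instance per image (bytes)."""
    instances = [{"b64": base64.b64encode(data).decode("utf-8")}
                 for data in images]
    return json.dumps({"instances": instances})


# Two stand-in byte strings; real use would pass the contents of image files.
body = build_predict_request([b"first image bytes", b"second image bytes"])
print(body)
```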

I used this image: https://tensorflow.org/images/blogs/serving/cat.jpg

and base64 encoded it like so:

import base64
import requests

IMAGE_URL = 'https://tensorflow.org/images/blogs/serving/cat.jpg'

# Download the image
dl_request = requests.get(IMAGE_URL, stream=True)
dl_request.raise_for_status()

# Compose a JSON Predict request (send JPEG image in base64).
jpeg_bytes = base64.b64encode(dl_request.content).decode('utf-8')
predict_request = '{"instances" : [{"b64": "%s"}]}' % jpeg_bytes

But when I use curl to POST the base64 encoded image like so:

{"instances" : [{"b64": "/9j/4AAQSkZJRgABAQAASABIAAD/4QBYRXhpZgAATU0AKgAA

...

KACiiigAooooAKKKKACiiigAooooA//Z"}]}

I get a response like this:

>./test_local_tfs.sh

HEADER=|Content-Type:application/json;charset=UTF-8|

URL=|http://127.0.0.1:8501/v1/models/saved_model/versions/1:predict|

* Trying 127.0.0.1...

* TCP_NODELAY set

* Connected to 127.0.0.1 (127.0.0.1) port 8501 (#0)

> POST /v1/models/saved_model/versions/1:predict HTTP/1.1

> Host: 127.0.0.1:8501

> User-Agent: curl/7.54.0

> Accept: */*

> Content-Type:application/json;charset=UTF-8

> Content-Length: 85033

> Expect: 100-continue

>

< HTTP/1.1 100 Continue

* We are completely uploaded and fine

< HTTP/1.1 400 Bad Request

< Content-Type: application/json

< Date: Tue, 17 Sep 2019 10:47:18 GMT

< Content-Length: 85175

<

{ "error": "Failed to process element: 0 of \'instances\' list. Error: Invalid argument: JSON Value: {\n \"b64\": \"/9j/4AAQSkZJRgABAQAAS

...

ooooA//Z\"\n} Type: Object is not of expected type: uint8" }
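For reference, the same POST can be sketched in Python with the standard library. The URL and Content-Type header are the ones shown in the log; `make_predict_request` and `send` are hypothetical helper names, the body is a placeholder, and `send` assumes the server answers with JSON for both success and error responses (as it does in the log).

```python
import json
import urllib.error
import urllib.request

URL = "http://127.0.0.1:8501/v1/models/saved_model/versions/1:predict"


def make_predict_request(url, body):
    """Build (but do not send) a POST request carrying `body` as JSON."""
    data = json.dumps(body).encode("utf-8")
    return urllib.request.Request(
        url,
        data=data,
        headers={"Content-Type": "application/json;charset=UTF-8"},
        method="POST",
    )


def send(request):
    """Send the request; return (status, parsed JSON), also for 4xx replies."""
    try:
        with urllib.request.urlopen(request) as resp:
            return resp.status, json.loads(resp.read())
    except urllib.error.HTTPError as err:
        return err.code, json.loads(err.read())


# Placeholder body; calling send(req) requires a running server.
req = make_predict_request(URL, {"instances": [{"b64": "aW1hZ2UgYnl0ZXM="}]})
print(req.get_method(), req.full_url)
```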

I've tried converting a local version of the same file to base64 like so (confirming that the dtype is uint8) ...

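import base64
import cv2

img = cv2.imread('cat.jpg')
print('dtype: ' + str(img.dtype))
# Re-encode the decoded pixels as JPEG, then base64 encode the JPEG bytes.
_, buf = cv2.imencode('.jpg', img)
jpeg_bytes = base64.b64encode(buf.tobytes()).decode('utf-8')
predict_request = '{"instances" : [{"b64": "%s"}]}' % jpeg_bytes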

But posting this JSON generates the same error.

However, when the JSON is formatted like so ...

{'instances': [[[[112, 71, 48], [104, 63, 40], [107, 70, 20], [108, 72, 21], [109, 77, 0], [106, 75, 0], [92, 66, 0], [106, 80, 0], [101, 80, 0], [98, 77, 0], [100, 75, 0], [104, 80, 0], [114, 88, 17], [94, 68, 0], [85, 54, 0], [103, 72, 11], [93, 62, 0], [120, 89, 25], [131, 101, 37], [125, 95, 31], [119, 91, 27], [121, 93, 29], [133, 105, 40], [119, 91, 27], [119, 96, 56], [120, 97, 57], [119, 96, 53], [102, 78, 36], [132, 103, 44], [117, 88, 28], [125, 89, 4], [128, 93, 8], [133, 94, 0], [126, 87, 0], [110, 74, 0], [123, 87, 2], [120, 92, 30], [124, 95, 33], [114, 90, 32],

...

, [43, 24, 33], [30, 17, 36], [24, 11, 30], [29, 20, 38], [37, 28, 46]]]]}

... it works. The problem is that this JSON file is >11 MB in size.
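A rough sketch of why the pixel-list form is so large: every uint8 value becomes several characters of JSON text plus brackets and separators, while base64 spends four characters per three bytes. The helper names are hypothetical and the image is a small synthetic stand-in, not the cat picture.

```python
import base64
import json


def pixel_list_request_size(height, width, pixel=(112, 71, 48)):
    """Length of a request that sends every pixel value as a JSON number."""
    image = [[list(pixel) for _ in range(width)] for _ in range(height)]
    return len(json.dumps({"instances": [image]}))


def b64_request_size(raw_bytes):
    """Length of a request that sends the given bytes as base64 text."""
    payload = base64.b64encode(raw_bytes).decode("utf-8")
    return len(json.dumps({"instances": [{"b64": payload}]}))


height, width = 10, 10            # synthetic stand-in, not the cat image
raw = bytes(height * width * 3)   # same number of uint8 values, all zero
print(pixel_list_request_size(height, width), b64_request_size(raw))
```

In the post itself the gap is wider still, because the base64 request carries the compressed JPEG file (Content-Length 85033 in the log) rather than the decoded pixels.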

How do I make the base64 encoded version of the JSON request work?

UPDATE: It seems that we have to edit the pretrained model to accept base64 images at the input layer.

The Medium article "Serving Image-Based Deep Learning Models with TensorFlow-Serving’s RESTful API" describes how to edit the model. Unfortunately, it assumes that we have access to the code which generated the model.

user260826's solution provides a workaround using an estimator, but it assumes the model is a Keras model, which is not true in this case.

Is there a generic method to make a model ready for TensorFlow Serving REST interface with a base64 encoded image that works with any of the TensorFlow model formats?