tl;dr / quick fix

- Don't decode/encode willy nilly

- Don't assume your strings are UTF-8 encoded

- Try to convert strings to Unicode strings as soon as possible in your code

- Fix your locale: How to solve UnicodeDecodeError in Python 3.6?

- Don't be tempted to use quick reload hacks

Unicode Zen in Python 2.x - The Long Version

Without seeing the source it's difficult to know the root cause, so I'll have to speak generally.

UnicodeDecodeError: 'ascii' codec can't decode byte generally happens when you try to convert a Python 2.x str that contains non-ASCII to a Unicode string without specifying the encoding of the original string.

In brief, Unicode strings are an entirely separate type of Python string that does not contain any encoding. They only hold Unicode code points and therefore can hold any code point from across the entire spectrum. Strings (str) contain encoded text, be it UTF-8, UTF-16, ISO-8859-1, GBK, Big5 etc. Strings are decoded to Unicode and Unicodes are encoded to strings. Files and text data are always transferred in encoded strings.
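As a rough illustration of the decode/encode relationship, here is a minimal sketch. It uses the euro sign as an arbitrary example and is written so that it should run on both Python 2.7 and Python 3 (where `u'...'` is just a regular str):

```python
# One Unicode string (a single code point, U+20AC) ...
euro = u'\u20ac'

# ... can be encoded to different byte strings, depending on the encoding.
utf8_bytes = euro.encode('utf-8')        # three bytes: E2 82 AC
cp1252_bytes = euro.encode('cp1252')     # one byte: 80
utf16_bytes = euro.encode('utf-16-le')   # two bytes: AC 20

# Decoding with the matching encoding always gives back the same Unicode string.
round_trips = [
    utf8_bytes.decode('utf-8'),
    cp1252_bytes.decode('cp1252'),
    utf16_bytes.decode('utf-16-le'),
]
```

The bytes differ in every case, but the Unicode string they represent is the same, which is why you need to know the encoding before you can decode.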

The Markdown module authors probably use unicode() (where the exception is thrown) as a quality gate to the rest of the code - it will convert ASCII or re-wrap existing Unicode strings to a new Unicode string. The Markdown authors can't know the encoding of the incoming string so will rely on you to decode strings to Unicode strings before passing to Markdown.

Unicode strings can be declared in your code using the u prefix to strings. E.g.

>>> my_u = u'my ünicôdé strįng'

>>> type(my_u)

<type 'unicode'>

Unicode strings may also come from files, databases and network modules. When this happens, you don't need to worry about the encoding.

Gotchas

Conversion from str to Unicode can happen even when you don't explicitly call unicode().

The following scenarios cause UnicodeDecodeError exceptions:

# Explicit conversion without encoding

unicode('€')

# New style format string into Unicode string

# Python will try to convert value string to Unicode first

u"The currency is: {}".format('€')

# Old style format string into Unicode string

# Python will try to convert value string to Unicode first

u'The currency is: %s' % '€'

# Append string to Unicode

# Python will try to convert string to Unicode first

u'The currency is: ' + '€'
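One way to avoid all four failures is to decode the byte string explicitly before it meets a Unicode string. The sketch below assumes the bytes are UTF-8 encoded, which you would have to confirm for your own data. It should run on Python 2.7 and Python 3:

```python
# The euro sign as UTF-8 bytes (the encoding here is an assumption).
euro_bytes = b'\xe2\x82\xac'

# Decode once, explicitly. On Python 2, unicode(euro_bytes, 'utf-8') is equivalent.
euro = euro_bytes.decode('utf-8')

# None of these trigger an implicit ASCII decode any more.
formatted_new = u"The currency is: {}".format(euro)
formatted_old = u'The currency is: %s' % euro
appended = u'The currency is: ' + euro
```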

Examples

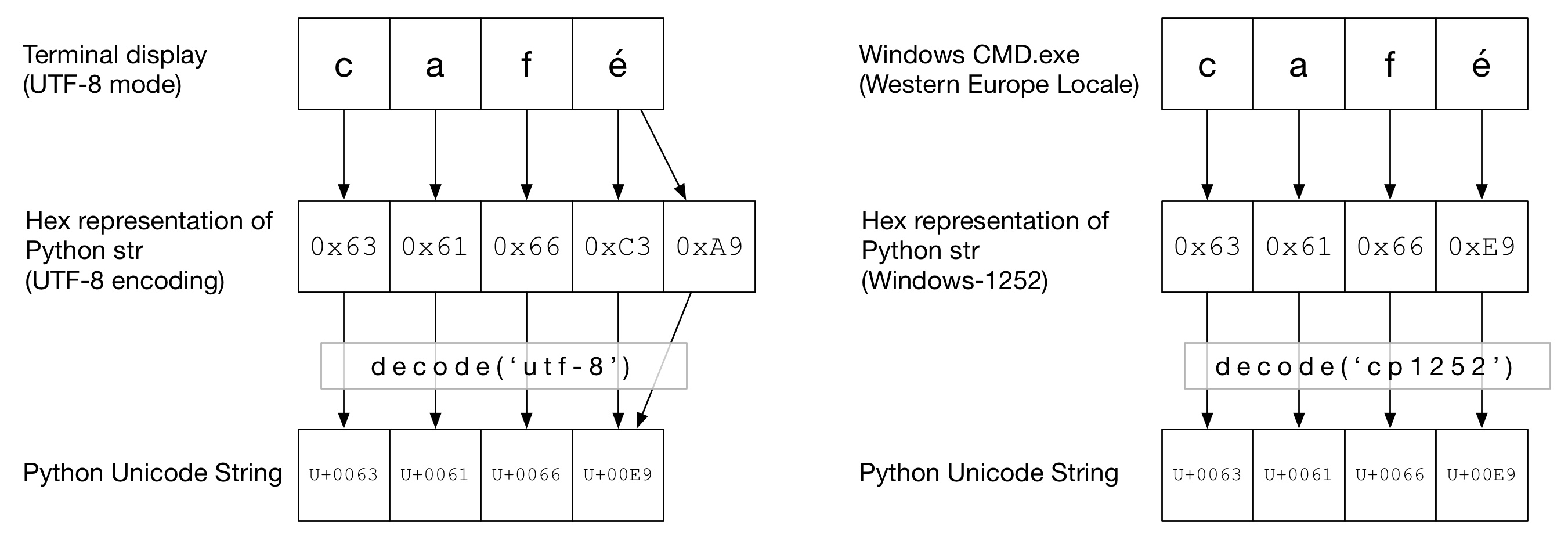

In the following diagram, you can see how the word café has been encoded in either "UTF-8" or "Cp1252" encoding depending on the terminal type. In both examples, caf is just regular ASCII. In UTF-8, é is encoded using two bytes. In "Cp1252", é is 0xE9, which also happens to be its Unicode code point value (it's no coincidence). The correct decode() is invoked and conversion to a Python Unicode is successful:

![Diagram of a string being converted to a Python Unicode string]()
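The same thing can be checked in code. This is a small sketch of the café example, with the byte values written out so it doesn't depend on your terminal's encoding:

```python
word = u'caf\xe9'  # café as a Unicode string

utf8 = word.encode('utf-8')      # é becomes two bytes: C3 A9
cp1252 = word.encode('cp1252')   # é becomes one byte: E9

# In Cp1252 the byte value matches the Unicode code point of é.
code_point = ord(word[-1])
last_cp1252_byte = bytearray(cp1252)[-1]

# Decoding each byte string with its own encoding recovers the same text.
from_utf8 = utf8.decode('utf-8')
from_cp1252 = cp1252.decode('cp1252')
```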

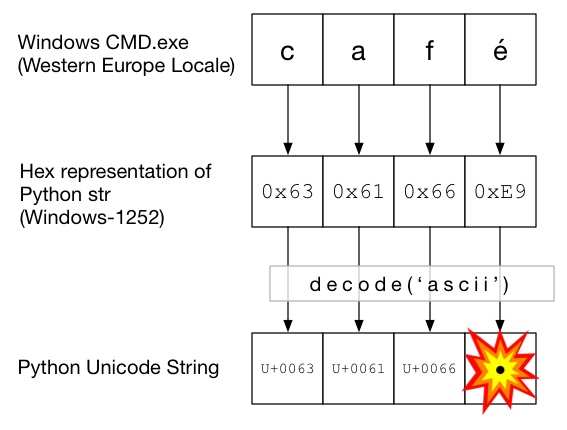

In this diagram, decode() is called with ascii (which is the same as calling unicode() without an encoding given). As ASCII can't contain bytes greater than 0x7F, this will throw a UnicodeDecodeError exception:

![Diagram of a string being converted to a Python Unicode string with the wrong encoding]()
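To reproduce the failure on purpose, here is a minimal sketch that decodes the UTF-8 bytes of café as ASCII and inspects the resulting exception:

```python
raw = b'caf\xc3\xa9'  # café encoded as UTF-8

try:
    raw.decode('ascii')
    error = None
except UnicodeDecodeError as exc:
    # Keep a reference so the details can be inspected afterwards.
    error = exc

# The exception records which codec failed and where the offending byte sits.
failed_codec = error.encoding
bad_byte = error.object[error.start:error.end]
```

The position reported in the exception points at the first byte above 0x7F, which is usually the quickest clue to what the real encoding is.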

The Unicode Sandwich

It's good practice to form a Unicode sandwich in your code, where you decode all incoming data to Unicode strings, work with Unicodes, then encode to strs on the way out. This saves you from worrying about the encoding of strings in the middle of your code.
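As a sketch of the idea, the hypothetical function below (shout is just an illustrative name) decodes on the way in, does its work on Unicode, and encodes on the way out. The encodings are passed in because they can't be guessed:

```python
def shout(raw_bytes, in_encoding, out_encoding):
    # Bottom slice of bread: decode incoming bytes as early as possible.
    text = raw_bytes.decode(in_encoding)
    # The meat: work purely with Unicode, no encodings involved.
    text = text.upper()
    # Top slice of bread: encode only when the data leaves your code.
    return text.encode(out_encoding)


# Cp1252 input, UTF-8 output.
result = shout(b'caf\xe9', 'cp1252', 'utf-8')
```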

Input / Decode

Source code

If you need to bake non-ASCII into your source code, just create Unicode strings by prefixing the string with a u. E.g.

u'Zürich'

To allow Python to decode your source code, you will need to add an encoding header to match the actual encoding of your file. For example, if your file was encoded as 'UTF-8', you would use:

# encoding: utf-8

This is only necessary when you have non-ASCII in your source code.
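Put together, a source file might start like this. It's a sketch that assumes the file really is saved as UTF-8. On Python 2 the header has to appear on the first or second line of the file:

```python
# encoding: utf-8

city = u'Zürich'

# Six characters as a Unicode string, seven bytes once encoded as UTF-8.
char_count = len(city)
byte_count = len(city.encode('utf-8'))
```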

Files

Usually non-ASCII data is received from a file. The io module provides a TextIOWrapper that decodes your file on the fly, using a given encoding. You must use the correct encoding for the file - it can't be easily guessed. For example, for a UTF-8 file:

import io

with io.open("my_utf8_file.txt", "r", encoding="utf-8") as my_file:
    my_unicode_string = my_file.read()

my_unicode_string would then be suitable for passing to Markdown. If you get a UnicodeDecodeError from the read() line, then you've probably used the wrong encoding value.
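If you want to see both outcomes without a real file to hand, this self-contained sketch writes a small UTF-8 file to a temporary directory, then reads it back with the right and the wrong encoding. The temporary path is only there to make the example runnable:

```python
import io
import os
import tempfile

# Create a throwaway UTF-8 file to read from.
path = os.path.join(tempfile.mkdtemp(), "my_utf8_file.txt")
with io.open(path, "w", encoding="utf-8") as out_file:
    out_file.write(u"caf\xe9\n")

# Correct encoding: read() returns a Unicode string.
with io.open(path, "r", encoding="utf-8") as my_file:
    my_unicode_string = my_file.read()

# Wrong encoding: read() raises UnicodeDecodeError.
try:
    with io.open(path, "r", encoding="ascii") as my_file:
        my_file.read()
    wrong_encoding_failed = False
except UnicodeDecodeError:
    wrong_encoding_failed = True
```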

CSV Files

The Python 2.7 CSV module does not support non-ASCII characters 😩. Help is at hand, however, with https://pypi.python.org/pypi/backports.csv.

Use it like above but pass the opened file to it:

from backports import csv

import io

# yield is only valid inside a function, so wrap the loop in a generator
def read_rows():
    with io.open("my_utf8_file.txt", "r", encoding="utf-8") as my_file:
        for row in csv.reader(my_file):
            yield row
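If the same code has to run on both Python 2.7 and Python 3, one possible pattern is to fall back to the standard csv module when backports.csv isn't installed, since the Python 3 csv module already works with Unicode text. The sketch below makes that assumption, and iter_csv_rows is just an illustrative name:

```python
import io
import os
import tempfile

try:
    from backports import csv  # Python 2.7, needs the backports.csv package
except ImportError:
    import csv  # Python 3: the stdlib module handles Unicode text


def iter_csv_rows(path, encoding="utf-8"):
    # newline="" lets the csv reader handle line endings itself.
    with io.open(path, "r", encoding=encoding, newline="") as my_file:
        for row in csv.reader(my_file):
            yield row


# Write a small UTF-8 CSV file so the example can be run as-is.
csv_path = os.path.join(tempfile.mkdtemp(), "cities.csv")
with io.open(csv_path, "w", encoding="utf-8", newline="") as out_file:
    out_file.write(u"city,country\r\nZ\xfcrich,Schweiz\r\n")

rows = list(iter_csv_rows(csv_path))
```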

Databases

Most Python database drivers can return data in Unicode, but usually require a little configuration. Always use Unicode strings for SQL queries.

MySQL

In the connection string add:

charset='utf8',

use_unicode=True

E.g.

>>> db = MySQLdb.connect(host="localhost", user='root', passwd='passwd', db='sandbox', use_unicode=True, charset="utf8")

PostgreSQL

Add:

psycopg2.extensions.register_type(psycopg2.extensions.UNICODE)

psycopg2.extensions.register_type(psycopg2.extensions.UNICODEARRAY)

HTTP

Web pages can be encoded in just about any encoding. The Content-Type header should contain a charset parameter to hint at the encoding. The content can then be decoded manually against this value. Alternatively, Python-Requests returns Unicodes in response.text.
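For the manual route, here is a minimal sketch that pulls the charset out of a Content-Type value using the standard library's email.message.Message and decodes the body with it. The decode_body name and the UTF-8 fallback are my own assumptions for illustration, so pick a fallback that suits your data:

```python
from email.message import Message


def decode_body(body, content_type, fallback="utf-8"):
    # Let the stdlib parse the header instead of splitting strings by hand.
    msg = Message()
    msg["Content-Type"] = content_type
    # get_content_charset() returns None when no charset parameter is present.
    charset = msg.get_content_charset() or fallback
    return body.decode(charset)


# Header declares ISO-8859-1, so é arrives as the single byte E9.
latin1_page = decode_body(b"caf\xe9", "text/html; charset=ISO-8859-1")

# No charset given, so the assumed fallback (UTF-8) is used.
utf8_page = decode_body(b"caf\xc3\xa9", "text/html")
```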

Manually

If you must decode strings manually, you can simply do my_string.decode(encoding), where encoding is the appropriate encoding. Python 2.x supported codecs are given here: Standard Encodings. Again, if you get UnicodeDecodeError then you've probably got the wrong encoding.

The meat of the sandwich

Work with Unicodes as you would normal strs.

Output

stdout / printing

print writes through the stdout stream. Python tries to configure an encoder on stdout so that Unicodes are encoded to the console's encoding. For example, if a Linux shell's locale is en_GB.UTF-8, the output will be encoded to UTF-8. On Windows, you will be limited to an 8-bit code page.

An incorrectly configured console, such as a corrupt locale, can lead to unexpected print errors. The PYTHONIOENCODING environment variable can force the encoding for stdout.
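To see PYTHONIOENCODING in action, this sketch starts a child Python process with the variable set to utf-8 and captures the raw bytes it prints. Running the child through sys.executable is just a convenient way to make the example self-contained:

```python
import os
import subprocess
import sys

# Copy the current environment and force the child's stdout encoding.
env = dict(os.environ, PYTHONIOENCODING="utf-8")

# The child prints a Unicode string; we capture the encoded bytes.
out = subprocess.check_output(
    [sys.executable, "-c", "print(u'caf\\xe9')"],
    env=env,
)

printed_bytes = out.strip()  # drop the trailing newline
```

With the variable set, é should arrive as the two UTF-8 bytes C3 A9 regardless of the locale the parent process runs under.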

Files

Just like input, io.open can be used to transparently convert Unicodes to encoded byte strings.

Database

The same configuration for reading will allow Unicodes to be written directly.

Python 3

Python 3 is no more Unicode capable than Python 2.x is; however, it is slightly less confused on the topic. E.g. the regular str is now a Unicode string and the old str is now bytes.

The default encoding is UTF-8, so if you .decode() a byte string without giving an encoding, Python 3 uses UTF-8 encoding. This probably fixes 50% of people's Unicode problems.

Further, open() operates in text mode by default, so returns decoded str (Unicode ones). The encoding is derived from your locale, which tends to be UTF-8 on Un*x systems or an 8-bit code page, such as windows-1251, on Windows boxes.
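A short Python 3 only sketch of the first two points: decoding without an argument uses UTF-8, and mixing bytes with str fails loudly instead of attempting a silent ASCII decode:

```python
raw = b'caf\xc3\xa9'  # bytes, the old Python 2 str

# No encoding given: Python 3 decodes as UTF-8.
text = raw.decode()

# str and bytes no longer mix implicitly.
try:
    _ = 'price: ' + raw
    mixing_raises = False
except TypeError:
    mixing_raises = True
```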

Why you shouldn't use sys.setdefaultencoding('utf8')

It's a nasty hack (there's a reason you have to use reload) that will only mask problems and hinder your migration to Python 3.x. Understand the problem, fix the root cause and enjoy Unicode zen.

See Why should we NOT use sys.setdefaultencoding("utf-8") in a py script? for further details.