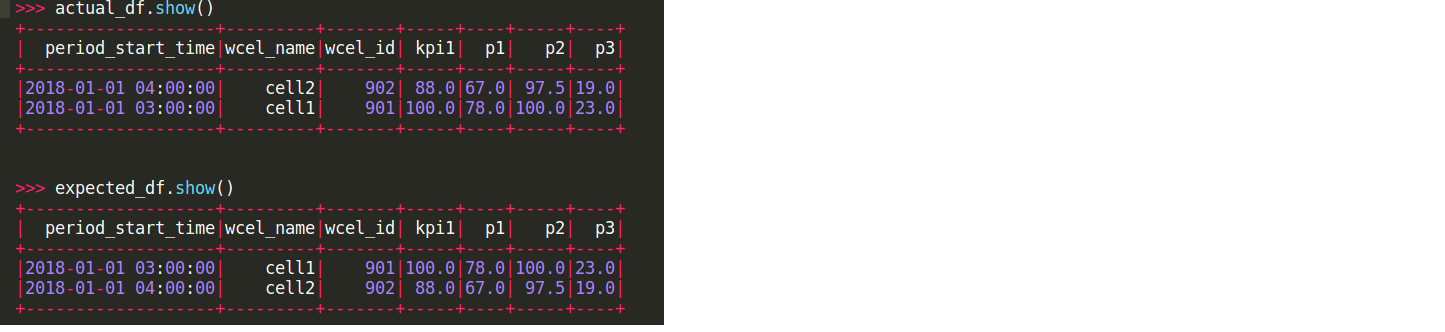

I have 2 pyspark dataframe as shown in file attached. expected_df and actual_df

In my unit test I am trying to check if both are equal or not.

for which my code is

expected = map(lambda row: row.asDict(), expected_df.collect())

actual = map(lambda row: row.asDict(), actaual_df.collect())

assert expected = actual

Since both dfs are same but row order is different so assert fails here. What is best way to compare such dfs.