I have two audio recordings of a same signal by 2 different microphones (for example, in a WAV format), but one of them is recorded with delay, for example, several seconds.

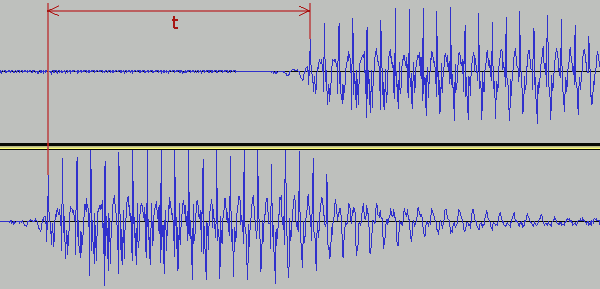

It's easy to identify such a delay visually when viewing these signals in some kind of waveform viewer - i.e. just spotting first visible peak in every signal and ensuring that they're the same shape:

(source: greycat.ru)

But how do I do it programmatically - find out what this delay (t) is? Two digitized signals are slightly different (because microphones are different, were at different positions, due to ADC setups, etc).

I've digged around a bit and found out that this problem is usually called "time-delay estimation" and it has myriads of approaches to it - for example, one of them.

But are there any simple and ready-made solutions, such as command-line utility, library or straight-forward algorithm available?

Conclusion: I've found no simple implementation and done a simple command-line utility myself - available at https://bitbucket.org/GreyCat/calc-sound-delay (GPLv3-licensed). It implements a very simple search-for-maximum algorithm described at Wikipedia.