I have PostgreSQL running in a Docker container on a server. How can I connect to it from the outside, that is, from my local computer? What settings do I need to apply to allow that?

You can run Postgres this way (map a port):

docker run --name some-postgres -e POSTGRES_PASSWORD=mysecretpassword -d -p 5432:5432 postgres

This maps port 5432 of your container to port 5432 of your server; the syntax is -p <host_port>:<container_port>. Your postgres is now accessible at your public-server-ip:5432.
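As a rough illustration of how the -p value is read, here is a minimal sketch of a helper that prints the host-side port, which is the port a client outside the container connects to. The function name host_port_of is hypothetical (it is not a Docker command), and the sketch assumes the mapping always names an explicit host port.

```shell
#!/bin/sh
# Hypothetical helper: print the host-side port of a docker -p mapping.
# Accepts "host_port:container_port" or "ip:host_port:container_port".
# Assumption: the host port is given explicitly (not a bare "-p 5432").
host_port_of() {
    spec=$1
    without_container=${spec%:*}               # drop ":container_port"
    printf '%s\n' "${without_container##*:}"   # drop an optional "ip:" prefix
}

host_port_of 5432:5432             # prints 5432
host_port_of 6543:5432             # prints 6543
host_port_of 127.0.0.1:5433:5432   # prints 5433
```

The printed value is what you would pass to psql -p on the client side; the container-side port stays 5432 in all three cases.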

To test: Run the postgres database (command above)

docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

05b3a3471f6f postgres "/docker-entrypoint.s" 1 seconds ago Up 1 seconds 0.0.0.0:5432->5432/tcp some-postgres

Go inside your container and create a database:

docker exec -it 05b3a3471f6f bash

root@05b3a3471f6f:/# psql -U postgres

postgres=# CREATE DATABASE mytest;

postgres=# \q

Go to your localhost (where you have some tool or the psql client).

psql -h public-ip-server -p 5432 -U postgres

(password mysecretpassword)

postgres=# \l

List of databases

Name | Owner | Encoding | Collate | Ctype | Access privileges

-----------+----------+----------+------------+------------+-----------------------

mytest | postgres | UTF8 | en_US.utf8 | en_US.utf8 |

postgres | postgres | UTF8 | en_US.utf8 | en_US.utf8 |

template0 | postgres | UTF8 | en_US.utf8 | en_US.utf8 | =c/postgres

So you're accessing the database (which is running in docker on a server) from your localhost.

It's explained in detail in this post.

ifconfig -u | grep 'inet ' | grep -v 127.0.0.1 | cut -d' ' -f2 | head -1 – Dittany

psql -h some-internal-docker-host -p 5432 -U postgres? – Etty

docker run --net=host --name some-postgres -e POSTGRES_PASSWORD=mysecretpassword -d -p 5432:5432 postgres – Ishtar

docker exec -it 05b3a3471f6f bash @Ishtar at that line, you don't need to write the container ID; you can replace it with "some-postgres", because the container was named in the command above. – Azilian

psql -h docker.for.mac.localhost -p 5432 -U postgres. Both of those options work. – Leahy

I managed to get it running on Linux.

Run the Postgres container and make sure the port is published. I use alpine because it's lightweight.

docker run --rm -P -p 127.0.0.1:5432:5432 -e POSTGRES_PASSWORD="1234" --name pg postgres:alpine

Using another terminal, access the database from the host using the postgres URI:

psql postgresql://postgres:1234@localhost:5432/postgres

for mac users, replace psql with pgcli

sudo to run your container. – Nannana

You can also access it through the docker exec command:

$ docker exec -it postgres-container bash

# su postgres

$ psql

Or

$ docker exec -it postgres-container psql -U postgres

What does su postgres do? – Ankerite

su postgres means: switch user to the postgres user. – Estop

psql after su postgres helped – Monaco

I am using Django with Postgres in Docker containers. In the docker-compose file, add the following:

db:
  image: postgres:10-alpine
  environment:
    - POSTGRES_DB=app
    - POSTGRES_USER=postgres
    - POSTGRES_PASSWORD=supersecretpassword
  ports:
    - "6543:5432"

This ports setting publishes the container's port 5432 on host port 6543 (the host port just needs to be different from 5432), which is the port your local machine connects to. For myself, I connected DBeaver to it. This prevents port clashes between requests from your app and requests from your local machine.

At first, I got a message saying that port 5432 was in use (by the Django app), so I couldn't connect with pgAdmin or DBeaver.

5432 can be used further for the container at no harm. I had a Windows PostgreSQL installation which was occupying the port 5432 needed to reach the Docker host; see Connect to dockerized postgres from Windows Docker host?. – Transposal

I'm assuming that you want to be able to view the data present in your container every time you connect to it from outside. To do this, you will have to persist the data outside the container, in a volume.

If you don't have persistent data, you will have to repeat everything you did the first time.

Steps 3, 5, 6, 7, and 8 answer your question directly.

Here is the detailed overview of the entire process I followed on Windows 10 powershell (commands are the same in Linux and macOS as well):

Step 1: Start powershell in non-admin mode

Step 2: Download postgres docker image:

docker pull postgres:latest

Step 3: Start docker container in detached mode and persist data on postgres image by creating a volume and binding it to a destination

(Note: 5432 is the default port, but state it explicitly anyway to prevent connection errors from clients like pgAdmin, DBeaver, etc.)

docker run --name postgres-test -e POSTGRES_PASSWORD=password -p 5432:5432 -v postgres-data:/var/lib/postgresql/data -d postgres:latest

Step 4: Check status of running containers

docker ps -a

Step 5: Go inside container_name in interactive mode

(Note: commands like ls, pwd, etc. can be executed here if you've checked Linux containers during installation)

docker exec -it postgres-test psql -U postgres

Step 6: Create sample data. At this point, you can play with psql commands in the following manner:

# CREATE DATABASE test;

# \c test

# CREATE TABLE test_table(something int);

# INSERT INTO test_table VALUES (123);

# SELECT * FROM test_table;

# \q
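If you would rather not type the Step 6 statements interactively, one possible approach is to save them to a script and feed it to psql. The sketch below only writes the file; the file name step6.sql is an assumption, and the final command is left commented out because it needs the running postgres-test container from Step 3 and a POSIX shell (PowerShell does not support the < redirection).

```shell
#!/bin/sh
# Write the Step 6 statements to a script file.
# The file name "step6.sql" is an arbitrary choice for this sketch.
cat > step6.sql <<'SQL'
CREATE DATABASE test;
\c test
CREATE TABLE test_table(something int);
INSERT INTO test_table VALUES (123);
SELECT * FROM test_table;
SQL

# To run it against the container from Step 3 (requires Docker and the
# running "postgres-test" container, so it is left commented out here):
# docker exec -i postgres-test psql -U postgres < step6.sql
```

The quoted heredoc delimiter ('SQL') keeps the backslash in \c test intact, so psql still sees it as a meta-command.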

Step 7: Open a database client application like pgadmin or dbeaver and enter the below in the connection fields:

Host: localhost

Database: test

User: postgres

Password: password

Step 8: Enter the query

SELECT * FROM test_table

in the query editor and you should be able to see the output 123.

I know this is late, if you used docker-compose like @Martin

These are the snippets that helped me connect to psql inside the container

docker-compose run db bash

root@de96f9358b70:/# psql -h db -U root -d postgres_db

I cannot comment because I don't have 50 reputation. So hope this helps.

I already had postgres running on the host machine and didn't want to allow connections from the network, so I ran a temporary postgres instance in a container and created a database in just two lines:

# Run PostgreSQL

docker run --name postgres-container -e POSTGRES_PASSWORD=password -it -p 5433:5432 postgres

# Create database

docker exec -it postgres-container createdb -U postgres my-db

Add -e POSTGRES_DB=my-db to create my-db instead of postgres – Duvalier

For some reason port 5432 seems protected. I changed my port config from 5432:5432 to 5416:5432, and the following command worked to connect to the postgres database from outside its docker container:

psql -h localhost -p 5416 -U <my-user> -d <my-database>

You probably have a postgres service on your host machine which already binds to localhost:5432, preventing you from using it. Mapping a different host port to the default port 5432 inside the container is a good solution to that; alternatively you could stop the postgres service on your host, but perhaps it's used for something you need. – Carryingon

Connect to a local container running postgres

Install psql

brew search postgres

brew install postgresql

Start the Docker container

docker run --name postgres -e POSTGRES_DB=users \
  -e POSTGRES_USER=john \
  -e POSTGRES_PASSWORD=password \
  -p 5432:5432 -d postgres

Connect to database

psql --host=localhost --username=john --dbname=users

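To connect from localhost you can also run the container with '--net host' (with host networking the container shares the host's network stack, so the -p mapping is ignored):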

docker run --name some-postgres --net host -e POSTGRES_PASSWORD=mysecretpassword -d -p 5432:5432 postgres

You can access the server directly without using exec from your localhost, by using:

psql -h localhost -p 5432 -U postgres

I tried to connect from localhost (mac) to a postgres container. I changed the port in the docker-compose file from 5432 to 3306 and started the container. No idea why I did it :|

Then I tried to connect to postgres via PSequel and adminer and the connection could not be established.

After switching back to port 5432 all works fine.

db:
  image: postgres
  ports:
    - 5432:5432
  restart: always
  volumes:
    - "db_sql:/var/lib/mysql"
  environment:
    POSTGRES_USER: root
    POSTGRES_PASSWORD: password
    POSTGRES_DB: postgres_db

This was my experience I wanted to share. Perhaps someone can make use of it.

/var/lib/mysql? – Decrescent

First open a shell in the postgres container:

docker exec -it <container_name> bash

Then you will get a root prompt: root@868594e88b53:/#

From there, connect to the database:

psql postgresql://<username>:<databasepassword>@postgres:5432/<database>
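The connection URI above follows the pattern postgresql://user:password@host:port/database. As a minimal sketch, a small helper can assemble it from its parts; the function name pg_uri is hypothetical (it is not part of psql or Docker), and the sketch assumes none of the values contain characters that need percent-encoding.

```shell
#!/bin/sh
# Hypothetical helper: assemble a connection URI of the form
# postgresql://user:password@host:port/database.
# Assumption: no argument contains characters that need percent-encoding
# (for example "@", ":" or "/" in the password).
pg_uri() {
    user=$1 password=$2 host=$3 port=$4 database=$5
    printf 'postgresql://%s:%s@%s:%s/%s\n' \
        "$user" "$password" "$host" "$port" "$database"
}

# Example with the placeholder credentials used earlier in this thread:
pg_uri postgres 1234 localhost 5432 postgres
# prints postgresql://postgres:1234@localhost:5432/postgres
```

You could then connect with psql "$(pg_uri postgres 1234 localhost 5432 postgres)". From inside another container the host would be the database container's name rather than localhost.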

This one worked for me:

PGPASSWORD=postgres psql -h localhost -p 3307 -U postgres -d postgres

Use the above to load an initial script as:

PGPASSWORD=postgres psql -h localhost -p 3307 -U postgres -d postgres < src/sql/local/blabla.sql

Do note that I remap my ports as:

docker run -p3307:5432 --name postgres -e POSTGRES_PASSWORD=postgres -d postgres

If it is a Django backend application, you can do something like this:

docker exec -it container_id python manage.py dbshell

After building my gateway-microservice application I had the same issue: I could not connect to the containerized PostgreSQL from HeidiSQL.

For now I have solved it by simply specifying the PostgreSQL password, as well as the port, in docker-compose.yml.

So find and open docker-compose.yml, set POSTGRES_PASSWORD (don't leave it empty), and specify the port "5432:5432":

services:
  microservice33-postgresql:
    environment:
      - POSTGRES_USER=microservice33
      - POSTGRES_PASSWORD=wwww
      - POSTGRES_HOST_AUTH_METHOD=trust
    ports:
      - 5432:5432

link for reference and screenshots post

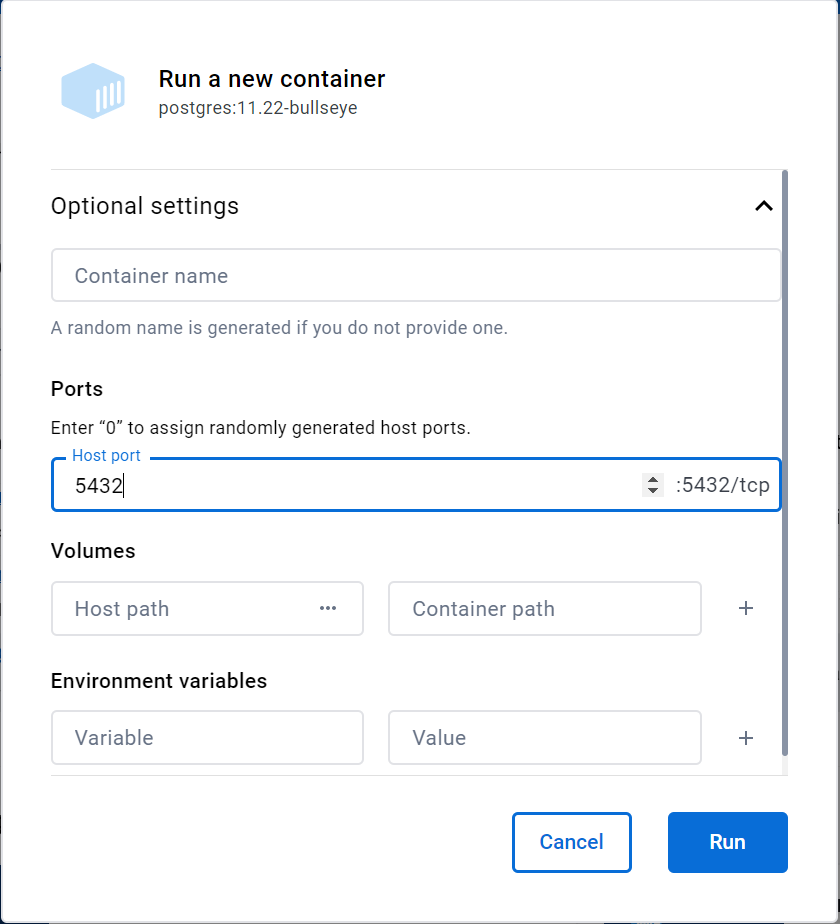

The answers in this thread helped me figure out how to do this in Docker Desktop, so I figured I would share that info. There are optional settings you can access when creating a new container, and you can set the host port there.

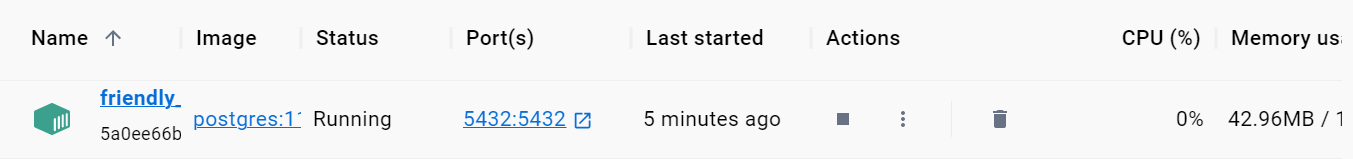

When you go to your containers you should see value in the ports, that's how you know you can connect to the container from your local computer.

There are good answers here but If you like to have some interface for postgres database management, you can install pgAdmin on your local computer and connect to the remote machine using its IP and the postgres exposed port (by default 5432).

Run docker ps -a to get the container IDs, then:

docker exec -it <container_id> psql -U <username> -W
