I have a Java app (JDK 13) running in a Docker container. Recently I moved the app to JDK 17 (OpenJDK 17) and noticed a gradual increase in the container's memory usage.

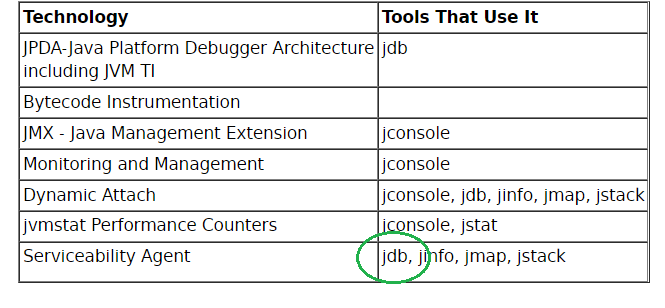

During the investigation I found that the Serviceability category reported by Native Memory Tracking (NMT) grows constantly, by about 15 MB per hour. I checked https://docs.oracle.com/en/java/javase/17/troubleshoot/diagnostic-tools.html#GUID-5EF7BB07-C903-4EBD-A9C2-EC0E44048D37 but this category is not mentioned there.

Could anyone explain what the Serviceability category means and what can cause such a gradual increase? There are also some new memory categories compared to JDK 13. Maybe someone knows where I can read about them in detail.

Here is the output of the command jcmd 1 VM.native_memory summary:

    Native Memory Tracking:

    (Omitting categories weighting less than 1KB)

    Total: reserved=4431401KB, committed=1191617KB

    - Java Heap (reserved=2097152KB, committed=479232KB)
        (mmap: reserved=2097152KB, committed=479232KB)
    - Class (reserved=1052227KB, committed=22403KB)
        (classes #29547)
        (  instance classes #27790, array classes #1757)
        (malloc=3651KB #79345)
        (mmap: reserved=1048576KB, committed=18752KB)
        (  Metadata:   )
        (    reserved=139264KB, committed=130816KB)
        (    used=130309KB)
        (    waste=507KB =0.39%)
        (  Class space:)
        (    reserved=1048576KB, committed=18752KB)
        (    used=18149KB)
        (    waste=603KB =3.21%)
    - Thread (reserved=387638KB, committed=40694KB)
        (thread #378)
        (stack: reserved=386548KB, committed=39604KB)
        (malloc=650KB #2271)
        (arena=440KB #752)
    - Code (reserved=253202KB, committed=76734KB)
        (malloc=5518KB #23715)
        (mmap: reserved=247684KB, committed=71216KB)
    - GC (reserved=152419KB, committed=92391KB)
        (malloc=40783KB #34817)
        (mmap: reserved=111636KB, committed=51608KB)
    - Compiler (reserved=1506KB, committed=1506KB)
        (malloc=1342KB #2557)
        (arena=165KB #5)
    - Internal (reserved=5579KB, committed=5579KB)
        (malloc=5543KB #33822)
        (mmap: reserved=36KB, committed=36KB)
    - Other (reserved=231161KB, committed=231161KB)
        (malloc=231161KB #347)
    - Symbol (reserved=30558KB, committed=30558KB)
        (malloc=28887KB #769230)
        (arena=1670KB #1)
    - Native Memory Tracking (reserved=16412KB, committed=16412KB)
        (malloc=575KB #8281)
        (tracking overhead=15837KB)
    - Shared class space (reserved=12288KB, committed=12136KB)
        (mmap: reserved=12288KB, committed=12136KB)
    - Arena Chunk (reserved=18743KB, committed=18743KB)
        (malloc=18743KB)
    - Tracing (reserved=32KB, committed=32KB)
        (arena=32KB #1)
    - Logging (reserved=7KB, committed=7KB)
        (malloc=7KB #289)
    - Arguments (reserved=1KB, committed=1KB)
        (malloc=1KB #53)
    - Module (reserved=1045KB, committed=1045KB)
        (malloc=1045KB #5026)
    - Safepoint (reserved=8KB, committed=8KB)
        (mmap: reserved=8KB, committed=8KB)
    - Synchronization (reserved=204KB, committed=204KB)
        (malloc=204KB #2026)
    - Serviceability (reserved=31187KB, committed=31187KB)
        (malloc=31187KB #49714)
    - Metaspace (reserved=140032KB, committed=131584KB)
        (malloc=768KB #622)
        (mmap: reserved=139264KB, committed=130816KB)
    - String Deduplication (reserved=1KB, committed=1KB)
        (malloc=1KB #8)
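To follow how a single category such as Serviceability changes between snapshots, the summary text can be parsed instead of compared by eye. The following is only a minimal sketch: the class name NmtSummary and its method are hypothetical helpers, and it assumes the default KB scale and the "- Name (reserved=...KB, committed=...KB)" line shape seen in the output above.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper: extracts the committed size of each NMT category
// from the text printed by "jcmd <pid> VM.native_memory summary".
final class NmtSummary {

    // Matches category header lines only, e.g.
    // "- Serviceability (reserved=31187KB, committed=31187KB)".
    // Assumption: sizes are printed in KB (the default scale).
    private static final Pattern CATEGORY = Pattern.compile(
            "^\\s*-\\s+(.+?)\\s+\\(reserved=(\\d+)KB, committed=(\\d+)KB\\)",
            Pattern.MULTILINE);

    // Returns category name -> committed KB, in the order the categories appear.
    static Map<String, Long> committedKbByCategory(String summary) {
        Map<String, Long> result = new LinkedHashMap<>();
        Matcher m = CATEGORY.matcher(summary);
        while (m.find()) {
            result.put(m.group(1), Long.parseLong(m.group(3)));
        }
        return result;
    }

    public static void main(String[] args) {
        // Sample lines copied from the summary above.
        String sample = String.join("\n",
                "Total: reserved=4431401KB, committed=1191617KB",
                "- Java Heap (reserved=2097152KB, committed=479232KB)",
                "  (mmap: reserved=2097152KB, committed=479232KB)",
                "- Serviceability (reserved=31187KB, committed=31187KB)",
                "  (malloc=31187KB #49714)");
        committedKbByCategory(sample)
                .forEach((name, kb) -> System.out.println(name + ": " + kb + " KB"));
    }
}
```

Taking two such maps some time apart and subtracting the values per category gives the growth rate for each category directly.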

The NMT detail output for the allocation site that keeps growing is:

    [0x00007f6ccb970cbe] OopStorage::try_add_block()+0x2e
    [0x00007f6ccb97132d] OopStorage::allocate()+0x3d
    [0x00007f6ccbb34ee8] StackFrameInfo::StackFrameInfo(javaVFrame*, bool)+0x68
    [0x00007f6ccbb35a64] ThreadStackTrace::dump_stack_at_safepoint(int)+0xe4
                         (malloc=6755KB type=Serviceability #10944)

Update #1 from 2022-01-17:

Thanks to @Aleksey Shipilev for the help! We were able to find the place that causes the issue: it is related to frequent ThreadMXBean#dumpAllThreads calls. Below is an MCVE, Test.java.

Run it with:

    java -Xmx512M -XX:NativeMemoryTracking=detail Test.java

and periodically check the Serviceability category in the output of

    jcmd YOUR_PID VM.native_memory summary
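As an alternative to running jcmd by hand, the same summary can probably be polled from inside the JVM through the HotSpot DiagnosticCommand MBean. This is a hedged sketch, not a verified recipe: the class NmtPoller is hypothetical, and the MBean name com.sun.management:type=DiagnosticCommand and its vmNativeMemory operation are HotSpot-specific details that should be checked against the JDK in use. The JVM must also be started with -XX:NativeMemoryTracking=summary or detail, otherwise no category lines are reported.

```java
import java.lang.management.ManagementFactory;
import java.util.Optional;
import javax.management.MBeanServer;
import javax.management.ObjectName;

// Hypothetical helper: polls the NMT summary of the current JVM.
final class NmtPoller {

    // Asks the DiagnosticCommand MBean for the equivalent of
    // "jcmd <pid> VM.native_memory summary".
    // Assumption: HotSpot exposes this as the "vmNativeMemory" operation.
    static String nativeMemorySummary() throws Exception {
        MBeanServer server = ManagementFactory.getPlatformMBeanServer();
        ObjectName name = new ObjectName("com.sun.management:type=DiagnosticCommand");
        Object result = server.invoke(
                name,
                "vmNativeMemory",
                new Object[] {new String[] {"summary"}},
                new String[] {String[].class.getName()});
        return String.valueOf(result);
    }

    // Finds the header line of one category, e.g.
    // "- Serviceability (reserved=31187KB, committed=31187KB)".
    static Optional<String> findCategoryLine(String summary, String category) {
        return summary.lines()
                .map(String::strip)
                .filter(line -> line.startsWith("-")
                        && line.contains(category + " (reserved="))
                .findFirst();
    }

    public static void main(String[] args) throws Exception {
        // Print the Serviceability line a few times, one second apart.
        for (int i = 0; i < 5; i++) {
            String line = findCategoryLine(nativeMemorySummary(), "Serviceability")
                    .orElse("Serviceability not reported (is NMT enabled?)");
            System.out.println(line);
            Thread.sleep(1_000);
        }
    }
}
```

Calling nativeMemorySummary() from a scheduled task inside the application would give a time series of the category without needing shell access to the container.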

Test.java:

    import java.lang.management.ManagementFactory;
    import java.lang.management.ThreadInfo;
    import java.lang.management.ThreadMXBean;
    import java.util.concurrent.ExecutorService;
    import java.util.concurrent.Executors;
    import java.util.concurrent.TimeUnit;

    public class Test {

        private static final int RUNNING = 40;
        private static final int WAITING = 460;

        private final Object monitor = new Object();
        private final ThreadMXBean threadMxBean = ManagementFactory.getThreadMXBean();
        private final ExecutorService executorService = Executors.newFixedThreadPool(RUNNING + WAITING);

        // Busy-spins until interrupted, so the worker stays RUNNABLE.
        void startRunningThread() {
            executorService.submit(() -> {
                while (!Thread.currentThread().isInterrupted()) {
                    Thread.onSpinWait();
                }
            });
        }

        // Parks the worker in Object.wait(). The monitor must be held,
        // otherwise wait() throws IllegalMonitorStateException.
        void startWaitingThread() {
            executorService.submit(() -> {
                synchronized (monitor) {
                    try {
                        monitor.wait();
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                }
            });
        }

        void startThreads() {
            for (int i = 0; i < RUNNING; i++) {
                startRunningThread();
            }
            for (int i = 0; i < WAITING; i++) {
                startWaitingThread();
            }
        }

        void shutdown() {
            // shutdownNow() interrupts the workers; a plain shutdown() would
            // leave the spinning and waiting tasks running forever.
            executorService.shutdownNow();
            try {
                executorService.awaitTermination(5, TimeUnit.SECONDS);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }

        public static void main(String[] args) throws InterruptedException {
            Test test = new Test();
            Runtime.getRuntime().addShutdownHook(new Thread(test::shutdown));
            test.startThreads();

            // Dump all threads every 100 ms for about 20 minutes.
            for (int i = 0; i < 12000; i++) {
                ThreadInfo[] threadInfos = test.threadMxBean.dumpAllThreads(false, false);
                System.out.println("ThreadInfos: " + threadInfos.length);
                Thread.sleep(100);
            }

            test.shutdown();
        }
    }