My Airflow DAGs consist mainly of PythonOperators, and I would like to use my Python IDE's debugging tools to develop Python code "inside" Airflow. I rely on Airflow's database connectors, which I think would be ugly to move "out" of Airflow for development.

I have been using Airflow for a while, and so far I have only managed to develop and debug via the CLI, which is starting to get tiresome.

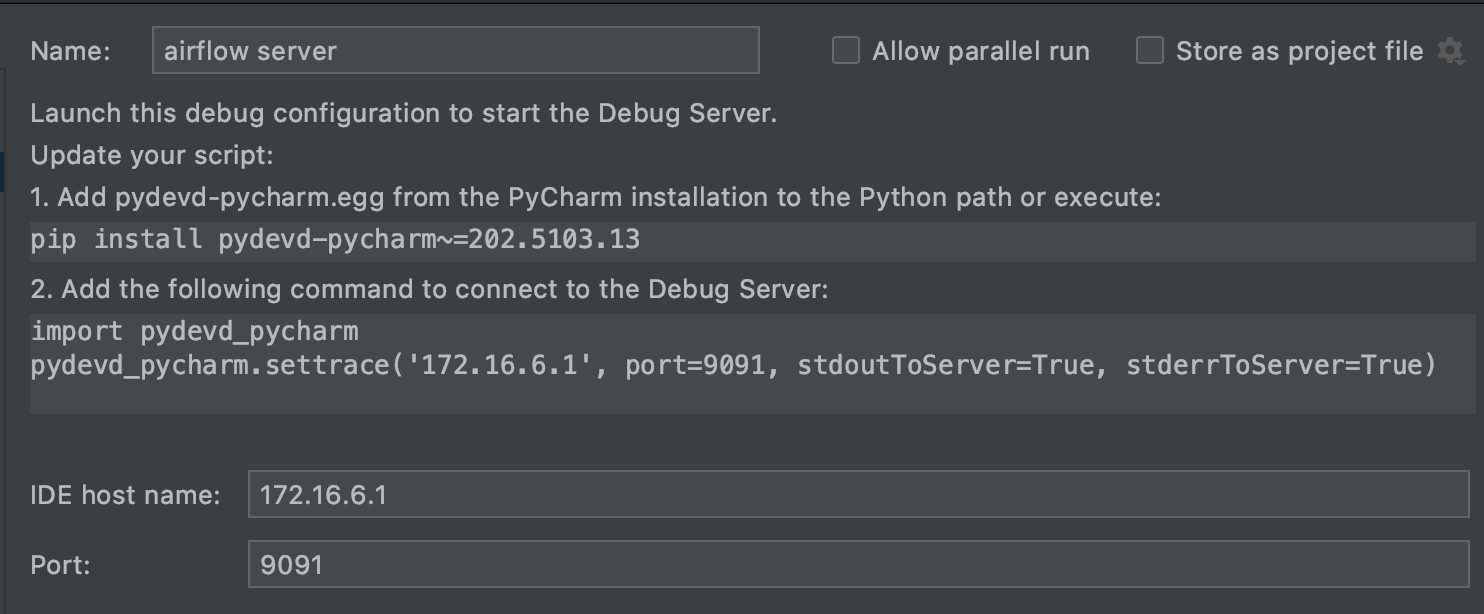

Does anyone know of a nice way to set up PyCharm, or another IDE, so that I can use the IDE's debugging toolset when running `airflow test ..`?