Update: Dev's answer is great and deserves more votes.

It's great because it includes a Python implementation of a paper that computes the 4 points dynamically.

My answer is useful as a first-principles approach:

- great when getting started from scratch and trying/experimenting with different approaches

- not a robust solution, since it relies on working out threshold values on a case-by-case basis (e.g. the contour simplification epsilon value, etc.).

If understanding these basic principles is still useful to you, read on.

I recommend the following steps:

- threshold() the image

- dilate() the image: this removes the black line splitting the top and bottom sections, as well as darker artifacts on the lower part

- findContours(), set to retrieve only external contours (RETR_EXTERNAL) and to simplify the output (CHAIN_APPROX_SIMPLE)

- process the contours further

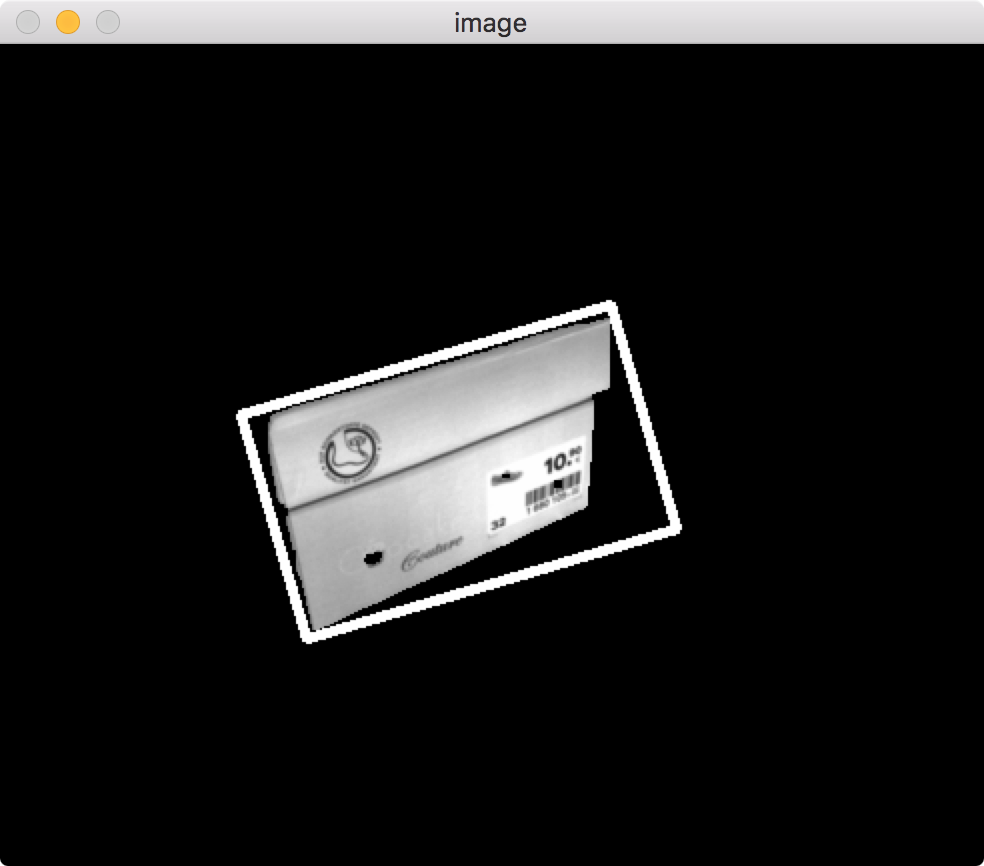

Step 1: threshold

```python
# threshold image: pixels above 127 become 255, the rest become 0
ret, thresh = cv2.threshold(img, 127, 255, cv2.THRESH_BINARY)
cv2.imshow('threshold', thresh)
```

![threshold]()

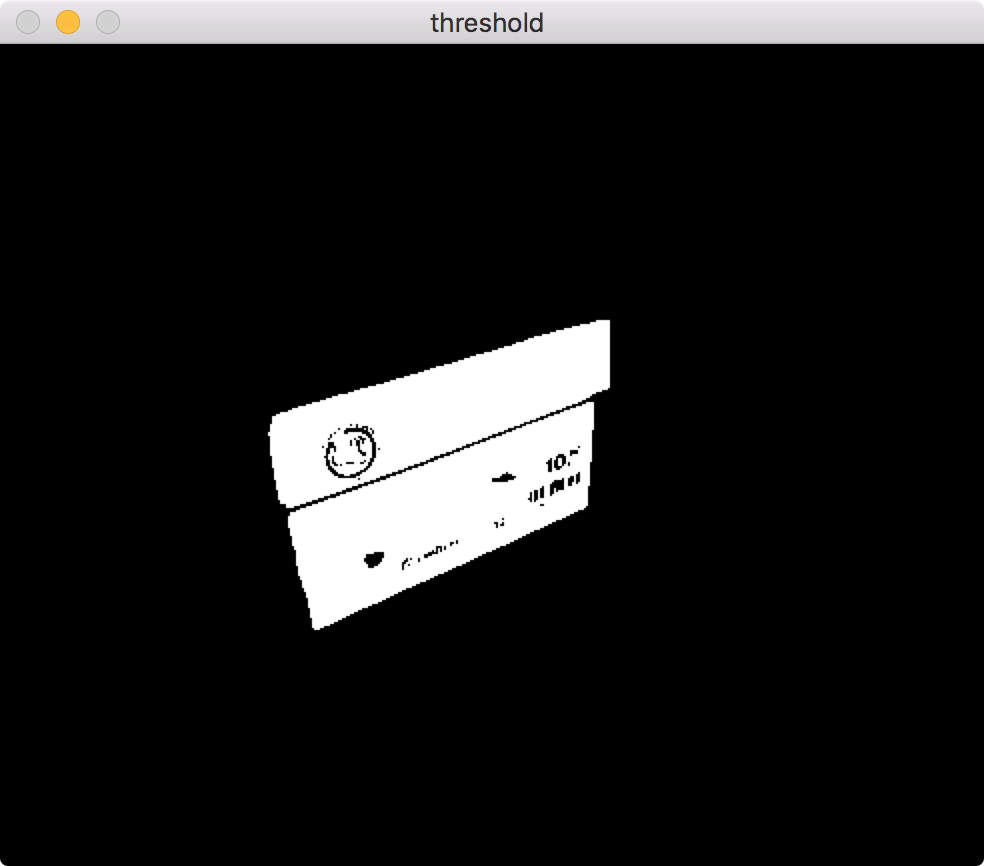

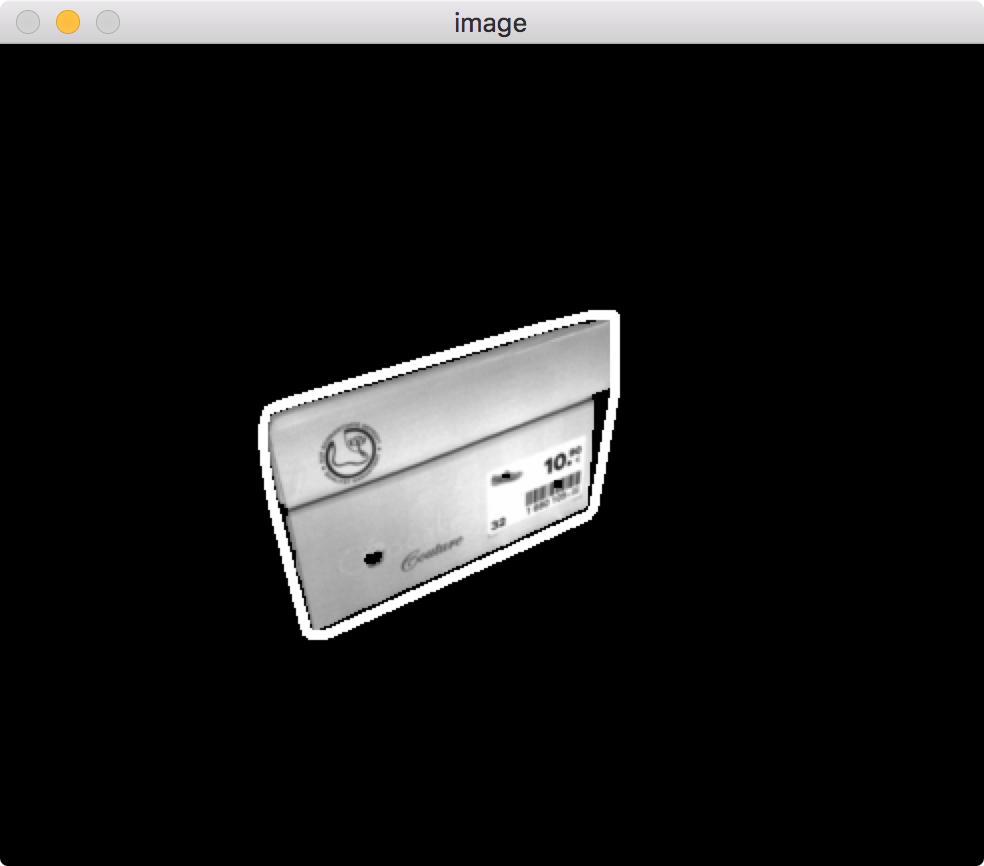
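For reference, the per-pixel rule behind cv2.threshold(img, 127, 255, cv2.THRESH_BINARY) can be sketched in plain NumPy. This is only an illustration of what the call computes, not a replacement for it; binary_threshold is a hypothetical helper name.

```python
import numpy as np


def binary_threshold(img: np.ndarray, thresh: int = 127, maxval: int = 255) -> np.ndarray:
    """Same rule as THRESH_BINARY: maxval where pixel > thresh, otherwise 0."""
    return np.where(img > thresh, maxval, 0).astype(np.uint8)


img = np.array([[0, 127, 128],
                [200, 50, 255]], dtype=np.uint8)
print(binary_threshold(img))
```

Note that a pixel exactly equal to 127 stays black, because THRESH_BINARY uses a strict "greater than" comparison.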

Step 2: dilate

```python
# dilate thresholded image - merges top/bottom
kernel = np.ones((3, 3), np.uint8)
dilated = cv2.dilate(thresh, kernel, iterations=3)
cv2.imshow('threshold dilated', dilated)
```

![dilate]()

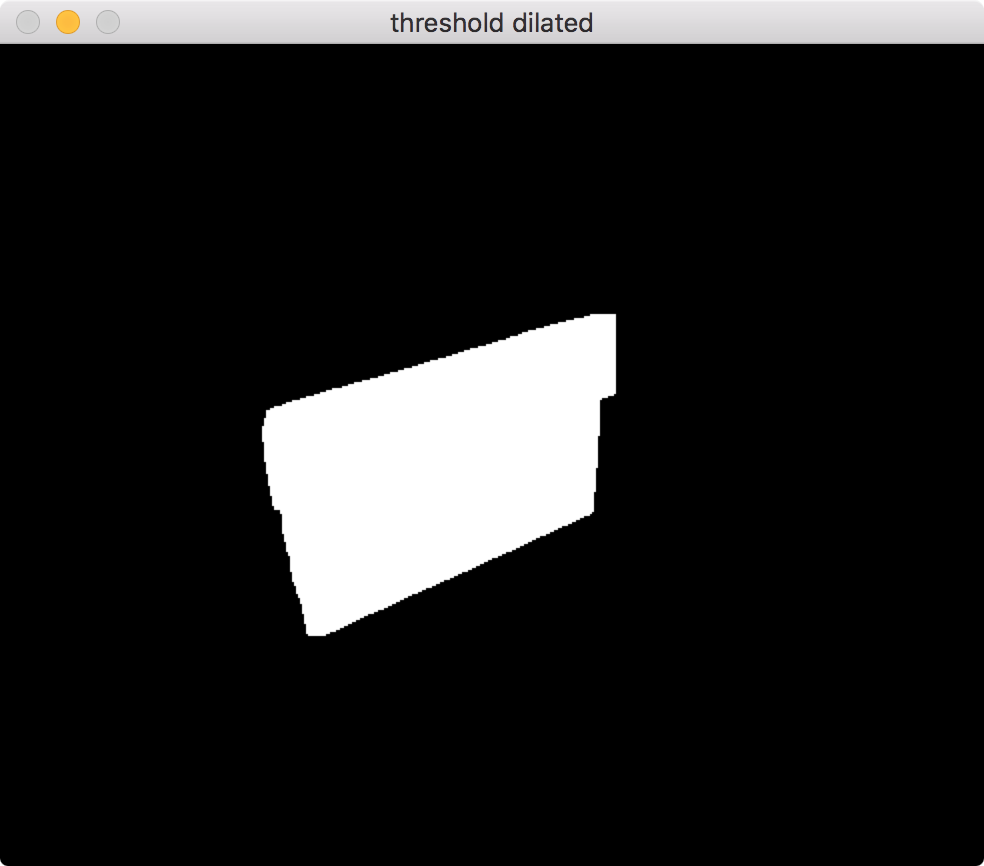

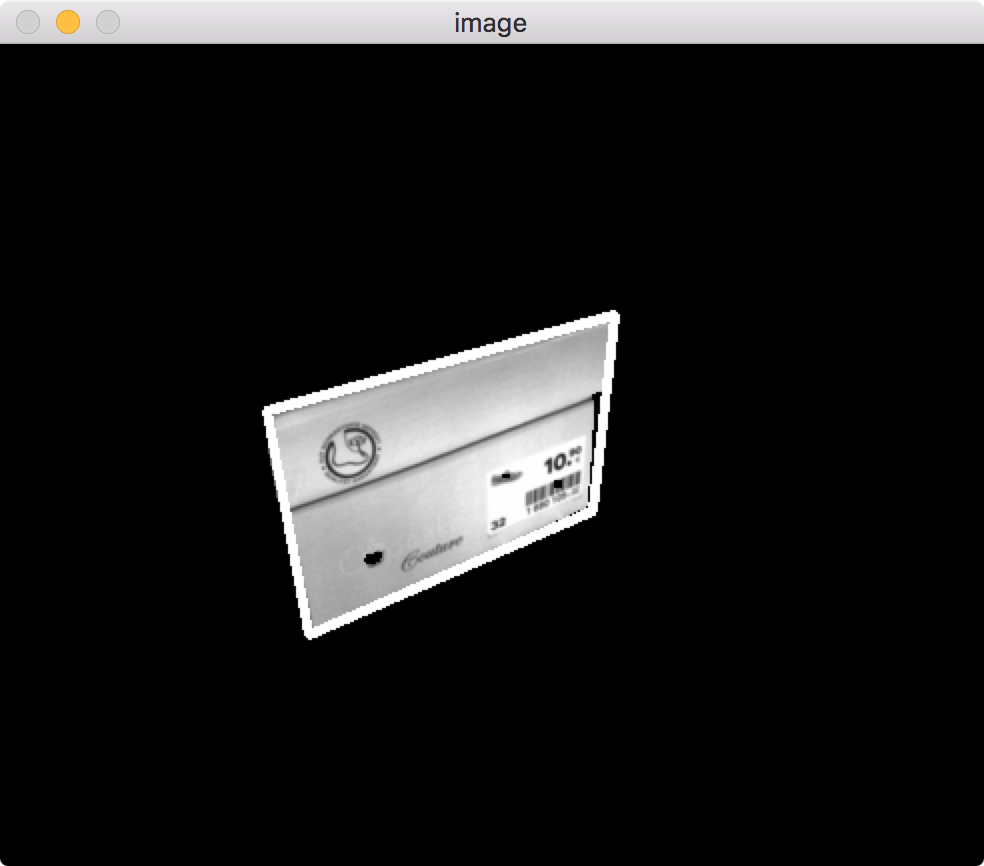
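Dilation with an all-ones 3x3 kernel replaces each pixel with the maximum of its 3x3 neighbourhood, so every iteration grows white regions by one pixel in each direction; with iterations=3, gaps up to about 6 pixels wide between white regions get closed. A minimal NumPy sketch of that idea, assuming pixels outside the image count as black (zero padding); dilate3x3 is a hypothetical helper name, not an OpenCV function:

```python
import numpy as np


def dilate3x3(img: np.ndarray, iterations: int = 1) -> np.ndarray:
    """Grey-level dilation with a 3x3 all-ones kernel (zero padding at the border)."""
    out = img.copy()
    h, w = out.shape
    for _ in range(iterations):
        padded = np.pad(out, 1, mode="constant", constant_values=0)
        # Each output pixel is the max over the 9 shifted copies of the image.
        out = np.max(
            [padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)],
            axis=0,
        )
    return out


# Two white bands split by a 1-pixel black line, like the top/bottom split.
bands = np.full((7, 5), 255, dtype=np.uint8)
bands[3, :] = 0
print(dilate3x3(bands).min())  # 255: the dividing line is gone

# A single white pixel grows into a 7x7 square after 3 iterations.
dot = np.zeros((9, 9), dtype=np.uint8)
dot[4, 4] = 255
print(np.count_nonzero(dilate3x3(dot, iterations=3)))  # 49
```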

Step 3: find contours

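```python
# find contours: external only, straight runs compressed to their end points
# ([-2] picks the contours in OpenCV 3.x, which returns 3 values, and 4.x, which returns 2)
contours = cv2.findContours(dilated, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)[-2]
# contours come back in no particular size order, so sort them largest first
contours = sorted(contours, key=cv2.contourArea, reverse=True)
cv2.drawContours(img, contours, 0, (255, 255, 255), 3)
print("contours:", len(contours))
print("largest contour has", len(contours[0]), "points")
```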

![contours]()

Notice that dilating first, then using simple external contours, gets you the shape you're after, but it's still pretty complex (containing 279 points).
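Why so many points? CHAIN_APPROX_SIMPLE only drops the intermediate pixels of straight horizontal, vertical and diagonal runs and keeps their end points, so a ragged outline still keeps a vertex at every change of direction. Here is a rough pure-Python illustration of that idea, assuming the input is an ordered, closed chain of 8-connected pixel coordinates; compress_chain is a hypothetical name and this is not OpenCV's implementation:

```python
def compress_chain(points):
    """Keep only the pixels where a closed 8-connected chain changes direction."""
    n = len(points)
    kept = []
    for i in range(n):
        (px, py), (cx, cy) = points[i - 1], points[i]  # i - 1 wraps to the end
        nx, ny = points[(i + 1) % n]
        if (cx - px, cy - py) != (nx - cx, ny - cy):
            kept.append(points[i])
    return kept


# Border of an axis-aligned 4x3 pixel rectangle, traced pixel by pixel.
border = [(0, 0), (1, 0), (2, 0), (3, 0), (3, 1),
          (3, 2), (2, 2), (1, 2), (0, 2), (0, 1)]
print(compress_chain(border))  # [(0, 0), (3, 0), (3, 2), (0, 2)]
```

A clean rectangle collapses to its 4 corners, but a jagged, staircase-like edge changes direction almost every pixel, so very few points can be dropped.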

From this point onward you can further process the contour features.

There are a few options available, such as:

a: getting the min. area rectangle

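```python
# minAreaRect
rect = cv2.minAreaRect(contours[0])
box = cv2.boxPoints(rect)  # OpenCV 3+; OpenCV 2.4 used cv2.cv.BoxPoints(rect)
box = np.intp(box)         # np.int0 (an alias of np.intp) was removed in NumPy 2.0
cv2.drawContours(img, [box], 0, (255, 255, 255), 3)
```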

![minAreaRect]()

Can be useful, but not exactly what you need.
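To see why the result is always a true rectangle (so it can't hug a skewed four-sided shape), here is a slow, brute-force sketch of the minimum-area bounding rectangle. It relies on the known property that an optimal rectangle has one side parallel to an edge of the convex hull; every hull edge joins two input points, so trying every point-pair direction is enough. It is O(n^3) and OpenCV's own implementation is far more efficient; min_area_rect is a hypothetical name.

```python
import math


def min_area_rect(points):
    """Return (area, corners) of the smallest rotated rectangle enclosing points."""
    best = None
    for i, (x1, y1) in enumerate(points):
        for x2, y2 in points[i + 1:]:
            length = math.hypot(x2 - x1, y2 - y1)
            if length == 0:
                continue
            ux, uy = (x2 - x1) / length, (y2 - y1) / length  # candidate side direction
            vx, vy = -uy, ux                                  # perpendicular direction
            us = [x * ux + y * uy for x, y in points]         # coordinates along u
            vs = [x * vx + y * vy for x, y in points]         # coordinates along v
            area = (max(us) - min(us)) * (max(vs) - min(vs))
            if best is None or area < best[0]:
                u0, u1, v0, v1 = min(us), max(us), min(vs), max(vs)
                # Map the rectangle corners from (u, v) back to (x, y).
                corners = [(u * ux + v * vx, u * uy + v * vy)
                           for u, v in ((u0, v0), (u1, v0), (u1, v1), (u0, v1))]
                best = (area, corners)
    return best


# A diamond (square rotated by 45 degrees) plus its centre point.
diamond = [(1, 0), (2, 1), (1, 2), (0, 1), (1, 1)]
area, corners = min_area_rect(diamond)
print(round(area, 6))  # 2.0, versus 4 for the axis-aligned bounding box
```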

b: convex hull

```python
# convexHull
hull = cv2.convexHull(contours[0])
cv2.drawContours(img, [hull], 0, (255, 255, 255), 3)
print("convex hull has", len(hull), "points")
```

![convexHull]()

Better, but you still have 22 points to deal with, and it's not as tight as it could be.

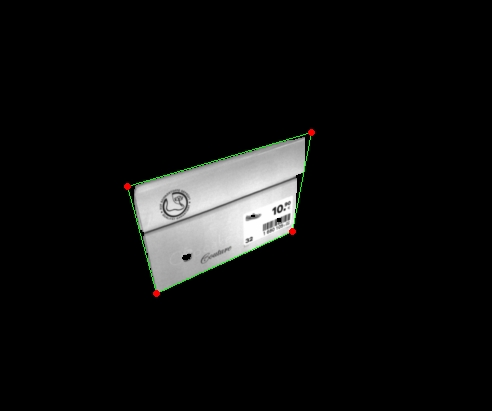
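A convex hull keeps every extreme point of the outline, so each small outward bump in the contour survives as an extra hull vertex, which is why so many points remain. As an illustration of what the hull computation does, here is Andrew's monotone chain algorithm in plain Python. OpenCV documents convexHull as using Sklansky's algorithm, so this is a different (standard) way of getting the same hull; convex_hull is a hypothetical name.

```python
def convex_hull(points):
    """Andrew's monotone chain: hull vertices in counter-clockwise order (y up)."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        # > 0 for a left turn o -> a -> b, < 0 for a right turn, 0 if collinear
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    # The last point of each half is the first point of the other half.
    return lower[:-1] + upper[:-1]


pts = [(0, 0), (4, 0), (4, 4), (0, 4), (2, 2), (1, 3), (2, 0)]
print(convex_hull(pts))  # [(0, 0), (4, 0), (4, 4), (0, 4)]
```

Interior points and points lying on a straight hull edge (such as (2, 0)) are discarded; only the corners survive.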

c: simplify contours

```python
# simplify contours
epsilon = 0.1 * cv2.arcLength(contours[0], True)
approx = cv2.approxPolyDP(contours[0], epsilon, True)
cv2.drawContours(img, [approx], 0, (255, 255, 255), 3)
print("simplified contour has", len(approx), "points")
```

![approxPolyDP]()

This is probably what you're after: just 4 points.

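You can lower the epsilon value if you need more points: epsilon is the maximum distance allowed between the original contour and its approximation, so smaller values keep more vertices.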
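cv2.approxPolyDP implements the Douglas-Peucker algorithm: keep the two end points, find the point farthest from the line between them, and recurse on both halves only if that distance exceeds epsilon. The pure-Python sketch below shows the idea, including the 0.1 * arcLength choice of epsilon. For closed contours it simply splits at the point farthest from the first one; that is an assumption of this sketch, and OpenCV's handling of closed curves differs in detail, so point counts may not match exactly. All function names are hypothetical.

```python
import math


def point_line_distance(p, a, b):
    """Perpendicular distance from p to the infinite line through a and b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    length = math.hypot(dx, dy)
    if length == 0:
        return math.hypot(px - ax, py - ay)
    return abs(dx * (py - ay) - dy * (px - ax)) / length


def douglas_peucker(points, epsilon):
    """Simplify an open polyline; the first and last points are always kept."""
    if len(points) < 3:
        return list(points)
    a, b = points[0], points[-1]
    dists = [point_line_distance(p, a, b) for p in points[1:-1]]
    far = max(range(len(dists)), key=dists.__getitem__) + 1  # index into points
    if dists[far - 1] <= epsilon:
        return [a, b]
    left = douglas_peucker(points[:far + 1], epsilon)
    right = douglas_peucker(points[far:], epsilon)
    return left[:-1] + right  # points[far] appears in both halves


def approx_closed(points, epsilon):
    """Closed contour: split at the point farthest from points[0], simplify each half."""
    far = max(range(len(points)), key=lambda i: math.dist(points[i], points[0]))
    first = douglas_peucker(points[:far + 1], epsilon)
    second = douglas_peucker(points[far:] + [points[0]], epsilon)
    return first[:-1] + second[:-1]


def perimeter(points):
    """Closed-curve length, like cv2.arcLength(contour, True)."""
    return sum(math.dist(points[i - 1], points[i]) for i in range(len(points)))


# A 10x10 square outline sampled every pixel, with a 1-pixel bump on the bottom edge.
square = ([(x, 0) for x in range(10)] + [(10, y) for y in range(10)]
          + [(x, 10) for x in range(10, 0, -1)] + [(0, y) for y in range(10, 0, -1)])
square[5] = (5, 1)

print(approx_closed(square, 0.1 * perimeter(square)))  # [(0, 0), (10, 0), (10, 10), (0, 10)]
print(len(approx_closed(square, 0.5)))                  # 7: a smaller epsilon keeps the bump
```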

Bear in mind that now you have a quad, but the picture is flattened: there's no information on perspective/3D rotation.
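The quad alone doesn't give you the 3D pose, but if you know (or assume) which real-world rectangle it corresponds to, its four corners are enough to compute a perspective transform. In OpenCV that would be cv2.getPerspectiveTransform followed by cv2.warpPerspective; the NumPy sketch below shows the underlying 8x8 linear solve, plus a common sum/difference heuristic for ordering the corners, since approxPolyDP doesn't guarantee they start at the top-left. The quad coordinates, the 100x60 target rectangle and the helper names are all assumptions for illustration.

```python
import numpy as np


def order_corners(pts):
    """Order 4 points as top-left, top-right, bottom-right, bottom-left (image coords)."""
    pts = np.asarray(pts, dtype=np.float64).reshape(4, 2)
    s = pts.sum(axis=1)        # x + y: smallest at top-left, largest at bottom-right
    d = pts[:, 1] - pts[:, 0]  # y - x: smallest at top-right, largest at bottom-left
    return np.array([pts[np.argmin(s)], pts[np.argmin(d)],
                     pts[np.argmax(s)], pts[np.argmax(d)]])


def perspective_matrix(src, dst):
    """3x3 homography H (with H[2, 2] = 1) mapping 4 src points onto 4 dst points."""
    A, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h0*x + h1*y + h2) / (h6*x + h7*y + 1), rearranged to be linear in h
        A.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        A.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = np.linalg.solve(np.array(A, dtype=np.float64), np.array(b, dtype=np.float64))
    return np.append(h, 1.0).reshape(3, 3)


# Hypothetical quad corners in arbitrary order, mapped onto an assumed 100x60 rectangle.
quad = [(95, 70), (10, 12), (5, 80), (90, 8)]
src = order_corners(quad)
dst = np.array([[0, 0], [100, 0], [100, 60], [0, 60]], dtype=np.float64)
H = perspective_matrix(src, dst)
for (x, y), (u, v) in zip(src, dst):
    px, py, pw = H @ np.array([x, y, 1.0])
    print((round(px / pw, 3), round(py / pw, 3)), "->", (u, v))
```

The sum/difference ordering is a heuristic: it can mislabel corners when the quad is rotated close to 45 degrees.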

Full OpenCV Python code listing (comment/uncomment as needed, and use it as a reference to adapt to C++/Java/etc.):

import numpy as np

import cv2

img = cv2.imread('XwzWQ.png',0)

# threshold image

ret,thresh = cv2.threshold(img,127,255,0)

cv2.imshow('threshold ',thresh)

# dilate thresholded image - merges top/bottom

kernel = np.ones((3,3), np.uint8)

dilated = cv2.dilate(thresh, kernel, iterations=3)

cv2.imshow('threshold dilated',dilated)

# find contours

contours, hierarchy = cv2.findContours(dilated,cv2.RETR_EXTERNAL,cv2.CHAIN_APPROX_SIMPLE)

# cv2.drawContours(img, contours, 0, (255,255,255), 3)

print "contours:",len(contours)

print "largest contour has ",len(contours[0]),"points"

# minAreaRect

# rect = cv2.minAreaRect(contours[0])

# box = cv2.cv.BoxPoints(rect)

# box = np.int0(box)

# cv2.drawContours(img,[box],0,(255,255,255),3)

# convexHull

# hull = cv2.convexHull(contours[0])

# cv2.drawContours(img, [hull], 0, (255,255,255), 3)

# print "convex hull has ",len(hull),"points"

# simplify contours

epsilon = 0.1*cv2.arcLength(contours[0],True)

approx = cv2.approxPolyDP(contours[0],epsilon,True)

cv2.drawContours(img, [approx], 0, (255,255,255), 3)

print "simplified contour has",len(approx),"points"

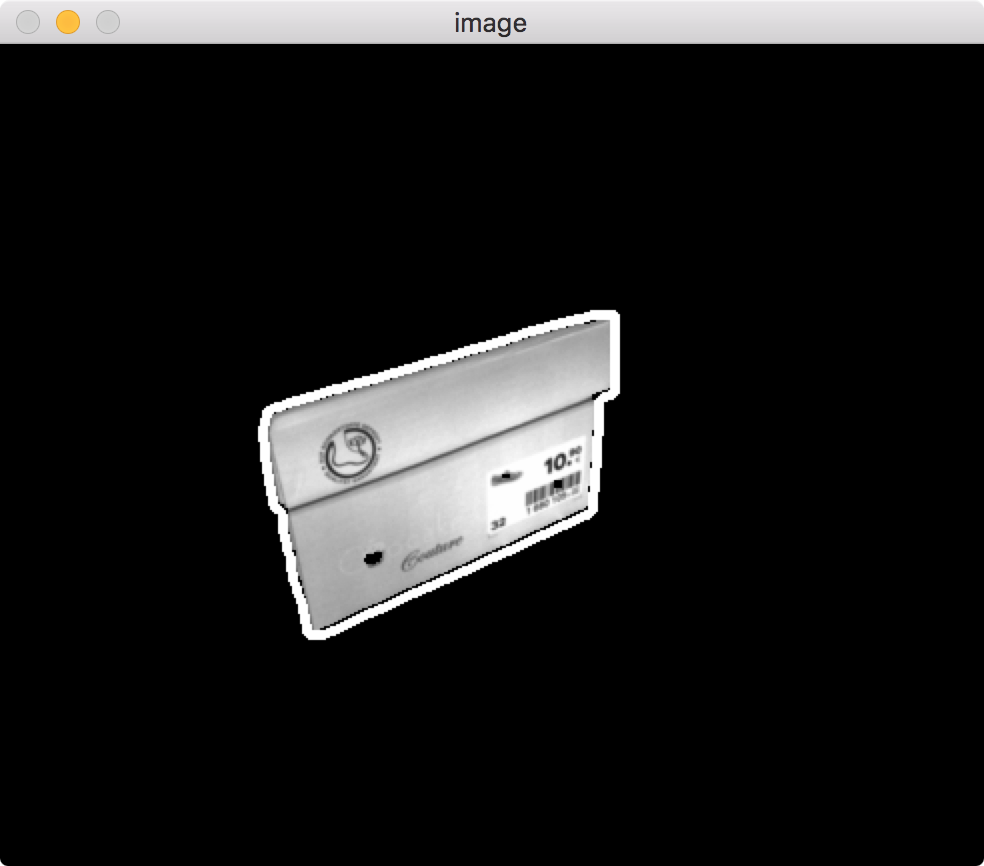

# display output

cv2.imshow('image',img)

cv2.waitKey(0)

cv2.destroyAllWindows()