Linear Regression

There is a standard formula for N-dimensional linear regression given by

![Normal Equation for N-dimensional linear Regression]()

Where the result, ![enter image description here]() is a vector of size n + 1 giving the coefficients of the function that best fits the data.

is a vector of size n + 1 giving the coefficients of the function that best fits the data.

In your case n = 3. While X is a mx(n+1) matrix called the design matrix -- in your case mx4. To construct the design matrix, you simply have to copy each data point coordinate value (x1,x2,...) into a row of X and in addition, place the number 1 in column 1 on each row. The vector y has the values associated with those coordinates. The terms ![enter image description here]() and

and ![enter image description here]() are the "transpose of X" and the "inverse of the product of the transpose of X and X." That last term can be computationally intensive to obtain because to invert a matrix is O(n^3), but for you n = 4, as long as n less than say 5000, no problem.

are the "transpose of X" and the "inverse of the product of the transpose of X and X." That last term can be computationally intensive to obtain because to invert a matrix is O(n^3), but for you n = 4, as long as n less than say 5000, no problem.

An example

Lets say you have data points (6,4,11) = 20, (8,5,15) = 30, (12,9,25) = 50, and (2,1,3) = 7.

In that case,

![enter image description here]()

Then you simply have to multiply things out and you can get ![]() directly. Multiplying matrices is straightforward and though more complicated, taking the inverse of a matrix is fairly straightforward (see here for example). However, for scientific computing languages like Matlab, Octave, and Julia (which I'll illustrate with) it's a one-liner.

directly. Multiplying matrices is straightforward and though more complicated, taking the inverse of a matrix is fairly straightforward (see here for example). However, for scientific computing languages like Matlab, Octave, and Julia (which I'll illustrate with) it's a one-liner.

julia> X = [1 6 4 11; 1 8 5 15; 1 12 9 25; 1 2 1 3]

4x4 Array{Int64,2}:

1 6 4 11

1 8 5 15

1 12 9 25

1 2 1 3

julia> y = [20;30;50;7]

4-element Array{Int64,1}:

20

30

50

7

julia> T = pinv(X'*X)*X'*y

4-element Array{Float64,1}:

4.0

-5.5

-7.0

7.0

Verifying...

julia> 12*(-5.5) + 9*(-7.0) + 25*(7) + 4

50.0

In Julia, Matlab, and Octave matrices can be multiplied simply by using *, while the transpose operator is '. Note here that I used pinv (the pseudo inverse) which is necessary (not this time) when the data is too redundant and gives rise to a non invertable X-Xtranspose, keep that in mind if you choose to implement matrix inversion yourself.

Instead PCA

Principal Component Analysis (PCA) is a technique for dimensionality reduction, the object is to find a k-dimensional space from an n dimensional space such that the projection error is minimized. In the general case, n and k are arbitrary, but in this case n = 3 and k = 1. There are 4 main steps.

Step 1: Data preprocessing

For the standard method to work, one must first perform mean normalization and also possibly scale the data so that the algorithm doesn't fail from floating point error. In the latter case, that means if the range of values of one dimension are huge relative to another there could be problem (like -1000 to 1000 in one dimension versus -0.1 to 0.2). Usually they're close enough though.Mean normalization simply mean for each dimension, subtract the average from each datapoint so that the resulting data set is centered around the origin. Take the result and store each data point (x1,x2,...xn) as a row in one big matrix X.

X = [ 6 4 11; 8 5 15; 12 9 25; 2 1 3]

4x3 Array{Int64,2}:

6 4 11

8 5 15

12 9 25

2 1 3

find the averages

y = convert(Array{Float64,1},([sum(X[1:4,x]) for x = 1:3])/4')

3-element Array{Float64,1}:

7.0

4.75

13.5

Normalize...

julia> Xm = X .- y'

4x3 Array{Float64,2}:

-1.0 -0.75 -2.5

1.0 0.25 1.5

5.0 4.25 11.5

-5.0 -3.75 -10.5

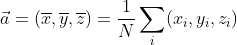

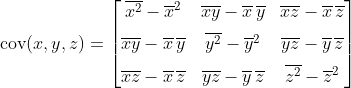

Step 2: Calculate to covariance matrix

The covariance matrix sigma is simply

![enter image description here]()

where m is the number of data points.

Step 3: Perform singular value decomposition

Here it's best to just find a library that takes the covariance matrix and spits out the answer. There are many and here are some of them; in python in R, in Java, and of course in Octave, Julia, Matlab (like R) it's another one liner svd.

Perform SVD on the covariance matrix

(U,S,V) = svd((1/4)*Xm'*Xm);

Step 4: Find the line

Take the first component (for k dimensions, you would take the first k components)

Ureduce = U[:,1]

3-element Array{Float64,1}:

-0.393041

-0.311878

-0.865015

This is the line that minimizes the projection error

Extra Credit: Going back

You can even recover the approximation of the original values, but they will all be lined up and projected on the same line. Connect the dots to get a line segment.

Obtain the reduced dimension of each of the data points in X (since 1-D will each be 1 value):

z= Ureduce' * Xm'

1x4 Array{Float64,2}:

2.78949 -1.76853 -13.2384 12.2174

Go back the other way; the original values but all lying on the same (optimal) line

julia> (Ureduce .* z .+ y)'

4x3 Array{Float64,2}:

5.90362 3.88002 11.0871 6 4 11

7.69511 5.30157 15.0298 versus 8 5 15

12.2032 8.87875 24.9514 12 9 25

2.19806 0.939664 2.93176 2 1 3

is a vector of size n + 1 giving the coefficients of the function that best fits the data.

is a vector of size n + 1 giving the coefficients of the function that best fits the data.  and

and  are the "transpose of X" and the "inverse of the product of the transpose of X and X." That last term can be computationally intensive to obtain because to invert a matrix is O(n^3), but for you n = 4, as long as n less than say 5000, no problem.

are the "transpose of X" and the "inverse of the product of the transpose of X and X." That last term can be computationally intensive to obtain because to invert a matrix is O(n^3), but for you n = 4, as long as n less than say 5000, no problem.