I have a simple Python app running in a container on Google Kubernetes Engine. I am trying to connect the standard Python logging to Google Stackdriver logging using this guide. I have almost succeeded, but I am getting duplicate log entries with one always at the 'error' level...

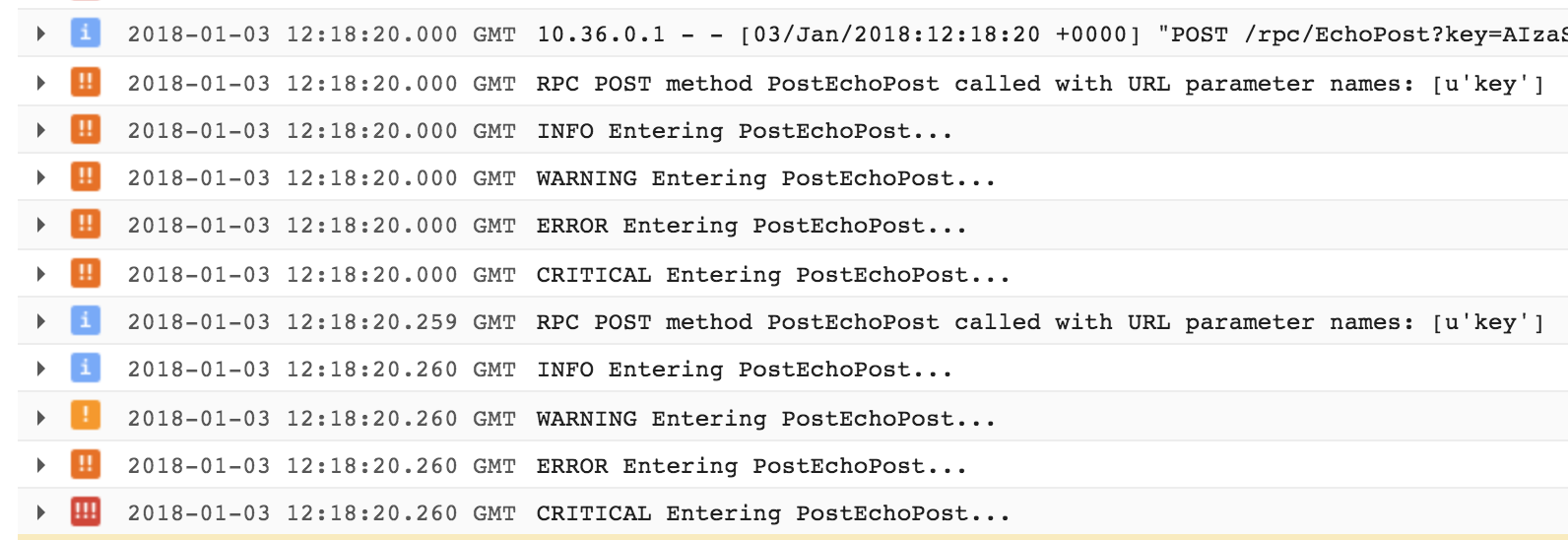

Screenshot of Stackdriver logs showing duplicate entries

This is my Python code that sets up the logging according to the above guide:

    import webapp2
    from paste import httpserver
    import rpc

    # Imports the Google Cloud client library
    import google.cloud.logging

    # Instantiates a client
    client = google.cloud.logging.Client()

    # Connects the logger to the root logging handler; by default this captures
    # all logs at INFO level and higher
    client.setup_logging()

    app = webapp2.WSGIApplication([('/rpc/([A-Za-z]+)', rpc.RpcHandler),], debug=True)
    httpserver.serve(app, host='0.0.0.0', port='80')

Here's the code that triggers the logs from the screenshot:

    import logging

    logging.info("INFO Entering PostEchoPost...")
    logging.warning("WARNING Entering PostEchoPost...")
    logging.error("ERROR Entering PostEchoPost...")
    logging.critical("CRITICAL Entering PostEchoPost...")

Here is the full Stackdriver log, expanded from the screenshot, with an incorrectly interpreted ERROR level:

    {
     insertId: "1mk4fkaga4m63w1"
     labels: {
      compute.googleapis.com/resource_name: "gke-alg-microservice-default-pool-xxxxxxxxxx-ttnz"
      container.googleapis.com/namespace_name: "default"
      container.googleapis.com/pod_name: "esp-alg-xxxxxxxxxx-xj2p2"
      container.googleapis.com/stream: "stderr"
     }
     logName: "projects/projectname/logs/algorithm"
     receiveTimestamp: "2018-01-03T12:18:22.479058645Z"
     resource: {
      labels: {
       cluster_name: "alg-microservice"
       container_name: "alg"
       instance_id: "703849119xxxxxxxxxx"
       namespace_id: "default"
       pod_id: "esp-alg-xxxxxxxxxx-xj2p2"
       project_id: "projectname"
       zone: "europe-west1-b"
      }
      type: "container"
     }
     severity: "ERROR"
     textPayload: "INFO Entering PostEchoPost...
    "
     timestamp: "2018-01-03T12:18:20Z"
    }

Here is the full Stackdriver log, expanded from the screenshot, with a correctly interpreted INFO level:

    {
     insertId: "1mk4fkaga4m63w0"
     jsonPayload: {
      message: "INFO Entering PostEchoPost..."
      thread: 140348659595008
     }
     labels: {
      compute.googleapis.com/resource_name: "gke-alg-microservi-default-pool-xxxxxxxxxx-ttnz"
      container.googleapis.com/namespace_name: "default"
      container.googleapis.com/pod_name: "esp-alg-xxxxxxxxxx-xj2p2"
      container.googleapis.com/stream: "stderr"
     }
     logName: "projects/projectname/logs/algorithm"
     receiveTimestamp: "2018-01-03T12:18:22.479058645Z"
     resource: {
      labels: {
       cluster_name: "alg-microservice"
       container_name: "alg"
       instance_id: "703849119xxxxxxxxxx"
       namespace_id: "default"
       pod_id: "esp-alg-xxxxxxxxxx-xj2p2"
       project_id: "projectname"
       zone: "europe-west1-b"
      }
      type: "container"
     }
     severity: "INFO"
     timestamp: "2018-01-03T12:18:20.260099887Z"
    }

So, this entry might be the key:

    container.googleapis.com/stream: "stderr"

It looks like, in addition to my logging setup working, all logs from the container are also being sent to stderr in the container, and I believe that by default, at least on Google Kubernetes Engine, everything written to stdout/stderr is picked up by Google Stackdriver via Fluentd... Having said that, I'm out of my depth at this point.
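To make that hypothesis concrete, here is a minimal sketch using only the standard library. It is not the actual `google.cloud.logging` setup: `ListHandler` is a hypothetical stand-in for a handler that ships records to a logging API, and an in-memory buffer stands in for the container's stderr. It only shows that a logger with two handlers emits every record twice, and `describe_handlers` is a hypothetical helper for checking which handlers are attached.

```python
import io
import logging
import sys


class ListHandler(logging.Handler):
    """Hypothetical stand-in for a handler that sends records to a logging API."""

    def __init__(self):
        super().__init__()
        self.records = []

    def emit(self, record):
        self.records.append(record.getMessage())


def describe_handlers(logger):
    """Return (handler class name, 'stderr'/'stdout'/None) for each handler."""
    described = []
    for handler in logger.handlers:
        stream = getattr(handler, "stream", None)
        if stream is sys.stderr:
            target = "stderr"
        elif stream is sys.stdout:
            target = "stdout"
        else:
            target = None
        described.append((type(handler).__name__, target))
    return described


# Use a private logger so the demo does not disturb the root logger.
demo_logger = logging.getLogger("duplicate_demo")
demo_logger.setLevel(logging.INFO)
demo_logger.propagate = False

api_handler = ListHandler()
captured_stderr = io.StringIO()  # stands in for the container's stderr
stream_handler = logging.StreamHandler(captured_stderr)

demo_logger.addHandler(api_handler)
demo_logger.addHandler(stream_handler)

# One call, two handlers: the record is delivered to both of them.
demo_logger.info("INFO Entering PostEchoPost...")

print(api_handler.records)
print(repr(captured_stderr.getvalue()))
print(describe_handlers(demo_logger))
```

In the real app, calling `describe_handlers(logging.getLogger())` right after `client.setup_logging()` should show whether a stream handler writing to stderr is attached to the root logger next to the Stackdriver one. The stream copy in this sketch ends with a newline, which at least resembles the `textPayload` of the duplicate entry.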

Any ideas why I am getting these duplicate entries?