Consider the following program, in which the HttpRequestMessage, HttpResponseMessage, and HttpClient objects are all disposed properly. It always ends up holding about 50MB of memory at the end, even after a collection. Add a zero to the number of requests, and the unreclaimed memory doubles.

    class Program
    {
        static void Main(string[] args)
        {
            var client = new HttpClient
            {
                BaseAddress = new Uri("http://localhost:5000/")
            };

            var t = Task.Run(async () =>
            {
                var resps = new List<Task<HttpResponseMessage>>();
                var postProcessing = new List<Task>();

                for (int i = 0; i < 10000; i++)
                {
                    Console.WriteLine("Firing..");
                    var req = new HttpRequestMessage(HttpMethod.Get, "test/delay/5");
                    var tsk = client.SendAsync(req);
                    resps.Add(tsk);

                    postProcessing.Add(tsk.ContinueWith(async ts =>
                    {
                        req.Dispose();
                        var resp = ts.Result;
                        var content = await resp.Content.ReadAsStringAsync();
                        resp.Dispose();
                        Console.WriteLine(content);
                    }));
                }

                await Task.WhenAll(resps);
                resps.Clear();
                Console.WriteLine("All requests done.");

                await Task.WhenAll(postProcessing);
                postProcessing.Clear();
                Console.WriteLine("All postprocessing done.");
            });

            t.Wait();
            Console.Clear();

            var t2 = Task.Run(async () =>
            {
                var resps = new List<Task<HttpResponseMessage>>();
                var postProcessing = new List<Task>();

                for (int i = 0; i < 10000; i++)
                {
                    Console.WriteLine("Firing..");
                    var req = new HttpRequestMessage(HttpMethod.Get, "test/delay/5");
                    var tsk = client.SendAsync(req);
                    resps.Add(tsk);

                    postProcessing.Add(tsk.ContinueWith(async ts =>
                    {
                        var resp = ts.Result;
                        var content = await resp.Content.ReadAsStringAsync();
                        Console.WriteLine(content);
                    }));
                }

                await Task.WhenAll(resps);
                resps.Clear();
                Console.WriteLine("All requests done.");

                await Task.WhenAll(postProcessing);
                postProcessing.Clear();
                Console.WriteLine("All postprocessing done.");
            });

            t2.Wait();
            Console.Clear();

            client.Dispose();
            GC.Collect();

            Console.WriteLine("Done");
            Console.ReadLine();
        }
    }

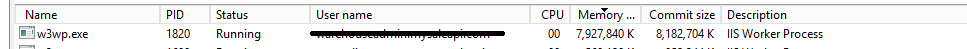

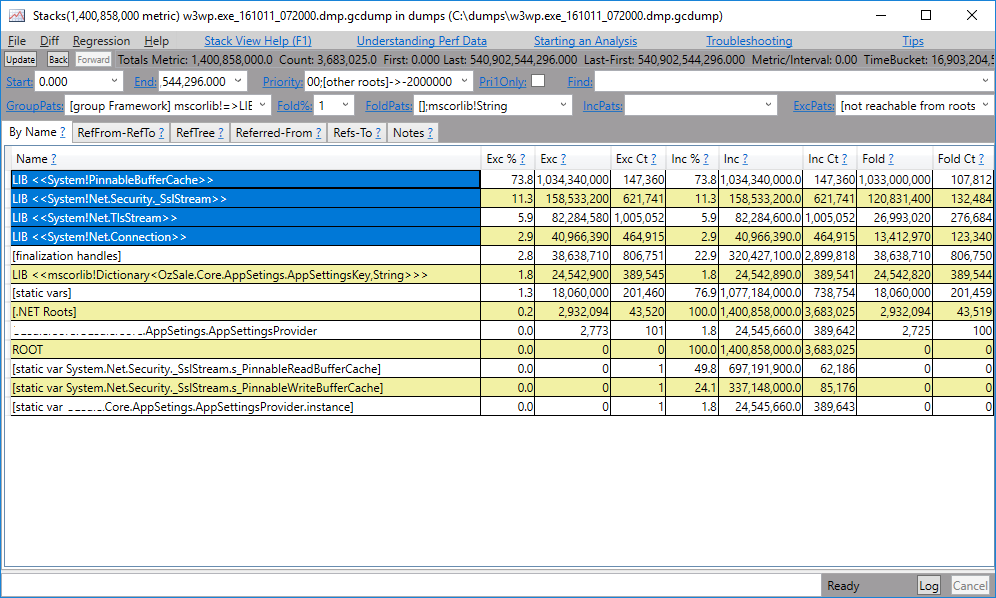

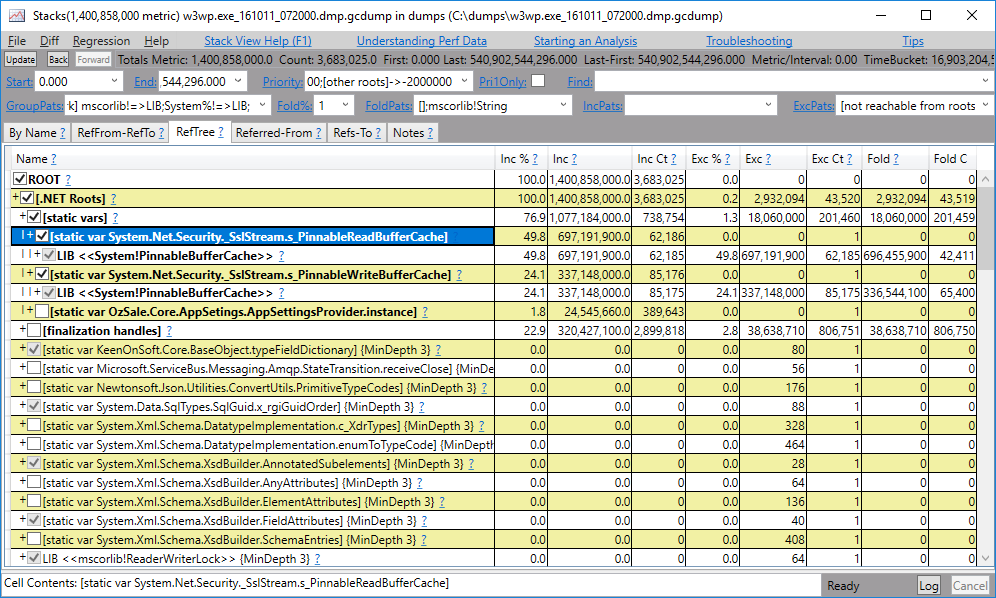

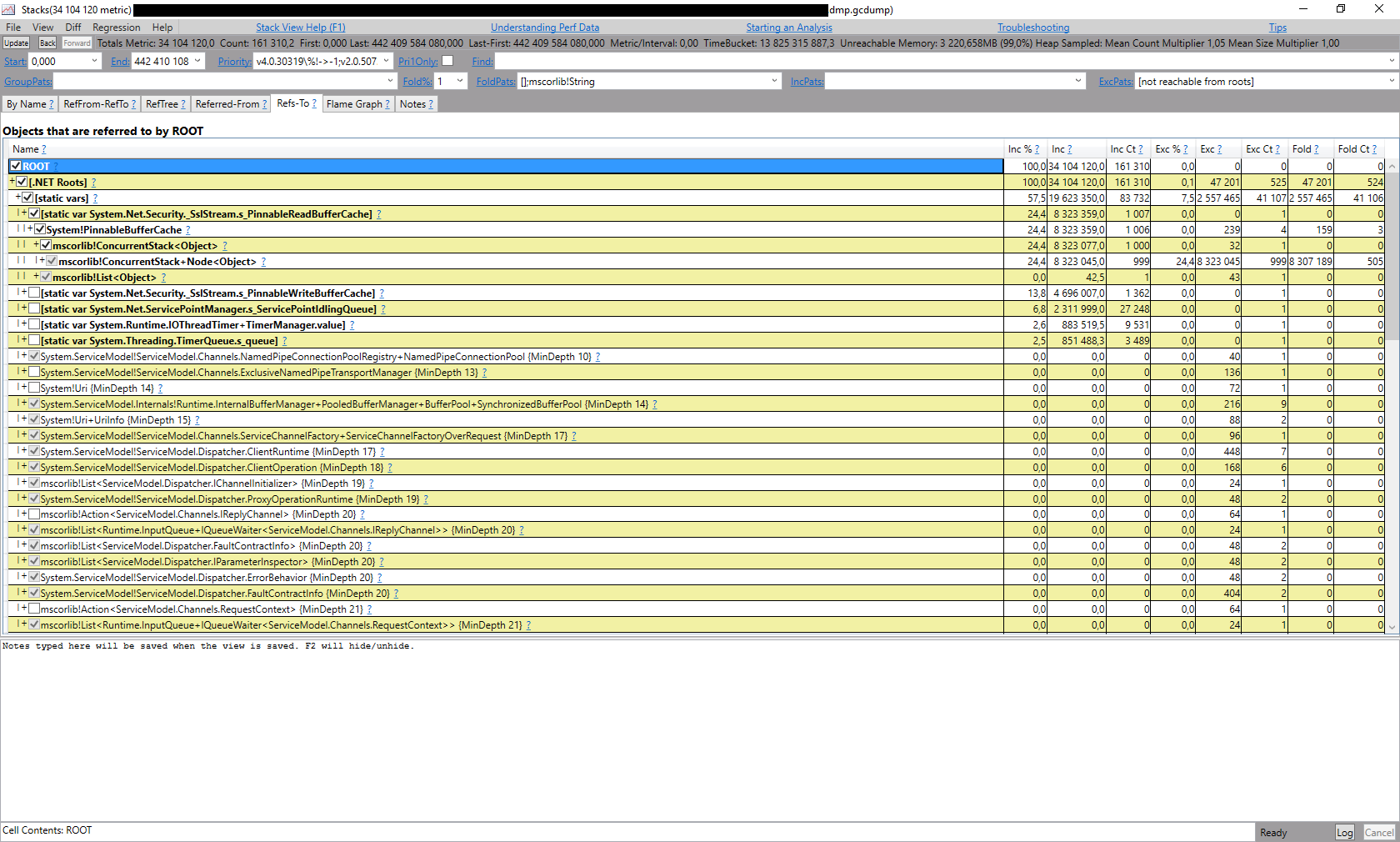

On a quick investigation with a memory profiler, it seems that the objects that take up the memory are all of the type Node<Object> inside mscorlib.

My initial thought was that it was some internal dictionary or stack, since those are the types that use Node as an internal structure, but I was unable to turn up any results for a generic Node<T> in the reference source, since this is actually a Node<object> type.

Is this a bug, or some kind of expected optimization? (I wouldn't consider memory that grows in proportion to the workload and is always retained to be an optimization in any way.) And, purely academically, what is Node<Object>?

Any help in understanding this would be much appreciated. Thanks :)

Update: To extrapolate the results to a much larger test set, I optimized the program slightly by throttling it.

Here's the changed program. It now seems to stay consistent at 60-70MB for a set of 1 million requests. I'm still baffled as to what those Node<object>s really are, and why it's allowed to maintain such a high number of irreclaimable objects.

The differences between these two results lead me to guess that this may not really be an issue with HttpClient or WebRequest, but rather something rooted directly in async, since the real variable between these two tests is the number of incomplete async tasks that exist at a given point in time. This is merely speculation from a quick inspection.

    static void Main(string[] args)
    {
        Console.WriteLine("Ready to start.");
        Console.ReadLine();

        var client = new HttpClient
        {
            BaseAddress = new Uri("http://localhost:5000/")
        };

        var t = Task.Run(async () =>
        {
            var resps = new List<Task<HttpResponseMessage>>();
            var postProcessing = new List<Task>();

            for (int i = 0; i < 1000000; i++)
            {
                //Console.WriteLine("Firing..");
                var req = new HttpRequestMessage(HttpMethod.Get, "test/delay/5");
                var tsk = client.SendAsync(req);
                resps.Add(tsk);

                var n = i;
                postProcessing.Add(tsk.ContinueWith(async ts =>
                {
                    var resp = ts.Result;
                    var content = await resp.Content.ReadAsStringAsync();
                    if (n%1000 == 0)
                    {
                        Console.WriteLine("Requests processed: " + n);
                    }
                    //Console.WriteLine(content);
                }));

                if (n%20000 == 0)
                {
                    await Task.WhenAll(resps);
                    resps.Clear();
                }
            }

            await Task.WhenAll(resps);
            resps.Clear();
            Console.WriteLine("All requests done.");

            await Task.WhenAll(postProcessing);
            postProcessing.Clear();
            Console.WriteLine("All postprocessing done.");
        });

        t.Wait();
        Console.Clear();

        client.Dispose();
        GC.Collect();

        Console.WriteLine("Done");
        Console.ReadLine();
    }
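
The program above throttles by awaiting the whole batch every 20,000 requests. For comparison, here is a minimal sketch of another way to bound the number of in-flight operations, using SemaphoreSlim. It is not the code used for the measurements above: the ThrottleSketch class and its RunAsync method are hypothetical names, and Task.Delay stands in for client.SendAsync so the sketch runs without the local test server.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical helper, not part of the original test program.
public static class ThrottleSketch
{
    // Starts `total` simulated requests while keeping at most `maxInFlight`
    // of them pending at once. Returns the highest number of simultaneously
    // pending requests that was observed.
    public static async Task<int> RunAsync(int total, int maxInFlight)
    {
        using (var gate = new SemaphoreSlim(maxInFlight))
        {
            var sync = new object();
            var pending = new List<Task>(total);
            int inFlight = 0, peak = 0;

            Func<Task> sendOne = async () =>
            {
                lock (sync)
                {
                    inFlight++;
                    if (inFlight > peak) peak = inFlight;
                }
                try
                {
                    // Assumption: stand-in for client.SendAsync(req).
                    await Task.Delay(5);
                }
                finally
                {
                    lock (sync) { inFlight--; }
                    gate.Release(); // let the loop start the next request
                }
            };

            for (int i = 0; i < total; i++)
            {
                // Waits asynchronously once maxInFlight requests are pending.
                await gate.WaitAsync();
                pending.Add(sendOne());
            }

            // Note: the list still holds one Task per request until here.
            await Task.WhenAll(pending);
            return peak;
        }
    }
}
```

Calling `ThrottleSketch.RunAsync(1000000, 20000)` would roughly mirror the batch size used above, but with a sliding window rather than stop-and-wait batches; whether that changes the retained memory is something I have not measured.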

HttpClient, HttpRequestMessage and HttpResponseMessage are all disposable, but you only dispose HttpClient. Dispose everything that needs disposing (through using), then check again. (It may very well still have allocated Node<> objects, but at least the disposables won't be confusing the issue.) – Bring

Node<T> seems to come from concurrent collections. – Semiquaver

t.Dispose() help? – Semiquaver
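
A minimal sketch of what the first comment suggests, with the request, the response, and the client each wrapped in `using`, and the ContinueWith callback replaced by a plain awaited method. The names StubHandler and DisposeSketch are hypothetical, and StubHandler is only an assumed stand-in for the local test server so the sketch runs offline; against the real server you would construct HttpClient without it. This only removes the disposables as a variable and makes no claim about the Node<object> instances.

```csharp
using System;
using System.Net;
using System.Net.Http;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical stand-in for the server at http://localhost:5000/.
public sealed class StubHandler : HttpMessageHandler
{
    protected override Task<HttpResponseMessage> SendAsync(
        HttpRequestMessage request, CancellationToken cancellationToken)
    {
        var response = new HttpResponseMessage(HttpStatusCode.OK)
        {
            Content = new StringContent("ok"),
            RequestMessage = request
        };
        return Task.FromResult(response);
    }
}

public static class DisposeSketch
{
    // Sends one GET; both the request and the response are disposed
    // when the using blocks exit, even if reading the body throws.
    public static async Task<string> GetOnceAsync(HttpClient client, string path)
    {
        using (var req = new HttpRequestMessage(HttpMethod.Get, path))
        using (var resp = await client.SendAsync(req))
        {
            return await resp.Content.ReadAsStringAsync();
        }
    }

    // Fires `count` requests and returns how many bodies were read.
    public static async Task<int> RunAsync(int count)
    {
        using (var client = new HttpClient(new StubHandler())
        {
            BaseAddress = new Uri("http://localhost:5000/")
        })
        {
            var work = new Task<string>[count];
            for (int i = 0; i < count; i++)
            {
                work[i] = GetOnceAsync(client, "test/delay/5");
            }

            // Awaiting the method's own task covers the body read as well,
            // so no separate post-processing list is needed.
            string[] bodies = await Task.WhenAll(work);
            return bodies.Length;
        }
    }
}
```

Because each call is an ordinary async method rather than an async lambda passed to ContinueWith, awaiting the returned task waits for the whole request, including reading the content and disposing the response.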