First Stack Overflow question here, so I hope I'm doing this correctly.

I need to use an external Python library, openpyxl, in AWS Glue.

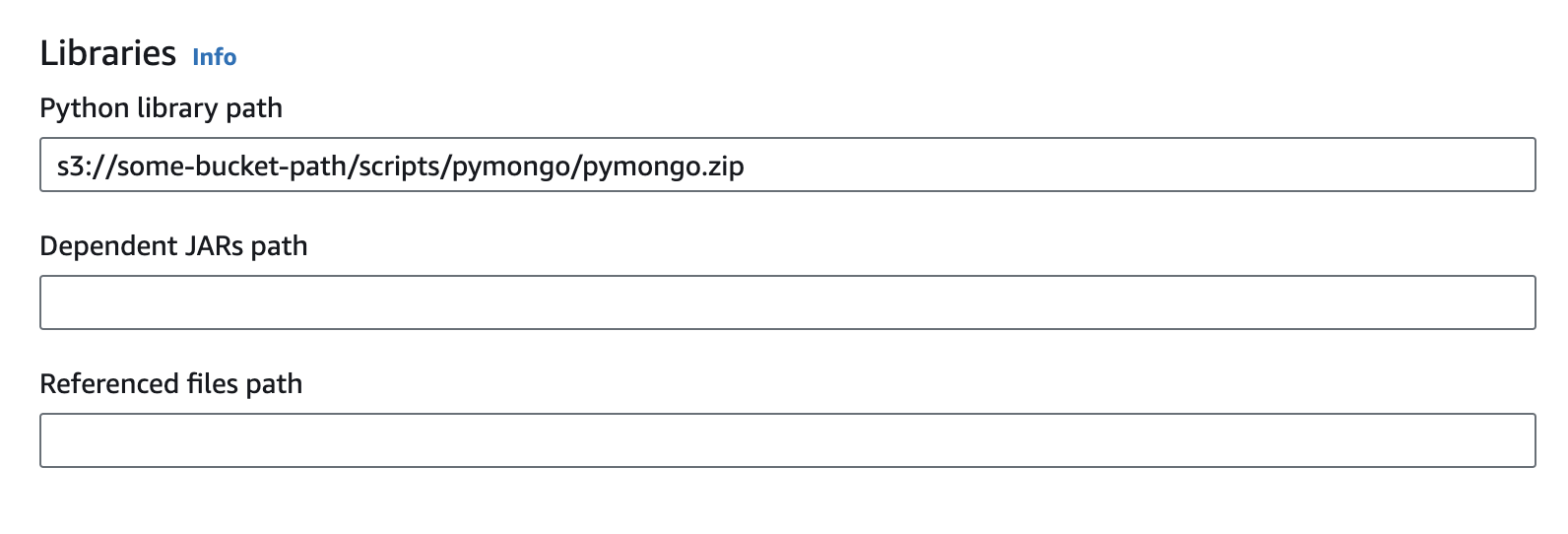

I followed these directions: https://docs.aws.amazon.com/glue/latest/dg/aws-glue-programming-python-libraries.html

However, after saving my zip file in the correct S3 location and pointing my Glue job to that location, I'm not sure what to actually write in the script.

I tried the usual `import openpyxl`, but that just returns the following error:

ImportError: No module named openpyxl
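One thing that may be worth ruling out is the layout of the zip itself. Python can only import a package from a zip archive if the package directory sits at the top level of the archive (for example `openpyxl/__init__.py`, not `site-packages/openpyxl/__init__.py`). Below is a minimal sketch for checking that locally before uploading. The function name `top_level_packages` is hypothetical, and I'm assuming Glue puts the zip on the import path in the standard Python way, which I have not confirmed.

```python
import zipfile


def top_level_packages(zip_path):
    """Return sorted names of packages found at the top level of a zip.

    A top-level package is a directory directly under the archive root
    that contains an __init__.py file, e.g. "openpyxl/__init__.py".
    """
    with zipfile.ZipFile(zip_path) as zf:
        names = zf.namelist()

    packages = set()
    for name in names:
        parts = name.split("/")
        # Exactly two parts means "<package>/__init__.py" at the archive root.
        if len(parts) == 2 and parts[1] == "__init__.py":
            packages.add(parts[0])
    return sorted(packages)
```

Calling `top_level_packages("openpyxl.zip")` (the file name is just a placeholder for whatever was uploaded) should list `openpyxl` if the archive is laid out so that `import openpyxl` can find it. An empty list would suggest the package is nested one directory too deep.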

Obviously I don't know what to do here. I'm also relatively new to programming, so I'm not sure if this is a noob question or what. Thanks in advance!