There are a few variables in play here, so nobody can tell you "Above X clients, you need to use a SignalR service." Depending on how your solution is provisioned, one component or another may be the limiting factor.

For example, the App Service service limits show the maximum number of web sockets per Web App instance. For the Basic tier, it's 350. When you need 351, your options are:

- Scale up your App Service Plan to Standard or higher.

- Add an additional instance and use a Redis or Service Bus backplane.

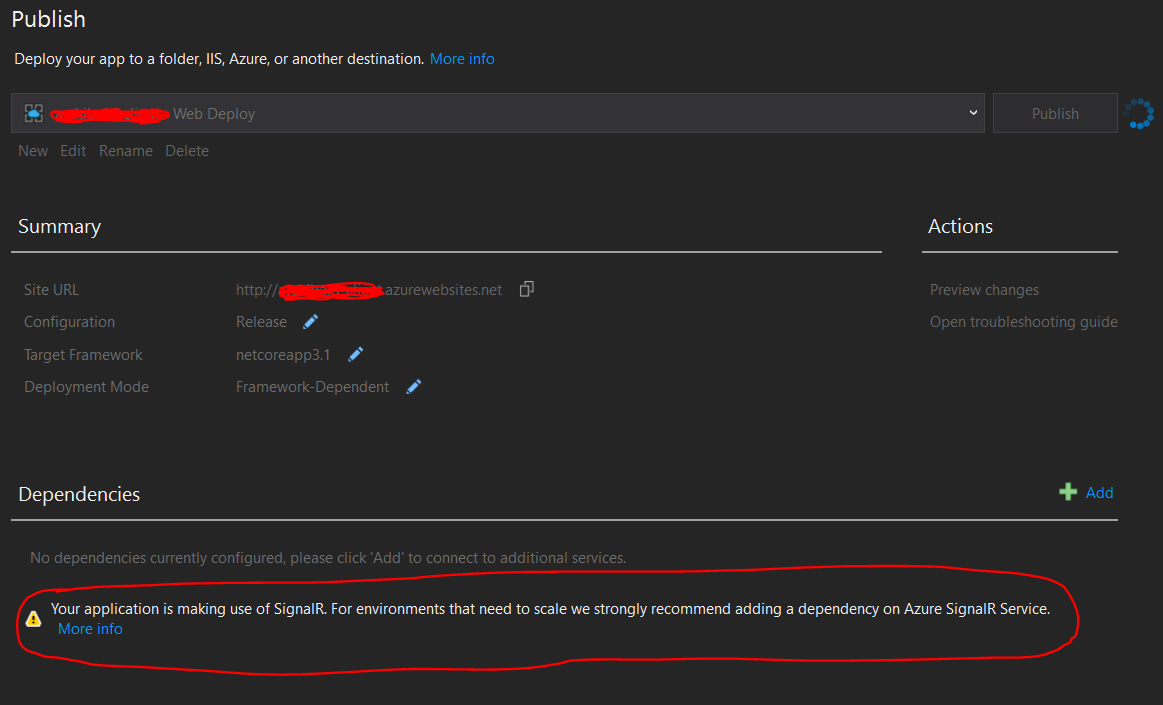

- Use SignalR service.

- Disable websockets from SignalR and rely on something like long polling, which is limited by server resources.

After you go to the Standard service tier and scale out to multiple Web App instances, you can get pretty far hosting SignalR yourself. We've run over 5K concurrently connected clients this way with four Standard S3 instances. Four is a misleading number because we needed the horsepower for other portions of our app, not just SignalR.

When hosting SignalR yourself, it imposes some constraints and there are various creative ways you can hang yourself. For example, using SignalR netcore, you're required to have an ARR affinity token for a multi-instance environment. That sucks. And I once implemented tight polling reconnect after a connection was closed from the front end. It was fun when our servers went down for over two minutes, came back up, and we had a few thousand web browsers tight polling trying to reconnect. And in the standard tier Web App, it's really hard to get a handle on just what percentage of memory and CPU multiple websocket connections are consuming.

So after saying all of this, the answer is "it depends on a lot of things." Having done this both ways, I'd go ahead and use SignalR service.