Your problem can easily be conceived as a graph problem where we have to find the shortest path between two nodes.

To convert your dependency parse in a graph, we first have to deal with the fact that it comes as a string. You want to get this:

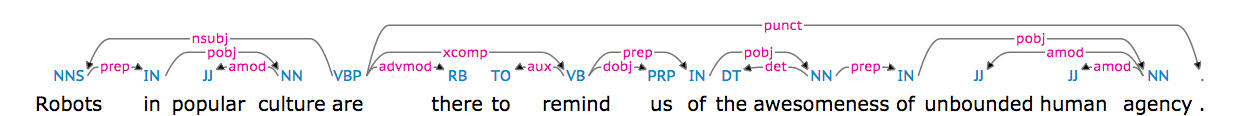

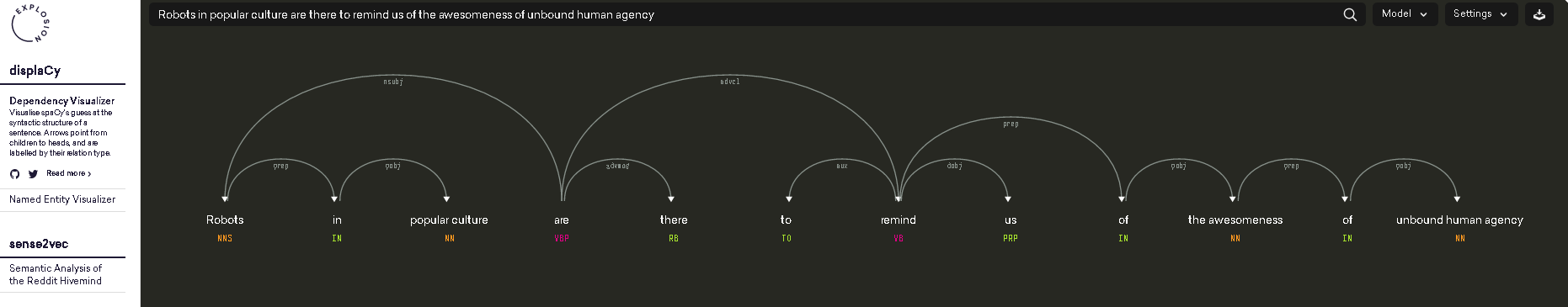

'nsubj(are-5, Robots-1)\nxsubj(remind-8, Robots-1)\namod(culture-4, popular-3)\nprep_in(Robots-1, culture-4)\nroot(ROOT-0, are-5)\nadvmod(are-5, there-6)\naux(remind-8, to-7)\nxcomp(are-5, remind-8)\ndobj(remind-8, us-9)\ndet(awesomeness-12, the-11)\nprep_of(remind-8, awesomeness-12)\namod(agency-16, unbound-14)\namod(agency-16, human-15)\nprep_of(awesomeness-12, agency-16)'

to look like this:

[('are-5', 'Robots-1'), ('remind-8', 'Robots-1'), ('culture-4', 'popular-3'), ('Robots-1', 'culture-4'), ('ROOT-0', 'are-5'), ('are-5', 'there-6'), ('remind-8', 'to-7'), ('are-5', 'remind-8'), ('remind-8', 'us-9'), ('awesomeness-12', 'the-11'), ('remind-8', 'awesomeness-12'), ('agency-16', 'unbound-14'), ('agency-16', 'human-15'), ('awesomeness-12', 'agency-16')]

This way you can feed the tuple list to a graph constructor from the networkx module that will analyze the list and build a graph for you, plus give you a neat method that gives you the length of the shortest path between two given nodes.

Necessary imports

import re

import networkx as nx

from practnlptools.tools import Annotator

How to get your string in the desired tuple list format

annotator = Annotator()

text = """Robots in popular culture are there to remind us of the awesomeness of unbound human agency."""

dep_parse = annotator.getAnnotations(text, dep_parse=True)['dep_parse']

dp_list = dep_parse.split('\n')

pattern = re.compile(r'.+?\((.+?), (.+?)\)')

edges = []

for dep in dp_list:

m = pattern.search(dep)

edges.append((m.group(1), m.group(2)))

How to build the graph

graph = nx.Graph(edges) # Well that was easy

How to compute shortest path length

print(nx.shortest_path_length(graph, source='Robots-1', target='awesomeness-12'))

This script will reveal that the shortest path given the dependency parse is actually of length 2, since you can get from Robots-1 to awesomeness-12 by going through remind-8

1. xsubj(remind-8, Robots-1)

2. prep_of(remind-8, awesomeness-12)

If you don't like this result, you might want to think about filtering some dependencies, in this case not allow the xsubj dependency to be added to the graph.