So its much more than just caching. Aaronman covered a lot so ill only add what he missed.

Raw performance w/o caching is 2-10x faster due to a generally more efficient and well archetected framework. E.g. 1 jvm per node with akka threads is better than forking a whole process for each task.

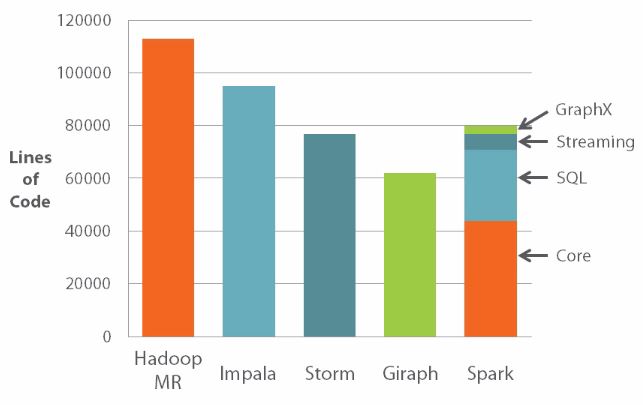

Scala API. Scala stands for Scalable Language and is clearly the best language to choose for parallel processing. They say Scala cuts down code by 2-5x, but in my experience from refactoring code in other languages - especially java mapreduce code, its more like 10-100x less code. Seriously I have refactored 100s of LOC from java into a handful of Scala / Spark. Its also much easier to read and reason about. Spark is even more concise and easy to use than the Hadoop abstraction tools like pig & hive, its even better than Scalding.

Spark has a repl / shell. The need for a compilation-deployment cycle in order to run simple jobs is eliminated. One can interactively play with data just like one uses Bash to poke around a system.

The last thing that comes to mind is ease of integration with Big Table DBs, like cassandra and hbase. In cass to read a table in order to do some analysis one just does

sc.cassandraTable[MyType](tableName).select(myCols).where(someCQL)

Similar things are expected for HBase. Now try doing that in any other MPP framework!!

UPDATE thought of pointing out this is just the advantages of Spark, there are quite a few useful things on top. E.g. GraphX for graph processing, MLLib for easy machine learning, Spark SQL for BI, BlinkDB for insane fast apprx queries, and as mentioned Spark Streaming