I have set up a simple linear regression problem in Tensorflow, and have created simple conda environments using Tensorflow CPU and GPU both in 1.13.1 (using CUDA 10.0 in the backend on an NVIDIA Quadro P600).

However, it looks like the GPU environment always takes longer time than the CPU environment. The code I'm running is below.

import time

import warnings

import numpy as np

import scipy

import tensorflow as tf

import tensorflow_probability as tfp

from tensorflow_probability import edward2 as ed

from tensorflow.python.ops import control_flow_ops

from tensorflow_probability import distributions as tfd

# Handy snippet to reset the global graph and global session.

def reset_g():

with warnings.catch_warnings():

warnings.simplefilter('ignore')

tf.reset_default_graph()

try:

sess.close()

except:

pass

N = 35000

inttest = np.ones(N).reshape(N, 1)

stddev_raw = 0.09

true_int = 1.

true_b1 = 0.15

true_b2 = 0.7

np.random.seed(69)

X1 = (np.atleast_2d(np.linspace(

0., 2., num=N)).T).astype(np.float64)

X2 = (np.atleast_2d(np.linspace(

2., 1., num=N)).T).astype(np.float64)

Ytest = true_int + (true_b1*X1) + (true_b2*X2) + \

np.random.normal(size=N, scale=stddev_raw).reshape(N, 1)

Ytest = Ytest.reshape(N, )

X1 = X1.reshape(N, )

X2 = X2.reshape(N, )

reset_g()

# Create data and param

model_X1 = tf.placeholder(dtype=tf.float64, shape=[N, ])

model_X2 = tf.placeholder(dtype=tf.float64, shape=[N, ])

model_Y = tf.placeholder(dtype=tf.float64, shape=[N, ])

alpha = tf.get_variable(shape=[1], name='alpha', dtype=tf.float64)

# these two params need shape of one if using trainable distro

beta1 = tf.get_variable(shape=[1], name='beta1', dtype=tf.float64)

beta2 = tf.get_variable(shape=[1], name='beta2', dtype=tf.float64)

# Yhat

tf_pred = (tf.multiply(model_X1, beta1) + tf.multiply(model_X2, beta2) + alpha)

# # Make difference of squares

# resid = tf.square(model_Y - tf_pred)

# loss = tf.reduce_sum(resid)

# # Make a Likelihood function based on simple stuff

stddev = tf.square(tf.get_variable(shape=[1],

name='stddev', dtype=tf.float64))

covar = tfd.Normal(loc=model_Y, scale=stddev)

loss = -1.0*tf.reduce_sum(covar.log_prob(tf_pred))

# Trainer

lr=0.005

N_ITER = 20000

opt = tf.train.AdamOptimizer(lr, beta1=0.95, beta2=0.95)

train = opt.minimize(loss)

with tf.Session() as sess:

sess.run(tf.global_variables_initializer())

start = time.time()

for step in range(N_ITER):

out_l, out_b1, out_b2, out_a, laws = sess.run([train, beta1, beta2, alpha, loss],

feed_dict={model_X1: X1,

model_X2: X2,

model_Y: Ytest})

if step % 500 == 0:

print('Step: {s}, loss = {l}, alpha = {a:.3f}, beta1 = {b1:.3f}, beta2 = {b2:.3f}'.format(

s=step, l=laws, a=out_a[0], b1=out_b1[0], b2=out_b2[0]))

print(f"True: alpha = {true_int}, beta1 = {true_b1}, beta2 = {true_b2}")

end = time.time()

print(end-start)

Here are some outputs printed if they're any indicative of what's happening:

For the CPU run:

Colocations handled automatically by placer.

2019-04-18 09:00:56.329669: I tensorflow/core/platform/cpu_feature_guard.cc:141] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2 FMA

2019-04-18 09:00:56.351151: I tensorflow/core/platform/profile_utils/cpu_utils.cc:94] CPU Frequency: 2904000000 Hz

2019-04-18 09:00:56.351672: I tensorflow/compiler/xla/service/service.cc:150] XLA service 0x558fefe604c0 executing computations on platform Host. Devices:

2019-04-18 09:00:56.351698: I tensorflow/compiler/xla/service/service.cc:158] StreamExecutor device (0): <undefined>, <undefined>

For the GPU run:

Instructions for updating:

Call initializer instance with the dtype argument instead of passing it to the constructor

W0418 09:03:21.674947 139956864096064 deprecation.py:506] From /home/sadatnfs/.conda/envs/tf_gpu/lib/python3.6/site-packages/tensorflow/python/training/slot_creator.py:187: calling Zeros.__init__ (from tensorflow.python.ops.init_ops) with dtype is deprecated and will be removed in a future version.

Instructions for updating:

Call initializer instance with the dtype argument instead of passing it to the constructor

2019-04-18 09:03:21.712913: I tensorflow/core/platform/cpu_feature_guard.cc:142] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2 FMA

2019-04-18 09:03:21.717598: I tensorflow/stream_executor/platform/default/dso_loader.cc:42] Successfully opened dynamic library libcuda.so.1

2019-04-18 09:03:21.951277: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:1009] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

2019-04-18 09:03:21.952212: I tensorflow/compiler/xla/service/service.cc:168] XLA service 0x55e583bc4480 executing computations on platform CUDA. Devices:

2019-04-18 09:03:21.952225: I tensorflow/compiler/xla/service/service.cc:175] StreamExecutor device (0): Quadro P600, Compute Capability 6.1

2019-04-18 09:03:21.971218: I tensorflow/core/platform/profile_utils/cpu_utils.cc:94] CPU Frequency: 2904000000 Hz

2019-04-18 09:03:21.971816: I tensorflow/compiler/xla/service/service.cc:168] XLA service 0x55e58577f290 executing computations on platform Host. Devices:

2019-04-18 09:03:21.971842: I tensorflow/compiler/xla/service/service.cc:175] StreamExecutor device (0): <undefined>, <undefined>

2019-04-18 09:03:21.972102: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1551] Found device 0 with properties:

name: Quadro P600 major: 6 minor: 1 memoryClockRate(GHz): 1.5565

pciBusID: 0000:01:00.0

totalMemory: 1.95GiB freeMemory: 1.91GiB

2019-04-18 09:03:21.972147: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1674] Adding visible gpu devices: 0

2019-04-18 09:03:21.972248: I tensorflow/stream_executor/platform/default/dso_loader.cc:42] Successfully opened dynamic library libcudart.so.10.0

2019-04-18 09:03:21.973094: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1082] Device interconnect StreamExecutor with strength 1 edge matrix:

2019-04-18 09:03:21.973105: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1088] 0

2019-04-18 09:03:21.973110: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1101] 0: N

2019-04-18 09:03:21.973279: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1222] Created TensorFlow device (/job:localhost/replica:0/task:0/device:GPU:0 with 1735 MB memory) -> physical GPU (device: 0, name: Quadro P600, pci bus id: 0000:01:00.0, compute capability: 6.1)

I am about to post another question about implementing CUBLAS in R as well because that was giving me slow speed times compared to Intel MKL, but I'm hoping that maybe there's a clear cut reason why even something as well built as TF (compared to hacky R and CUBLAS patching) is being slow with GPU.

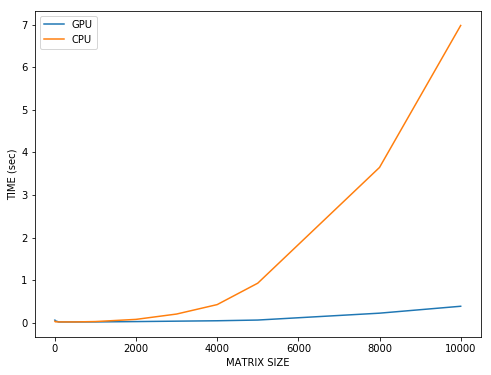

EDIT: Following Vlad's suggestion, I wrote up the following script to try and throw some large sized objects and training it, but I think I might not be setting it up correctly because the CPU one in this case even as the size of the matrices are increasing. Any suggestions perhaps?

import time

import warnings

import numpy as np

import scipy

import tensorflow as tf

import tensorflow_probability as tfp

from tensorflow_probability import edward2 as ed

from tensorflow.python.ops import control_flow_ops

from tensorflow_probability import distributions as tfd

np.random.seed(69)

# Handy snippet to reset the global graph and global session.

def reset_g():

with warnings.catch_warnings():

warnings.simplefilter('ignore')

tf.reset_default_graph()

try:

sess.close()

except:

pass

# Loop over the different number of feature columns

for x_feat in [30, 50, 100, 1000, 10000]:

y_feat=10;

# Simulate data

N = 5000

inttest = np.ones(N).reshape(N, 1)

stddev_raw = np.random.uniform(0.01, 0.25, size=y_feat)

true_int = np.linspace(0.1 ,1., num=y_feat)

xcols = x_feat

true_bw = np.random.randn(xcols, y_feat)

true_X = np.random.randn(N, xcols)

true_errorcov = np.eye(y_feat)

np.fill_diagonal(true_errorcov, stddev_raw)

true_Y = true_int + np.matmul(true_X, true_bw) + \

np.random.multivariate_normal(mean=np.array([0 for i in range(y_feat)]),

cov=true_errorcov,

size=N)

## Our model is:

## Y = a + b*X + error where, for N=5000 observations:

## Y : 10 outputs;

## X : 30,50,100,1000,10000 features

## a, b = bias and weights

## error: just... error

# Number of iterations

N_ITER = 1001

# Training rate

lr=0.005

with tf.device('gpu'):

# Create data and weights

model_X = tf.placeholder(dtype=tf.float64, shape=[N, xcols])

model_Y = tf.placeholder(dtype=tf.float64, shape=[N, y_feat])

alpha = tf.get_variable(shape=[y_feat], name='alpha', dtype=tf.float64)

# these two params need shape of one if using trainable distro

betas = tf.get_variable(shape=[xcols, y_feat], name='beta1', dtype=tf.float64)

# Yhat

tf_pred = alpha + tf.matmul(model_X, betas)

# Make difference of squares (loss fn) [CONVERGES TO TRUTH]

resid = tf.square(model_Y - tf_pred)

loss = tf.reduce_sum(resid)

# Trainer

opt = tf.train.AdamOptimizer(lr, beta1=0.95, beta2=0.95)

train = opt.minimize(loss)

sess = tf.Session()

sess.run(tf.global_variables_initializer())

start = time.time()

for step in range(N_ITER):

out_l, laws = sess.run([train, loss], feed_dict={model_X: true_X, model_Y: true_Y})

if step % 500 == 0:

print('Step: {s}, loss = {l}'.format(

s=step, l=laws))

end = time.time()

print("y_feat: {n}, x_feat: {x2}, Time elapsed: {te}".format(n = y_feat, x2 = x_feat, te = end-start))

reset_g()

(10000, 10000)x(10000x10000). You will see that GPU is much faster. – Steelmaker