I am working on a Java application for solving a class of numerical optimization problems - large-scale linear programming problems to be more precise. A single problem can be split up into smaller subproblems that can be solved in parallel. Since there are more subproblems than CPU cores, I use an ExecutorService and define each subproblem as a Callable that gets submitted to the ExecutorService. Solving a subproblem requires calling a native library - a linear programming solver in this case.
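To make the setup concrete, here is a minimal sketch of the structure described above. It is not the actual application code: the class name `SubproblemPoolSketch`, the method `solveAll`, and the pure-Java `solveSubproblem` placeholder are hypothetical names for illustration, and the placeholder merely stands in for the native solver call.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class SubproblemPoolSketch {

    // Hypothetical stand-in for the native LP solve. The real application
    // calls into a solver library here; this loop only burns a little CPU.
    static double solveSubproblem(int id) {
        double objective = 0.0;
        for (int i = 1; i <= 1_000; i++) {
            objective += Math.sqrt(id + i);
        }
        return objective;
    }

    // Submits one Callable per subproblem to a fixed-size pool and
    // collects the results in submission order.
    static List<Double> solveAll(int subproblemCount, int threads) throws Exception {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            List<Callable<Double>> tasks = new ArrayList<>();
            for (int id = 0; id < subproblemCount; id++) {
                final int subproblemId = id;
                tasks.add(() -> solveSubproblem(subproblemId));
            }
            List<Double> results = new ArrayList<>();
            for (Future<Double> future : pool.invokeAll(tasks)) {
                // get() rethrows a failed subproblem as an ExecutionException.
                results.add(future.get());
            }
            return results;
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        int threads = Runtime.getRuntime().availableProcessors();
        List<Double> results = solveAll(10_000, threads);
        System.out.println("Solved " + results.size() + " subproblems on " + threads + " threads");
    }
}
```

In the sketch, the pool size equals the number of available processors, and the number of tasks is far larger than the number of threads, which mirrors the situation in the question.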

Problem

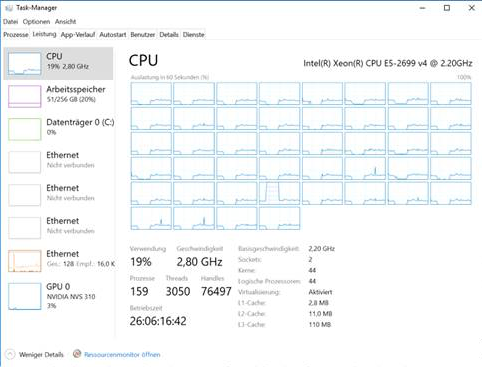

I can run the application on Unix and on Windows systems with up to 44 physical cores and up to 256g memory, but computation times on Windows are an order of magnitude higher than on Linux for large problems. Windows not only requires substantially more memory, but CPU utilization over time drops from 25% in the beginning to 5% after a few hours. Here is a screenshot of the task manager in Windows:

Observations

- Solution times for large instances of the overall problem range from hours to days and consume up to 32g of memory (on Unix). Solution times for a subproblem are in the ms range.

- I do not encounter this issue on small problems that take only a few minutes to solve.

- Linux uses both sockets out-of-the-box, whereas Windows requires me to explicitly activate memory interleaving in the BIOS so that the application utilizes both sockets. Whether or not I do this has no effect on the deterioration of overall CPU utilization over time, though.

- When I look at the threads in VisualVM, all pool threads are running; none are waiting or otherwise blocked.

- According to VisualVM, 90% of CPU time is spent on a native function call (solving a small linear program).

- Garbage Collection is not an issue since the application does not create and de-reference a lot of objects. Also, most memory seems to be allocated off-heap. 4g of heap are sufficient on Linux and 8g on Windows for the largest instance.

What I've tried

- all sorts of JVM args: a high -Xms, a large metaspace, the -XX:+UseNUMA flag, other GCs.

- different JVMs (Hotspot 8, 9, 10, 11).

- different native libraries of different linear programming solvers (CLP, Xpress, Cplex, Gurobi).

Questions

- What drives the performance difference between Linux and Windows of a large multi-threaded Java application that makes heavy use of native calls?

- Is there anything I can change in the implementation that would help on Windows? For example, should I avoid using an ExecutorService that receives thousands of Callables, and if so, what should I do instead?

Comments

- ForkJoinPool instead of ExecutorService? 25% CPU utilization is really low if your problem is CPU bound. – Foch

- ForkJoinPool is more efficient than manual scheduling. – Foch

- ForkJoinPool brings no relief. – Retrospection

- […] malloc, realloc). When your solver code makes use of malloc, realloc, etc., then in a long-running process the type of allocator may make a difference. It may also explain differences between operating systems due to memory fragmentation and cache hit rates. See en.wikipedia.org/wiki/C_dynamic_memory_allocation – Galatea

- CLPNative.java […] clpInitialSolve(Pointer<CLPSimplex>) – Retrospection

- […] BlockingQueue; 4. I only use the callable to catch exceptions when a subproblem fails and do not cancel them, but runnables behave the same; 5. No. Thanks :) – Retrospection