I am trying to deploy a trained U-Net with TensorRT. The model was trained using Keras (with TensorFlow as the backend). The model code is very similar to this one: https://github.com/zhixuhao/unet/blob/master/model.py

When I convert the model to UFF format with code like this:

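    import os

    import uff

    # idx, trt_fname and output_names are defined earlier in the script.
    uff_fname = os.path.join("./models/", "model_" + idx + ".uff")

    # Convert the frozen TensorFlow graph and write the result to uff_fname.
    uff_model = uff.from_tensorflow_frozen_model(
        frozen_file=os.path.join("./models", trt_fname),
        output_nodes=output_names,
        output_filename=uff_fname,
    )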

I get the following warnings:

    Warning: No conversion function registered for layer: ResizeNearestNeighbor yet.
    Converting up_sampling2d_32_12/ResizeNearestNeighbor as custom op: ResizeNearestNeighbor
    Warning: No conversion function registered for layer: DataFormatVecPermute yet.
    Converting up_sampling2d_32_12/Shape-0-0-VecPermuteNCHWToNHWC-LayoutOptimizer as custom op: DataFormatVecPermute

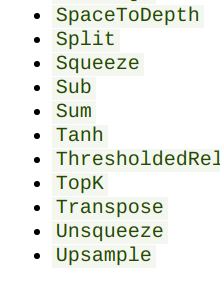
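To see at a glance which op types would need a replacement or a custom layer, the converter output can be summarized with a small script. This is only a sketch: it assumes the log lines always have the exact shape shown above, and `CUSTOM_OP` and `unsupported_ops` are names made up for this example, not part of the `uff` package.

```python
import re

# Log text copied from the converter warnings quoted in this post.
LOG = """\
Warning: No conversion function registered for layer: ResizeNearestNeighbor yet.
Converting up_sampling2d_32_12/ResizeNearestNeighbor as custom op: ResizeNearestNeighbor
Warning: No conversion function registered for layer: DataFormatVecPermute yet.
Converting up_sampling2d_32_12/Shape-0-0-VecPermuteNCHWToNHWC-LayoutOptimizer as custom op: DataFormatVecPermute
"""

# Assumed line format: "Converting <node name> as custom op: <op type>".
CUSTOM_OP = re.compile(
    r"^Converting (?P<node>\S+) as custom op: (?P<op>\S+)$", re.MULTILINE
)


def unsupported_ops(log_text):
    """Map each unsupported op type to the graph nodes that use it."""
    ops = {}
    for match in CUSTOM_OP.finditer(log_text):
        ops.setdefault(match.group("op"), []).append(match.group("node"))
    return ops


for op, nodes in unsupported_ops(LOG).items():
    print(op, nodes)
```

For the log above this reports two op types, `ResizeNearestNeighbor` and `DataFormatVecPermute`, each used by one node of the upsampling layer.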

I tried to avoid this by replacing the upsampling layer with bilinear-interpolation upsampling and with a transpose convolution, but the converter gave similar warnings for both. I checked https://docs.nvidia.com/deeplearning/sdk/tensorrt-support-matrix/index.html and it seems that none of these operations are supported yet.

Is there any workaround for this problem? Is there another format or framework that TensorRT accepts and that supports upsampling? Or is it possible to replace the upsampling with other, supported operations?

I also read somewhere that one can add custom operations to TensorRT to replace the unsupported ones, but I am not sure what the workflow would look like. It would be really helpful if someone could point me to an example of a custom layer.
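For reference, this is what I understand any replacement or custom layer would have to compute for the nearest-neighbor case with an integer scale factor, which is how I use the upsampling layer. It is a plain-Python sketch for a single 2D channel, not TensorRT code; `upsample_nearest` is a name made up for this example.

```python
def upsample_nearest(image, factor=2):
    """Nearest-neighbor upsampling of a 2D list by an integer factor.

    Every input pixel is repeated `factor` times along the width and
    `factor` times along the height.
    """
    out = []
    for row in image:
        # Repeat each pixel along the width.
        wide = [pixel for pixel in row for _ in range(factor)]
        # Repeat the widened row along the height (copy to avoid aliasing).
        out.extend(list(wide) for _ in range(factor))
    return out


small = [[1, 2],
         [3, 4]]
for row in upsample_nearest(small):
    print(row)
```

A 2x2 input therefore becomes a 4x4 output in which each value fills a 2x2 block.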

Thank you in advance!

ResizeNearestNeighbor layers, which seem to be custom layers. – Budworth