Definitions

KNN algorithm = K-nearest-neighbour classification algorithm

K-means = centroid-based clustering algorithm

DTW = Dynamic Time Warping a similarity-measurement algorithm for time-series

I show below step by step about how the two time-series can be built and how the Dynamic Time Warping (DTW) algorithm can be computed. You can build a unsupervised k-means clustering with scikit-learn without specifying the number of centroids, then the scikit-learn knows to use the algorithm called auto.

Building the time-series and computing the DTW

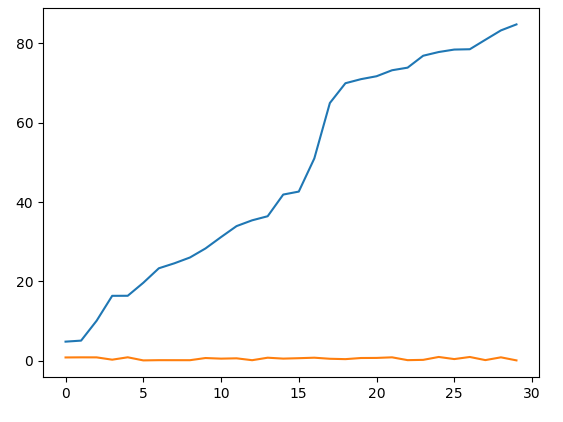

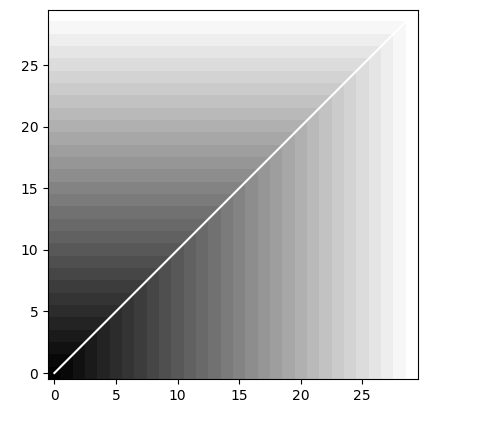

You have have two time-series and you compute the DTW such that

![enter image description here]()

![enter image description here]()

import pandas as pd

import numpy as np

import random

from dtw import dtw

from matplotlib.pyplot import plot

from matplotlib.pyplot import imshow

from matplotlib.pyplot import cm

from sklearn.cluster import KMeans

from sklearn.preprocessing import MultiLabelBinarizer

#About classification, read the tutorial

#http://scikit-learn.org/stable/tutorial/basic/tutorial.html

def createTs(myStart, myLength):

index = pd.date_range(myStart, periods=myLength, freq='H');

values= [random.random() for _ in range(myLength)];

series = pd.Series(values, index=index);

return(series)

#Time series of length 30, start from 1/1/2000 & 1/2/2000 so overlap

myStart='1/1/2000'

myLength=30

timeS1=createTs(myStart, myLength)

myStart='1/2/2000'

timeS2=createTs(myStart, myLength)

#This could be your dataframe but unnecessary here

#myDF = pd.DataFrame([x for x in timeS1.data], [x for x in timeS2.data])#, columns=['data1', 'data2'])

x=[xxx*100 for xxx in sorted(timeS1.data)]

y=[xx for xx in timeS2.data]

choice="dtw"

if (choice="timeseries"):

print(timeS1)

print(timeS2)

if (choice=="drawingPlots"):

plot(x)

plot(y)

if (choice=="dtw"):

#DTW with the 1st order norm

myDiff=[xx-yy for xx,yy in zip(x,y)]

dist, cost, acc, path = dtw(x, y, dist=lambda x, y: np.linalg.norm(myDiff, ord=1))

imshow(acc.T, origin='lower', cmap=cm.gray, interpolation='nearest')

plot(path[0], path[1], 'w')

Classification of the time-series with KNN

It is not evident in the question about what should be labelled and with which labels? So please provide the following details

- What should we label in the data-frame? The path computed by DTW algorithm?

- Which type of labeling? Binary? Multiclass?

after which we can decide our classification algorithm that may be the so-called KNN algorithm. It works such that you have two separate data sets: training set and test set. By training set, you teach the algorithm to label the time series while the test set is a tool by which we can measure about how well the model works with model selection tools such as AUC.

Small puzzle left open until details provided about the questions

#PUZZLE

#from tutorial (#http://scikit-learn.org/stable/tutorial/basic/tutorial.html)

newX = [[1, 2], [2, 4], [4, 5], [3, 2], [3, 1]]

newY = [[0, 1], [0, 2], [1, 3], [0, 2, 3], [2, 4]]

newY = MultiLabelBinarizer().fit_transform(newY)

#Continue to the article.

Scikit-learn comparison article about classifiers is provided in the second enumerate item below.

Clustering with K-means (not the same as KNN)

K-means is the clustering algorithm and its unsupervised version you can use such that

#Unsupervised version "auto" of the KMeans as no assignment for the n_clusters

myClusters=KMeans(path)

#myClusters.fit(YourDataHere)

which is very different algorithm than the KNN algorithm: here we do not need any labels. I provide you further material on the topic below in the first enumerate item.

Further reading

Does K-means incorporate the K-nearest-neighbour algorithm?

Comparison about classifiers in scikit learn here