I'm seeing unexpected behavior with models I've converted to Core ML from Keras, TensorFlow, PyTorch, etc.

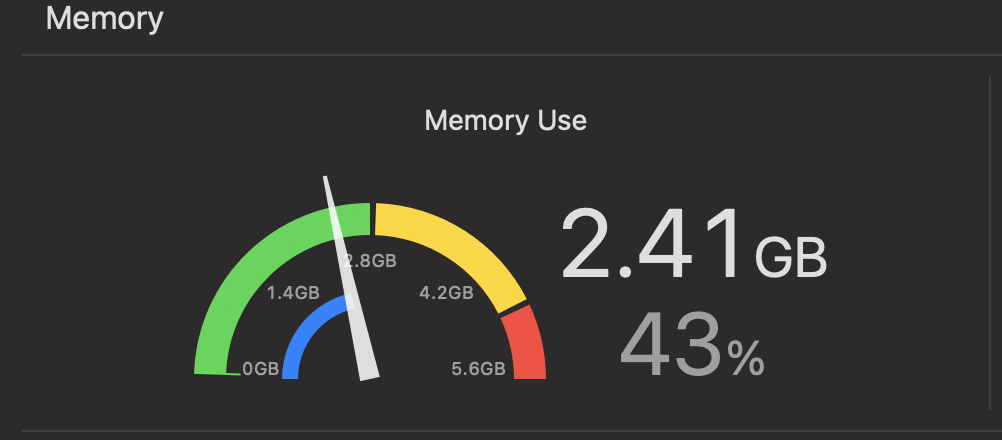

Just initializing an MLModel, without running a prediction or doing anything else, makes the app's memory spike to 2-3 GB for some models.

    self.myModel = MyModel(model: mlModel)

This happens even for a model that is smaller than 1 MB.

Also, when I play with the MLModelConfiguration, changing computeUnits seems to change the memory usage a bit, and .cpuOnly usually requires the least memory.
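For reference, this is roughly how I compare the options. It is only a sketch: `memoryFootprint()`, `loadModel(at:computeUnits:)` and `compareLoadMemory(compiledModelURL:)` are helper names I made up for this post, the URL is assumed to point at a compiled `.mlmodelc`, and the deltas are approximate because memory from an earlier load may not have been released yet.

```swift
import CoreML
import Foundation

/// Physical memory footprint of the current process in bytes (nil if the query fails).
/// Hypothetical helper, built on the Mach `task_info` call with the TASK_VM_INFO flavor.
func memoryFootprint() -> UInt64? {
    var info = task_vm_info_data_t()
    var count = mach_msg_type_number_t(
        MemoryLayout<task_vm_info_data_t>.size / MemoryLayout<integer_t>.size)
    let result = withUnsafeMutablePointer(to: &info) { pointer in
        pointer.withMemoryRebound(to: integer_t.self, capacity: Int(count)) {
            task_info(mach_task_self_, task_flavor_t(TASK_VM_INFO), $0, &count)
        }
    }
    return result == KERN_SUCCESS ? info.phys_footprint : nil
}

/// Loads a compiled model with the given compute units (hypothetical helper).
func loadModel(at compiledModelURL: URL, computeUnits: MLComputeUnits) throws -> MLModel {
    let configuration = MLModelConfiguration()
    configuration.computeUnits = computeUnits
    return try MLModel(contentsOf: compiledModelURL, configuration: configuration)
}

/// Prints the approximate footprint change caused by loading the model
/// with each compute-unit setting. No prediction is run.
func compareLoadMemory(compiledModelURL: URL) {
    let options: [(String, MLComputeUnits)] = [
        ("cpuOnly", .cpuOnly),
        ("cpuAndGPU", .cpuAndGPU),
        ("all", .all),
    ]
    for (name, units) in options {
        let before = memoryFootprint() ?? 0
        do {
            let model = try loadModel(at: compiledModelURL, computeUnits: units)
            let after = memoryFootprint() ?? 0
            let deltaMB = (Double(after) - Double(before)) / 1_048_576
            print("\(name): footprint changed by \(deltaMB) MB after init")
            _ = model // keep the model alive until after the measurement
        } catch {
            print("\(name): failed to load model: \(error)")
        }
    }
}
```

The loaded `MLModel` can then be passed to the generated wrapper the same way as above, e.g. `MyModel(model: mlModel)`.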

But for the love of god, I have no idea why this is happening. Is it something in the way I convert them? Is there a specific layer that can cause it? Does anyone have a lead on how to tackle this?

This is the model we've converted: https://github.com/HasnainRaz/Fast-SRGAN