I have two questions about reading and writing Python objects from/to Azure Blob storage.

Can someone tell me how to write a Python dataframe as a CSV file directly into Azure Blob storage without storing it locally?

I tried using the functions

create_blob_from_textandcreate_blob_from_streambut none of them works.Converting dataframe to string and using

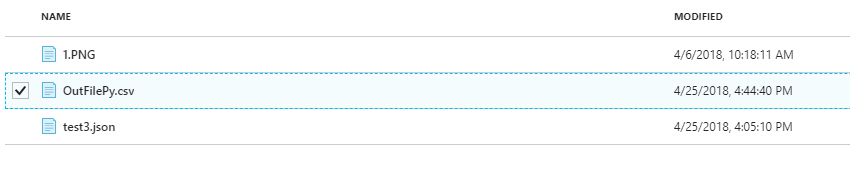

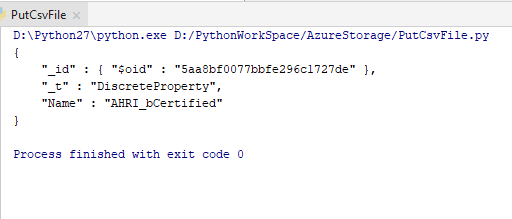

create_blob_from_textfunction writes the file into the blob but as a plain string but not as csv.df_b = df.to_string() block_blob_service.create_blob_from_text('test', 'OutFilePy.csv', df_b)How to directly read a json file in Azure blob storage directly into Python?
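For reference, here is a minimal sketch of what I think both operations could look like. It assumes `block_blob_service` is the legacy `BlockBlobService` client already used above and that `df` is a pandas DataFrame. The helper names `upload_dataframe_as_csv` and `read_json_blob` are hypothetical, made up for illustration. The idea is that `df.to_csv()` called without a path returns the CSV as a string (whereas `to_string()` returns a fixed-width text table), and that `get_blob_to_text` returns a blob object whose `content` can be handed to `json.loads`.

```python
import json


def upload_dataframe_as_csv(block_blob_service, container, blob_name, df):
    """Serialize df to CSV in memory and upload it; nothing is written locally."""
    # Assumption: df is a pandas DataFrame. to_csv() with no path argument
    # returns the CSV text as a str instead of writing a file.
    csv_text = df.to_csv(index=False)
    # Assumption: block_blob_service is the legacy BlockBlobService client.
    block_blob_service.create_blob_from_text(container, blob_name, csv_text)


def read_json_blob(block_blob_service, container, blob_name):
    """Download a blob as text and parse it as JSON."""
    # get_blob_to_text returns a blob object; its text is in .content.
    blob = block_blob_service.get_blob_to_text(container, blob_name)
    return json.loads(blob.content)
```

Usage would then be something like `upload_dataframe_as_csv(block_blob_service, 'test', 'OutFilePy.csv', df)` and `read_json_blob(block_blob_service, 'test', 'data.json')`, where `data.json` is a placeholder blob name. I have not verified this against newer versions of the Azure SDK, which use a different client API.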