How can I best write a query that selects 10 rows randomly from a total of 600k?

A great post handling several cases, from simple, to gaps, to non-uniform with gaps.

http://jan.kneschke.de/projects/mysql/order-by-rand/

For the most general case, here is how you do it:

SELECT name

FROM random AS r1 JOIN

(SELECT CEIL(RAND() *

(SELECT MAX(id)

FROM random)) AS id)

AS r2

WHERE r1.id >= r2.id

ORDER BY r1.id ASC

LIMIT 1

This assumes that the ids are roughly uniformly distributed. Gaps in the id list are allowed, but a row that follows a large gap is picked more often. See the article for more advanced examples.
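To see the effect of gaps, here is a minimal Python sketch of the same idea, run against an in-memory SQLite database as a stand-in for MySQL. The table name `items`, its columns, and the helper `random_row_by_id` are made up for illustration, and the threshold is drawn in Python rather than with `RAND()`.

```python
import random
import sqlite3


def random_row_by_id(conn, rng=random):
    """Return the first row whose id is >= a random threshold in 1..MAX(id)."""
    (max_id,) = conn.execute("SELECT MAX(id) FROM items").fetchone()
    if max_id is None:
        return None  # empty table
    # Roughly what CEIL(RAND() * MAX(id)) does in the query above.
    threshold = rng.randint(1, max_id)
    return conn.execute(
        "SELECT id, name FROM items WHERE id >= ? ORDER BY id ASC LIMIT 1",
        (threshold,),
    ).fetchone()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE items (id INTEGER PRIMARY KEY, name TEXT)")
# ids 1..5 and 9..10: thresholds 6, 7, 8 and 9 all land on id 9.
conn.executemany(
    "INSERT INTO items (id, name) VALUES (?, ?)",
    [(i, f"row{i}") for i in (1, 2, 3, 4, 5, 9, 10)],
)
print(random_row_by_id(conn))
```

With this data, id 9 is returned for four of the ten possible thresholds, which is the non-uniformity that the article's more advanced variants address.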

mysqli_fetch_assoc($result) ? Or are those 10 results not necessarily distinguishable? – Peres

Incorrect usage of UPDATE and ORDER BY – Bagasse

FLUSH STATUS; SELECT ... ; SHOW SESSION STATUS LIKE 'Handler%';. If you see numbers like the row count of the table, not good. If you see only numbers like the row count of the resultset, good. – Steiger

WHERE r1.id >= r2.id AND r1.id NOT IN(6,43,21,35,77) AND status_code IN(1,2,3,4,5) (Consider that there is a column called status_code). This may or may not give an empty result. – Wortham

SELECT column FROM table

ORDER BY RAND()

LIMIT 10

Not the most efficient solution, but it works.

ORDER BY RAND() is relatively slow – Mcgowen

SELECT words, transcription, translation, sound FROM vocabulary WHERE menu_id=$menuId ORDER BY RAND() LIMIT 10 takes 0.0010, without LIMIT 10 it took 0.0012 (in that table 3500 words). – Seagraves

Simple query that has excellent performance and works with gaps:

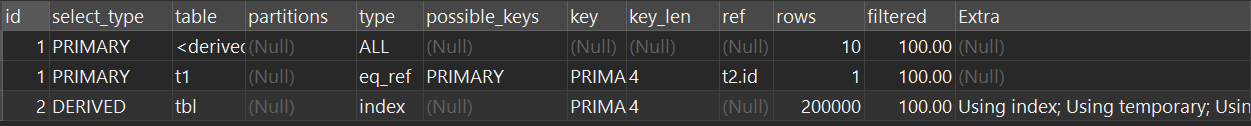

SELECT * FROM tbl AS t1 JOIN (SELECT id FROM tbl ORDER BY RAND() LIMIT 10) as t2 ON t1.id=t2.id

This query on a 200K table takes 0.08s and the normal version (SELECT * FROM tbl ORDER BY RAND() LIMIT 10) takes 0.35s on my machine.

This is fast because the sort phase only uses the indexed ID column. You can see this behaviour in the explain:

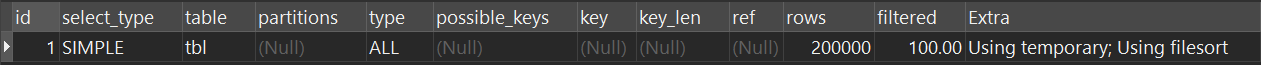

SELECT * FROM tbl ORDER BY RAND() LIMIT 10:

SELECT * FROM tbl AS t1 JOIN (SELECT id FROM tbl ORDER BY RAND() LIMIT 10) as t2 ON t1.id=t2.id
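For anyone who wants to try the pattern without a MySQL server, here is a small Python sketch using an in-memory SQLite database. SQLite's `RANDOM()` plays the role of `RAND()`; the `payload` column and the helper name `random_rows_via_id_sort` are assumptions for the example, and the timings quoted above will not carry over.

```python
import sqlite3


def random_rows_via_id_sort(conn, n):
    """Shuffle only the id column, then join back to fetch the full rows."""
    return conn.execute(
        """
        SELECT t1.*
        FROM tbl AS t1
        JOIN (SELECT id FROM tbl ORDER BY RANDOM() LIMIT ?) AS t2
          ON t1.id = t2.id
        """,
        (n,),
    ).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE tbl (id INTEGER PRIMARY KEY, payload TEXT)")
# Wide-ish rows, so that sorting ids alone touches far less data.
conn.executemany(
    "INSERT INTO tbl (id, payload) VALUES (?, ?)",
    [(i, "x" * 100) for i in range(1, 1001)],
)
rows = random_rows_via_id_sort(conn, 10)
print([row[0] for row in rows])
```

The inner query still has to sort every id, so this reduces the cost per row rather than the number of rows examined.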

Weighted Version: https://mcmap.net/q/66434/-mysql-select-random-entry-but-weight-towards-certain-entries

I am getting fast queries (around 0.5 seconds) on a slow CPU, selecting 10 random rows from a non-cached MySQL table with 400K rows and 2 GB in size. Here is my code (see: Fast selection of random rows in MySQL):

$time= microtime_float();

$sql='SELECT COUNT(*) FROM pages';

$rquery= BD_Ejecutar($sql);

list($num_records)=mysql_fetch_row($rquery);

mysql_free_result($rquery);

$sql="SELECT id FROM pages WHERE RAND()*$num_records<20

ORDER BY RAND() LIMIT 0,10";

$rquery= BD_Ejecutar($sql);

while(list($id)=mysql_fetch_row($rquery)){

if($id_in) $id_in.=",$id";

else $id_in="$id";

}

mysql_free_result($rquery);

$sql="SELECT id,url FROM pages WHERE id IN($id_in)";

$rquery= BD_Ejecutar($sql);

while(list($id,$url)=mysql_fetch_row($rquery)){

logger("$id, $url",1);

}

mysql_free_result($rquery);

$time= microtime_float()-$time;

logger("num_records=$num_records",1);

logger("$id_in",1);

logger("Time elapsed: <b>$time seconds</b>",1);

ORDER BY RAND() – Erskine

FLUSH STATUS; SELECT ...; SHOW SESSION STATUS LIKE 'Handler%'; to see it. – Steiger

ORDER BY RAND() is that it sorts only the ids (not full rows), so temp table is smaller, but still has to sort all of them. – Ryals

microtime_float() with microtime(true) – Wangle

From the book:

Choose a Random Row Using an Offset

Still another technique that avoids problems found in the preceding alternatives is to count the rows in the data set and return a random number between 0 and the count. Then use this number as an offset when querying the data set.

$rand = "SELECT ROUND(RAND() * (SELECT COUNT(*) FROM Bugs))";

$offset = $pdo->query($rand)->fetch(PDO::FETCH_ASSOC);

$sql = "SELECT * FROM Bugs LIMIT 1 OFFSET :offset";

$stmt = $pdo->prepare($sql);

$stmt->execute( $offset );

$rand_bug = $stmt->fetch();

Use this solution when you can’t assume contiguous key values and you need to make sure each row has an even chance of being selected.
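As a rough illustration of the offset technique, here is a Python sketch against an in-memory SQLite table (a stand-in for MySQL). The column names `bug_id` and `summary` and the helper `random_row_by_offset` are hypothetical. Note that `ROUND(RAND() * COUNT(*))` can occasionally equal the row count, in which case the `OFFSET` query returns nothing, so the sketch draws the offset from 0 to count - 1 instead.

```python
import random
import sqlite3


def random_row_by_offset(conn, rng=random):
    """Return one uniformly chosen row, or None if the table is empty."""
    (count,) = conn.execute("SELECT COUNT(*) FROM Bugs").fetchone()
    if count == 0:
        return None
    offset = rng.randrange(count)  # 0 .. count - 1, never equal to count
    return conn.execute(
        "SELECT bug_id, summary FROM Bugs ORDER BY bug_id LIMIT 1 OFFSET ?",
        (offset,),
    ).fetchone()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Bugs (bug_id INTEGER PRIMARY KEY, summary TEXT)")
# Non-contiguous keys on purpose: the offset ignores the gaps.
conn.executemany(
    "INSERT INTO Bugs (bug_id, summary) VALUES (?, ?)",
    [(i, f"bug {i}") for i in (3, 8, 15, 16, 42)],
)
print(random_row_by_offset(conn))
```

Each row has the same chance of being picked regardless of gaps, at the price of a count plus a scan up to the offset.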

SELECT count(*) becomes slow. – Darcidarcia

OFFSET must step over that many rows. So this 'solution' costs an average of 1.5*N where N is the number of rows in the table. – Steiger

It's a very simple, single-line query.

SELECT * FROM Table_Name ORDER BY RAND() LIMIT 0,10;

order by rand() is very slow if the table is large – Headsail

Well, if you have no gaps in your keys and they are all numeric, you can calculate random numbers and select those rows. But this will probably not be the case.

So one solution would be the following:

SELECT t.* FROM table AS t JOIN (SELECT FLOOR(RAND() * (SELECT MAX(id) FROM table)) AS rid) AS r ON t.id >= r.rid ORDER BY t.id LIMIT 1

This basically ensures that you get a random number in the range of your keys, and then you select the next best key that is greater or equal. You have to do this 10 times.

However, this is NOT really random, because your keys will most likely not be distributed evenly.

It's really a big problem and not easy to solve fulfilling all the requirements, MySQL's rand() is the best you can get if you really want 10 random rows.

There is however another solution which is fast but also has a trade off when it comes to randomness, but may suit you better. Read about it here: How can i optimize MySQL's ORDER BY RAND() function?

The question is how random you need it to be.

Can you explain a bit more so I can give you a good solution?

For example a company I worked with had a solution where they needed absolute randomness extremely fast. They ended up with pre-populating the database with random values that were selected descending and set to different random values afterwards again.

If you hardly ever update you could also fill an incrementing id so you have no gaps and just can calculate random keys before selecting... It depends on the use case!

Id and all your random queries will return you that one Id. – Griseofulvin

FLOOR(RAND()*MAX(id)) is biased toward returning larger ids. – Steiger

How to select random rows from a table:

From here: Select random rows in MySQL

A quick improvement over "table scan" is to use the index to pick up random ids.

SELECT *

FROM random, (

SELECT id AS sid

FROM random

ORDER BY RAND( )

LIMIT 10

) tmp

WHERE random.id = tmp.sid;

PRIMARY KEY). – Steiger

All the best answers have been already posted (mainly those referencing the link http://jan.kneschke.de/projects/mysql/order-by-rand/).

I want to point out another speed-up possibility: caching. Think of why you need to get random rows. Probably you want to display some random post or random ad on a website. If you are getting 100 req/s, is it really necessary that each visitor gets different random rows? Usually it is completely fine to cache these X random rows for 1 second (or even 10 seconds). It doesn't matter if 100 unique visitors in the same second get the same random posts, because the next second another 100 visitors will get a different set of posts.

With this caching you can also use one of the slower solutions for getting the random data, as it will be fetched from MySQL only once per second regardless of your req/s.
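A minimal sketch of such a cache in Python, assuming a single process and a `fetch_rows` callable that runs whichever random-row query you prefer. The class name `RandomRowsCache` and its parameters are made up for this example; a real site would more likely use memcached or similar.

```python
import time


class RandomRowsCache:
    """Serve the same batch of random rows until it is older than ttl seconds."""

    def __init__(self, fetch_rows, ttl=1.0, clock=time.monotonic):
        self._fetch_rows = fetch_rows  # callable running the (possibly slow) query
        self._ttl = ttl
        self._clock = clock
        self._rows = None
        self._fetched_at = None

    def get(self):
        now = self._clock()
        # Refresh on first use or once the cached batch has expired.
        if self._fetched_at is None or now - self._fetched_at >= self._ttl:
            self._rows = self._fetch_rows()
            self._fetched_at = now
        return self._rows


query_count = 0


def slow_random_query():
    """Placeholder for e.g. an ORDER BY RAND() LIMIT 10 query."""
    global query_count
    query_count += 1
    return [f"row from query {query_count}"]


cache = RandomRowsCache(slow_random_query, ttl=1.0)
for _ in range(100):
    cache.get()  # 100 requests in well under a second
print(query_count)
```

All requests inside the same TTL window share one database hit, so the cost of the query matters much less.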

I improved the answer @Riedsio had. This is the most efficient query I can find on a large, uniformly distributed table with gaps (tested on getting 1000 random rows from a table that has > 2.6B rows).

(SELECT id FROM table INNER JOIN (SELECT FLOOR(RAND() * @max := (SELECT MAX(id) FROM table)) + 1 as rand) r on id > rand LIMIT 1) UNION

(SELECT id FROM table INNER JOIN (SELECT FLOOR(RAND() * @max) + 1 as rand) r on id > rand LIMIT 1) UNION

(SELECT id FROM table INNER JOIN (SELECT FLOOR(RAND() * @max) + 1 as rand) r on id > rand LIMIT 1) UNION

(SELECT id FROM table INNER JOIN (SELECT FLOOR(RAND() * @max) + 1 as rand) r on id > rand LIMIT 1) UNION

(SELECT id FROM table INNER JOIN (SELECT FLOOR(RAND() * @max) + 1 as rand) r on id > rand LIMIT 1) UNION

(SELECT id FROM table INNER JOIN (SELECT FLOOR(RAND() * @max) + 1 as rand) r on id > rand LIMIT 1) UNION

(SELECT id FROM table INNER JOIN (SELECT FLOOR(RAND() * @max) + 1 as rand) r on id > rand LIMIT 1) UNION

(SELECT id FROM table INNER JOIN (SELECT FLOOR(RAND() * @max) + 1 as rand) r on id > rand LIMIT 1) UNION

(SELECT id FROM table INNER JOIN (SELECT FLOOR(RAND() * @max) + 1 as rand) r on id > rand LIMIT 1) UNION

(SELECT id FROM table INNER JOIN (SELECT FLOOR(RAND() * @max) + 1 as rand) r on id > rand LIMIT 1)

Let me unpack what's going on.

- @max := (SELECT MAX(id) FROM table): I'm calculating and saving the max. For very large tables, there is a slight overhead for calculating MAX(id) each time you need a row.
- SELECT FLOOR(RAND() * @max) + 1 AS rand: Gets a random id.
- SELECT id FROM table INNER JOIN (...) ON id > rand LIMIT 1: This fills in the gaps. Basically if you randomly select a number in the gaps, it will just pick the next id. Assuming the gaps are uniformly distributed, this shouldn't be a problem.

Doing the union helps you fit everything into 1 query so you can avoid doing multiple queries. It also lets you save the overhead of calculating MAX(id). Depending on your application, this might matter a lot or very little.

Note that this gets only the ids and gets them in random order. If you want to do anything more advanced I recommend you do this:

SELECT t.id, t.name -- etc, etc

FROM table t

INNER JOIN (

(SELECT id FROM table INNER JOIN (SELECT FLOOR(RAND() * @max := (SELECT MAX(id) FROM table)) + 1 as rand) r on id > rand LIMIT 1) UNION

(SELECT id FROM table INNER JOIN (SELECT FLOOR(RAND() * @max) + 1 as rand) r on id > rand LIMIT 1) UNION

(SELECT id FROM table INNER JOIN (SELECT FLOOR(RAND() * @max) + 1 as rand) r on id > rand LIMIT 1) UNION

(SELECT id FROM table INNER JOIN (SELECT FLOOR(RAND() * @max) + 1 as rand) r on id > rand LIMIT 1) UNION

(SELECT id FROM table INNER JOIN (SELECT FLOOR(RAND() * @max) + 1 as rand) r on id > rand LIMIT 1) UNION

(SELECT id FROM table INNER JOIN (SELECT FLOOR(RAND() * @max) + 1 as rand) r on id > rand LIMIT 1) UNION

(SELECT id FROM table INNER JOIN (SELECT FLOOR(RAND() * @max) + 1 as rand) r on id > rand LIMIT 1) UNION

(SELECT id FROM table INNER JOIN (SELECT FLOOR(RAND() * @max) + 1 as rand) r on id > rand LIMIT 1) UNION

(SELECT id FROM table INNER JOIN (SELECT FLOOR(RAND() * @max) + 1 as rand) r on id > rand LIMIT 1) UNION

(SELECT id FROM table INNER JOIN (SELECT FLOOR(RAND() * @max) + 1 as rand) r on id > rand LIMIT 1)

) x ON x.id = t.id

ORDER BY t.id

LIMIT 1 to LIMIT 30 everywhere in query – Rakehell

LIMIT 1 to LIMIT 30 would get you 30 records in a row from a random point in the table. You should instead have 30 copies of the (SELECT id FROM .... part in the middle. – Darcidarcia

Riedsio answer . I have tried with 500 per second hits to the page using PHP 7.0.22 and MariaDB on CentOS 7; with Riedsio's answer I got 500+ more successful responses than with your answer. – Rakehell

I've looked through all of the answers, and I don't think anyone mentions this possibility at all, and I'm not sure why.

If you want utmost simplicity and speed, at a minor cost, then to me it seems to make sense to store a random number against each row in the DB. Just create an extra column, random_number, and set its default to RAND(). Create an index on this column.

Then when you want to retrieve a row generate a random number in your code (PHP, Perl, whatever) and compare that to the column.

SELECT * FROM tbl WHERE random_number >= :random ORDER BY random_number LIMIT 1

Although it's very neat for a single row, for ten rows like the OP asked you'd have to call it ten separate times (or come up with a clever tweak that escapes me at the moment).
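Here is a small Python sketch of the stored-random-number idea, using an in-memory SQLite table as a stand-in. The helper `pick_by_random_column`, the index name, and the wrap-around fallback (used when the drawn number is above every stored value) are my own assumptions, not something the approach above spells out.

```python
import random
import sqlite3


def pick_by_random_column(conn, rng=random):
    """Return the row with the smallest random_number >= a fresh random draw."""
    r = rng.random()
    row = conn.execute(
        "SELECT id, random_number FROM tbl "
        "WHERE random_number >= ? ORDER BY random_number LIMIT 1",
        (r,),
    ).fetchone()
    if row is None:
        # The draw was above every stored value: wrap around to the smallest.
        row = conn.execute(
            "SELECT id, random_number FROM tbl ORDER BY random_number LIMIT 1"
        ).fetchone()
    return row


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE tbl (id INTEGER PRIMARY KEY, random_number REAL NOT NULL)")
conn.execute("CREATE INDEX idx_tbl_random_number ON tbl (random_number)")
seed_rng = random.Random(42)  # fixed seed only so the demo data is repeatable
conn.executemany(
    "INSERT INTO tbl (id, random_number) VALUES (?, ?)",
    [(i, seed_rng.random()) for i in range(1, 101)],
)
print(pick_by_random_column(conn))
```

Because the stored numbers are not evenly spaced, rows that follow a wide gap in `random_number` are picked more often, so refreshing the column from time to time may be worthwhile.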

I know it is not what you want, but the answer I will give you is what I use in production in a small website.

Depending on how often you need a random value, it may not be worth querying MySQL each time, because you won't be able to cache the answer. We have a button there to access a random page, and a user could click it several times per minute if he wants. This causes a massive amount of MySQL usage and, at least for me, MySQL is the hardest part to optimize.

I would take another approach, where you can store the answer in a cache. Do one call to your MySQL:

SELECT min(id) as min, max(id) as max FROM your_table

With your min and max id, you can calculate a random number on your server. In Python:

random.randint(min, max)

Then, with your random number, you can get a random Id in your Table:

SELECT *

FROM your_table

WHERE id >= %s

ORDER BY id ASC

LIMIT 1

In this method you do two calls to your Database, but you can cache them and don't access the Database for a long period of time, enhancing performance. Note that this is not random if you have holes in your table. Having more than 1 row is easy since you can create the Id using python and do one request for each row, but since they are cached, it's ok.

If you have too many holes in your table, you can try the same approach, but now going for the total number of records:

SELECT COUNT(*) as total FROM your_table

Then in Python you go:

random.randint(0, total - 1)

And to fetch a random result you use LIMIT like below:

SELECT *

FROM your_table

ORDER BY id ASC

LIMIT %s, 1

Notice it will get 1 value after X random rows. Even if you have holes in your table, it will be completely random, but it will cost more for your database.

I used this http://jan.kneschke.de/projects/mysql/order-by-rand/ posted by Riedsio (i used the case of a stored procedure that returns one or more random values):

DROP TEMPORARY TABLE IF EXISTS rands;

CREATE TEMPORARY TABLE rands ( rand_id INT );

loop_me: LOOP

IF cnt < 1 THEN

LEAVE loop_me;

END IF;

INSERT INTO rands

SELECT r1.id

FROM random AS r1 JOIN

(SELECT (RAND() *

(SELECT MAX(id)

FROM random)) AS id)

AS r2

WHERE r1.id >= r2.id

ORDER BY r1.id ASC

LIMIT 1;

SET cnt = cnt - 1;

END LOOP loop_me;

In the article he solves the problem of gaps in ids causing not so random results by maintaining a table (using triggers, etc...see the article); I'm solving the problem by adding another column to the table, populated with contiguous numbers, starting from 1 (edit: this column is added to the temporary table created by the subquery at runtime, doesn't affect your permanent table):

DROP TEMPORARY TABLE IF EXISTS rands;

CREATE TEMPORARY TABLE rands ( rand_id INT );

loop_me: LOOP

IF cnt < 1 THEN

LEAVE loop_me;

END IF;

SET @no_gaps_id := 0;

INSERT INTO rands

SELECT r1.id

FROM (SELECT id, @no_gaps_id := @no_gaps_id + 1 AS no_gaps_id FROM random) AS r1 JOIN

(SELECT (RAND() *

(SELECT COUNT(*)

FROM random)) AS id)

AS r2

WHERE r1.no_gaps_id >= r2.id

ORDER BY r1.no_gaps_id ASC

LIMIT 1;

SET cnt = cnt - 1;

END LOOP loop_me;

In the article I can see he went to great lengths to optimize the code; I have no idea if or how much my changes impact performance, but it works very well for me.

@no_gaps_id no index can be used, so if you look at EXPLAIN for your query, you have Using filesort and Using where (without index) for the subqueries, in contrast to the original query. – Comedo

I needed a query to return a large number of random rows from a rather large table. This is what I came up with. First get the maximum record id:

SELECT MAX(id) FROM table_name;

Then substitute that value into:

SELECT * FROM table_name WHERE id > FLOOR(RAND() * max) LIMIT n;

Where max is the maximum record id in the table and n is the number of rows you want in your result set. The assumption is that there are no gaps in the record id's although I doubt it would affect the result if there were (haven't tried it though). I also created this stored procedure to be more generic; pass in the table name and number of rows to be returned. I'm running MySQL 5.5.38 on Windows 2008, 32GB, dual 3GHz E5450, and on a table with 17,361,264 rows it's fairly consistent at ~.03 sec / ~11 sec to return 1,000,000 rows. (times are from MySQL Workbench 6.1; you could also use CEIL instead of FLOOR in the 2nd select statement depending on your preference)

DELIMITER $$

USE [schema name] $$

DROP PROCEDURE IF EXISTS `random_rows` $$

CREATE PROCEDURE `random_rows`(IN tab_name VARCHAR(64), IN num_rows INT)

BEGIN

SET @t = CONCAT('SET @max=(SELECT MAX(id) FROM ',tab_name,')');

PREPARE stmt FROM @t;

EXECUTE stmt;

DEALLOCATE PREPARE stmt;

SET @t = CONCAT(

'SELECT * FROM ',

tab_name,

' WHERE id>FLOOR(RAND()*@max) LIMIT ',

num_rows);

PREPARE stmt FROM @t;

EXECUTE stmt;

DEALLOCATE PREPARE stmt;

END

$$

then

CALL [schema name].random_rows([table name], n);

Here is a game changer that may be helpful to many:

I have a table with 200k rows and sequential ids. I needed to pick N random rows, so I opted to generate random values based on the biggest id in the table. I created this script to find out which operation is the fastest:

logTime();

query("SELECT COUNT(id) FROM tbl");

logTime();

query("SELECT MAX(id) FROM tbl");

logTime();

query("SELECT id FROM tbl ORDER BY id DESC LIMIT 1");

logTime();

The results are:

- Count: 36.8418693542479ms
- Max: 0.241041183472ms
- Order: 0.216960906982ms

Based on these results, ORDER BY id DESC is the fastest operation to get the max id.

Here is my answer to the question:

SELECT GROUP_CONCAT(n SEPARATOR ',') g FROM (

SELECT FLOOR(RAND() * (

SELECT id FROM tbl ORDER BY id DESC LIMIT 1

)) n FROM tbl LIMIT 10) a

...

SELECT * FROM tbl WHERE id IN ($result);

FYI: To get 10 random rows from a 200k table, it took me 1.78 ms (including all the operations in the php side)
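Since the ids above are drawn independently, the same id can come up twice and the IN list then yields fewer than 10 rows. One possible way around that, assuming sequential ids without gaps as stated, is to draw distinct ids on the application side. The sketch below is in Python rather than PHP, and `distinct_random_ids` is a hypothetical helper.

```python
import random


def distinct_random_ids(max_id, n, rng=random):
    """Draw n different ids from 1..max_id (assumes sequential ids, no gaps)."""
    return rng.sample(range(1, max_id + 1), min(n, max_id))


ids = distinct_random_ids(200_000, 10)
# One placeholder per id, to be bound by whatever database driver is in use.
placeholders = ",".join("?" for _ in ids)
sql = f"SELECT * FROM tbl WHERE id IN ({placeholders})"
print(sql)
print(ids)
```

`random.sample` never repeats a value, so the query returns exactly as many rows as ids, provided every id in the range exists.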

LIMIT slightly -- you can get duplicates. – Steiger

You can easily use a random offset with a limit

PREPARE stm from 'select * from table limit 10 offset ?';

SET @total = (select count(*) from table);

SET @_offset = FLOOR(RAND() * @total);

EXECUTE stm using @_offset;

You can also apply a where clause like so

PREPARE stm from 'select * from table where available=true limit 10 offset ?';

SET @total = (select count(*) from table where available=true);

SET @_offset = FLOOR(RAND() * @total);

EXECUTE stm using @_offset;

Tested on a 600,000-row (700MB) table; query execution took ~0.016 sec on an HDD.

EDIT: The offset might take a value close to the end of the table, which results in the select statement returning fewer rows (or maybe only 1 row). To avoid this, we can check the offset again after declaring it, like so:

SET @rows_count = 10;

PREPARE stm from "select * from table where available=true limit ? offset ?";

SET @total = (select count(*) from table where available=true);

SET @_offset = FLOOR(RAND() * @total);

SET @_offset = (SELECT IF(@total-@_offset<@rows_count,@_offset-@rows_count,@_offset));

SET @_offset = (SELECT IF(@_offset<0,0,@_offset));

EXECUTE stm using @rows_count,@_offset;

If you want one random record (no matter if there are gaps between ids):

PREPARE stmt FROM 'SELECT * FROM `table_name` LIMIT 1 OFFSET ?';

SET @count = (SELECT

FLOOR(RAND() * COUNT(*))

FROM `table_name`);

EXECUTE stmt USING @count;

[HY000][1210] Incorrect arguments to EXECUTE – Desiree

This is super fast and is 100% random even if you have gaps.

- Count the number x of rows that you have available: SELECT COUNT(*) as rows FROM TABLE
- Pick 10 distinct random numbers a_1,a_2,...,a_10 between 0 and x
- Query your rows like this: SELECT * FROM TABLE LIMIT 1 offset a_i for i=1,...,10

I found this hack in the book SQL Antipatterns from Bill Karwin.
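The three steps could look roughly like this in Python, with an in-memory SQLite table standing in for MySQL. The table name `items` and the helper `rows_at_random_offsets` are assumptions for the example. Offsets are drawn from 0 to x - 1, since an offset equal to x would skip past the last row.

```python
import random
import sqlite3


def rows_at_random_offsets(conn, n, rng=random):
    """Fetch up to n distinct rows, one LIMIT 1 OFFSET query per row."""
    (x,) = conn.execute("SELECT COUNT(*) FROM items").fetchone()
    # Distinct offsets in 0 .. x - 1; fewer than n if the table is small.
    offsets = rng.sample(range(x), min(n, x))
    rows = []
    for offset in offsets:
        row = conn.execute(
            "SELECT id, name FROM items ORDER BY id LIMIT 1 OFFSET ?",
            (offset,),
        ).fetchone()
        rows.append(row)
    return rows


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE items (id INTEGER PRIMARY KEY, name TEXT)")
# Only every third id exists, to show that gaps do not matter here.
conn.executemany(
    "INSERT INTO items (id, name) VALUES (?, ?)",
    [(i, f"row{i}") for i in range(3, 301, 3)],
)
picked = rows_at_random_offsets(conn, 10)
print([row[0] for row in picked])
```

The `ORDER BY id` is there so that a given offset always maps to the same row; without it the row order is up to the database.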

SELECT column FROM table ORDER BY RAND() LIMIT 10 is in O(nlog(n)). So yes, this is the fastest solution and it works for any distribution of ids. – Peres

x. I would argue that this is not a random generation of 10 rows. In my answer, you have to execute the query in step three 10 times, i.e. one only gets one row per execution and doesn't have to worry if the offset is at the end of the table. – Peres

The following should be fast, unbiased and independent of id column. However it does not guarantee that the number of rows returned will match the number of rows requested.

SELECT *

FROM t

WHERE RAND() < (SELECT 10 / COUNT(*) FROM t)

Explanation: assuming you want 10 rows out of 100 then each row has 1/10 probability of getting SELECTed which could be achieved by WHERE RAND() < 0.1. This approach does not guarantee 10 rows; but if the query is run enough times the average number of rows per execution will be around 10 and each row in the table will be selected evenly.
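To get a feel for how much the row count varies, here is a small Python simulation of the same per-row coin flip. It works on a plain list instead of a table, and `bernoulli_sample` is a made-up name. The seed is fixed only to make the run repeatable.

```python
import random


def bernoulli_sample(rows, wanted, rng=random):
    """Keep each row independently with probability wanted / len(rows)."""
    if not rows:
        return []
    p = wanted / len(rows)
    return [row for row in rows if rng.random() < p]


rng = random.Random(0)
rows = list(range(1, 601))  # stand-in for 600 row ids
sizes = [len(bernoulli_sample(rows, 10, rng)) for _ in range(1000)]
# Smallest, largest and average number of rows returned over 1000 runs.
print(min(sizes), max(sizes), sum(sizes) / len(sizes))
```

If exactly 10 rows are needed, one option is to ask for somewhat more than 10 and trim the result, accepting that an occasional run may still come up short.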

If you have just one Read-Request

Combine the answer of @redsio with a temp-table (600K is not that much):

DROP TEMPORARY TABLE IF EXISTS tmp_randorder;

CREATE TEMPORARY TABLE tmp_randorder (id int(11) not null auto_increment primary key, data_id int(11));

INSERT INTO tmp_randorder (data_id) select id from datatable;

And then take a version of @redsios Answer:

SELECT dt.*

FROM

(SELECT (RAND() *

(SELECT MAX(id)

FROM tmp_randorder)) AS id)

AS rnd

INNER JOIN tmp_randorder rndo on rndo.id between rnd.id - 10 and rnd.id + 10

INNER JOIN datatable AS dt on dt.id = rndo.data_id

ORDER BY abs(rndo.id - rnd.id)

LIMIT 1;

If the table is big, you can sieve on the first part:

INSERT INTO tmp_randorder (data_id) select id from datatable where rand() < 0.01;

If you have many read-requests

Version: You could keep the table tmp_randorder persistent, call it datatable_idlist. Recreate that table in certain intervals (day, hour), since it also will get holes. If your table gets really big, you could also refill holes:

select l.data_id as whole from datatable_idlist l left join datatable dt on dt.id = l.data_id where dt.id is null;

Version: Give your Dataset a random_sortorder column either directly in datatable or in a persistent extra table datatable_sortorder. Index that column. Generate a Random-Value in your Application (I'll call it $rand).

select l.* from datatable l order by abs(random_sortorder - $rand) desc limit 1;

This solution discriminates the 'edge rows' with the highest and the lowest random_sortorder, so rearrange them in intervals (once a day).

Another simple solution would be ranking the rows and fetch one of them randomly and with this solution you won't need to have any 'Id' based column in the table.

SELECT d.* FROM (

SELECT t.*, @rownum := @rownum + 1 AS rank

FROM mytable AS t,

(SELECT @rownum := 0) AS r,

(SELECT @cnt := (SELECT RAND() * (SELECT COUNT(*) FROM mytable))) AS n

) d WHERE rank >= @cnt LIMIT 10;

You can change the limit value as per your need to access as many rows as you want but that would mostly be consecutive values.

However, if you don't want consecutive random values then you can fetch a bigger sample and select randomly from it. something like ...

SELECT * FROM (

SELECT d.* FROM (

SELECT c.*, @rownum := @rownum + 1 AS rank

FROM buildbrain.`commits` AS c,

(SELECT @rownum := 0) AS r,

(SELECT @cnt := (SELECT RAND() * (SELECT COUNT(*) FROM buildbrain.`commits`))) AS rnd

) d

WHERE rank >= @cnt LIMIT 10000

) t ORDER BY RAND() LIMIT 10;

One way that I find pretty good, if there's an autogenerated id, is to use the modulo operator '%'. For example, if you need 10,000 random records out of 70,000, you could simplify this by saying you need 1 out of every 7 rows. This can be expressed in this query:

SELECT * FROM

table

WHERE

id %

FLOOR(

(SELECT count(1) FROM table)

/ 10000

) = 0;

If the result of dividing target rows by total available is not an integer, you will have some extra rows than what you asked for, so you should add a LIMIT clause to help you trim the result set like this:

SELECT * FROM

table

WHERE

id %

FLOOR(

(SELECT count(1) FROM table)

/ 10000

) = 0

LIMIT 10000;

This does require a full scan, but it is faster than ORDER BY RAND, and in my opinion simpler to understand than other options mentioned in this thread. Also, if the system that writes to the DB creates sets of rows in batches, you might not get such a random result as you were expecting.

I think here is a simple and yet faster way. I tested it on the live server in comparison with a few of the above answers and it was faster.

SELECT * FROM `table_name` WHERE id >= (SELECT FLOOR( MAX(id) * RAND()) FROM `table_name` ) ORDER BY id LIMIT 30;

//Took 0.0014secs against a table of 130 rows

SELECT * FROM `table_name` WHERE 1 ORDER BY RAND() LIMIT 30

//Took 0.0042secs against a table of 130 rows

SELECT name

FROM random AS r1 JOIN

(SELECT CEIL(RAND() *

(SELECT MAX(id)

FROM random)) AS id)

AS r2

WHERE r1.id >= r2.id

ORDER BY r1.id ASC

LIMIT 30

//Took 0.0040secs against a table of 130 rows

SELECT

*

FROM

table_with_600k_rows

WHERE

RAND( )

ORDER BY

id DESC

LIMIT 30;

id is the primary key and the result is sorted by id; EXPLAIN on table_with_600k_rows shows that the query does not scan the entire table.

What about retrieving rows up and down, joining them and then ordering by rand?

SELECT x.id FROM table_x x

INNER JOIN (

(SELECT id FROM table_x x WHERE x.id >= :id ORDER BY x.id ASC LIMIT :amount) UNION

(SELECT id FROM table_x x WHERE x.id <= :id ORDER BY x.id DESC LIMIT :amount)

) u ON u.id = x.id

ORDER BY RAND() LIMIT :amount

This way we are eliminating issues with holes in database, mitigating the problem of too high or too low random value (ID in this example) resulting in no rows found, minimizing the problem with some rows having bigger chance to be selected (I don't think this eliminates this problem but make it a little more fair compared to some other answers) and eliminating the problem of multiple queries to retrieve more than one row.

The issue is the LIMIT approach where we are randomizing on the selected cluster instead whole database if we want to retrieve more than one row at the time. We are still randomly selecting cluster and randomizing its content, so for the same ID we will get different result most of the time.

Another thing is your preference of the cluster size. In this example, if you want one row, the script will select one or two rows, put them in random order and select one from the top. But for your usage you could decide on static cluster size (like 200) and add to it the amount of rows to retrieve to make it more random:

(SELECT id FROM table_x x WHERE x.id >= :id ORDER BY x.id ASC LIMIT :amount + 200) UNION

(SELECT id FROM table_x x WHERE x.id <= :id ORDER BY x.id DESC LIMIT :amount + 200)

but now you are ordering by RAND 201-402+ rows (depending on the amount and selected ID) which is still good but requires more resources.

Note: The chosen ID must be between the minimal and maximal value in the DB, otherwise you might get zero results. Also, I think you should calculate min and max separately from this query and cache the results, then use them to generate the random ID. This way the query won't have to calculate min/max each time you make the call, saving time.

I use this query:

select floor(RAND() * (SELECT MAX(key) FROM table)) from table limit 10

query time:0.016s

This is how I do it:

select *

from table_with_600k_rows

where rand() < 10/600000

limit 10

I like it because it does not require other tables, it is simple to write, and it is very fast to execute.

Use the below simple query to get random data from a table.

SELECT user_firstname ,

COUNT(DISTINCT usr_fk_id) cnt

FROM userdetails

GROUP BY usr_fk_id

ORDER BY cnt ASC

LIMIT 10

I guess this is the best possible way..

SELECT id, id * RAND( ) AS random_no, first_name, last_name

FROM user

ORDER BY random_no
