"Depends on filesystem"

Some users mentioned that the performance impact depends on the filesystem used. Of course it does. Filesystems like EXT3 can be very slow. But even if you use EXT4 or XFS, you cannot prevent listing a folder with ls or find, or over an external connection like FTP, from becoming slower and slower.

Solution

I prefer the same approach as @armandino. For that I use this little PHP function to convert IDs into a file path that results in 1000 files per directory:

    function dynamic_path($int) {
        // 1000 = 1000 files per dir
        // 10000 = 10000 files per dir
        // 2 = 100 dirs per dir
        // 3 = 1000 dirs per dir
        // ceil() (not intval()) so that IDs 1..1000 map to "1/", as in the results below
        return implode('/', str_split((string) (int) ceil($int / 1000), 2)) . '/';
    }

Or you could use this second version if you want to use alphanumeric names:

    function dynamic_path2($str) {
        // 26 alpha + 10 num + 3 special chars (._-) = 39 possible characters
        // -1 = names in a dir differ only in the last char = about 39 files per dir
        // -2 = 39^2 = 1521 files per dir
        // -3 = 39^3 = 59319 files per dir (if every combination exists)
        $left = substr($str, 0, -1);
        return implode('/', str_split($left ? $left : $str[0], 2)) . '/';
    }

Results:

    <?php
    $files = explode(',', '1.jpg,12.jpg,123.jpg,999.jpg,1000.jpg,1234.jpg,1999.jpg,2000.jpg,12345.jpg,123456.jpg,1234567.jpg,12345678.jpg,123456789.jpg');
    foreach ($files as $file) {
        echo dynamic_path(basename($file, '.jpg')) . $file . PHP_EOL;
    }
    ?>

    1/1.jpg
    1/12.jpg
    1/123.jpg
    1/999.jpg
    1/1000.jpg
    2/1234.jpg
    2/1999.jpg
    2/2000.jpg
    13/12345.jpg
    12/4/123456.jpg
    12/35/1234567.jpg
    12/34/6/12345678.jpg
    12/34/57/123456789.jpg

    <?php
    $files = array_merge($files, explode(',', 'a.jpg,b.jpg,ab.jpg,abc.jpg,ddd.jpg,af_ff.jpg,abcd.jpg,akkk.jpg,bf.ff.jpg,abc-de.jpg,abcdef.jpg,abcdefg.jpg,abcdefgh.jpg,abcdefghi.jpg'));
    foreach ($files as $file) {
        echo dynamic_path2(basename($file, '.jpg')) . $file . PHP_EOL;
    }
    ?>

    1/1.jpg
    1/12.jpg
    12/123.jpg
    99/999.jpg
    10/0/1000.jpg
    12/3/1234.jpg
    19/9/1999.jpg
    20/0/2000.jpg
    12/34/12345.jpg
    12/34/5/123456.jpg
    12/34/56/1234567.jpg
    12/34/56/7/12345678.jpg
    12/34/56/78/123456789.jpg
    a/a.jpg
    b/b.jpg
    a/ab.jpg
    ab/abc.jpg
    dd/ddd.jpg
    af/_f/af_ff.jpg
    ab/c/abcd.jpg
    ak/k/akkk.jpg
    bf/.f/bf.ff.jpg
    ab/c-/d/abc-de.jpg
    ab/cd/e/abcdef.jpg
    ab/cd/ef/abcdefg.jpg
    ab/cd/ef/g/abcdefgh.jpg
    ab/cd/ef/gh/abcdefghi.jpg

As you can see, with the $int version every folder contains up to 1000 files plus roughly 100 subdirectories, and each of those again contains up to 1000 files plus roughly 100 subdirectories, and so on.

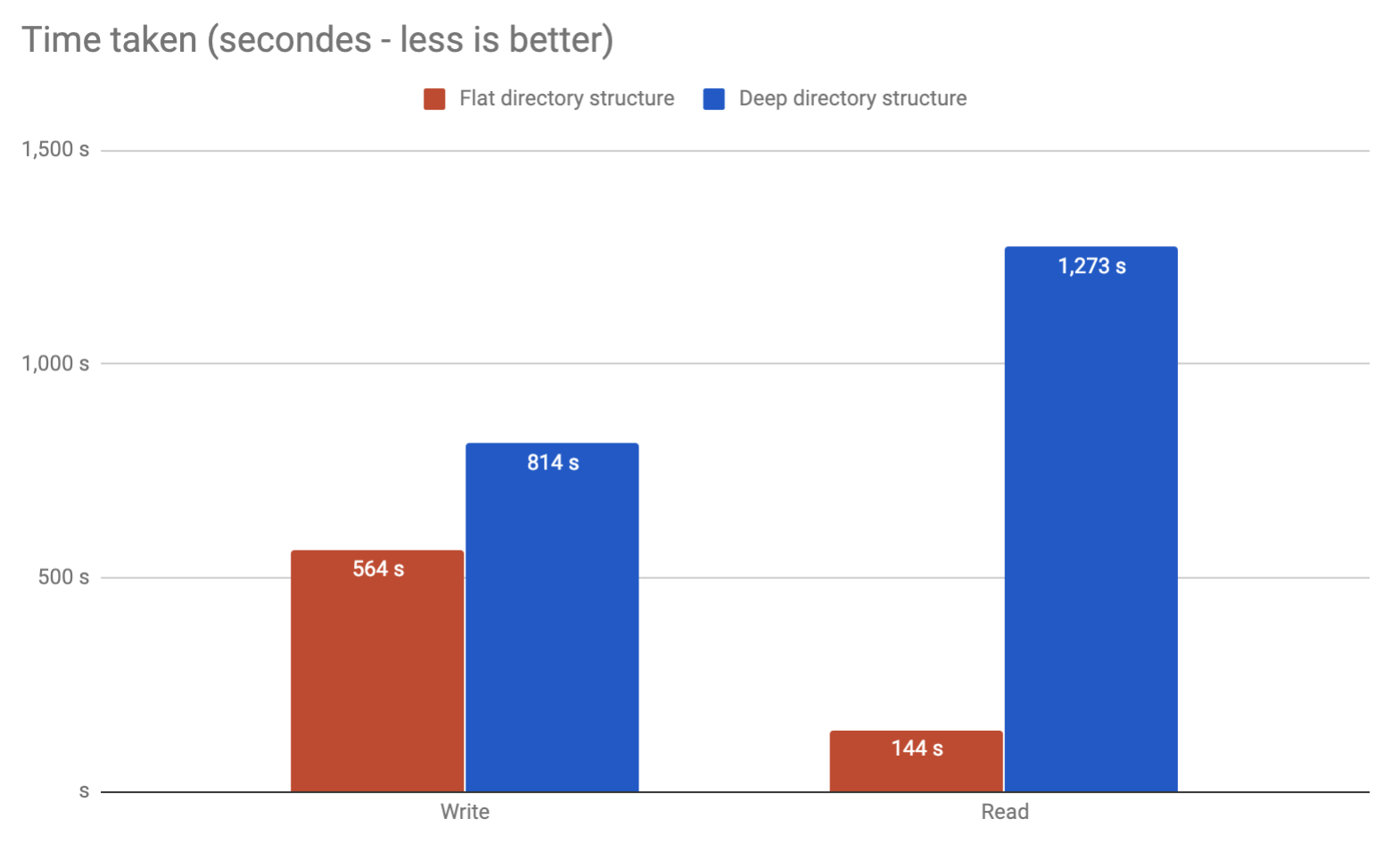
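If you want to double-check the limit of 1000 files per directory yourself, here is a minimal sketch. It assumes sequential integer IDs starting at 1 and uses a copy of the ID-to-path mapping written with ceil(), so that it reproduces the paths listed above. The range of 25000 IDs is just an example value.

```php
<?php
// Copy of the ID-to-path mapping, written with ceil() so that
// IDs 1..1000 end up in "1/", IDs 1001..2000 in "2/", and so on.
function dynamic_path(int $id): string {
    return implode('/', str_split((string) (int) ceil($id / 1000), 2)) . '/';
}

// Count how many of the IDs 1..25000 (assumed example range) land in each directory.
$counts = [];
for ($id = 1; $id <= 25000; $id++) {
    $dir = dynamic_path($id);
    $counts[$dir] = ($counts[$dir] ?? 0) + 1;
}

echo count($counts) . ' directories, at most ' . max($counts) . ' files each' . PHP_EOL;
```

For this range it reports 25 directories with at most 1000 files each.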

But do not forget that too many directories cause the same performance problems!
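To get a feeling for that trade-off, the following rough sketch estimates how many directories the $int version creates. It assumes sequential IDs starting at 1, in which case every directory in the tree holds exactly one bucket of files. The helper name bucket_count and the total of ten million files are made up for illustration.

```php
<?php
// Hypothetical helper, not part of the functions above: with sequential IDs
// starting at 1, the number of directories equals the number of buckets.
function bucket_count(int $totalFiles, int $filesPerDir): int {
    return (int) ceil($totalFiles / $filesPerDir);
}

$totalFiles = 10000000; // assumed example size: ten million files
foreach ([1000, 10000] as $filesPerDir) {
    echo $filesPerDir . ' files per dir: '
        . bucket_count($totalFiles, $filesPerDir) . ' directories' . PHP_EOL;
}
```

So ten million files need 10000 directories at 1000 files per directory, but only 1000 directories at 10000 files per directory. Which side of the trade-off hurts less depends on your filesystem and on how you access the files.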

Finally, you should think about how to reduce the total number of files. Depending on your use case, you can use CSS sprites to combine multiple tiny images like avatars, icons, smilies, etc., or, if you have many small non-media files, consider combining them, e.g. in JSON format. In my case I had thousands of mini-caches, and in the end I decided to combine them in packs of 10.
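The packing idea could look roughly like this. It is only a sketch under my own assumptions, not the code I actually ran: the helper pack_number, the pack_N.json file names and the example entries are hypothetical, and it assumes integer IDs starting at 1.

```php
<?php
// Hypothetical helper: IDs 1..10 share pack 1, IDs 11..20 share pack 2, and so on.
function pack_number(int $id, int $packSize = 10): int {
    return intdiv($id - 1, $packSize) + 1;
}

// Made-up example cache entries (id => value).
$entries = [1 => 'a', 2 => 'b', 10 => 'c', 11 => 'd', 25 => 'e'];

// Group the entries by pack number.
$packs = [];
foreach ($entries as $id => $value) {
    $packs[pack_number($id)][$id] = $value;
}

// Each pack would be stored as one JSON file instead of up to 10 tiny files.
foreach ($packs as $number => $pack) {
    echo "pack_{$number}.json: " . json_encode($pack) . PHP_EOL;
}
```

Reading a single entry then means loading its pack and picking the ID out of the decoded array, which costs a bit more per read but cuts the number of files by up to a factor of 10.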